A peek at processors in artificial intelligence - Dr. Zhang Xin

2021-01-22

With the rapid development of Artificial Intelligence (AI) and deep learning technology, more and more new chip terms, such as GPU, TPU, DPU, and NPU, have come into public view [1]. What are these chips? What are they for? How do they differ?

1. Introduction

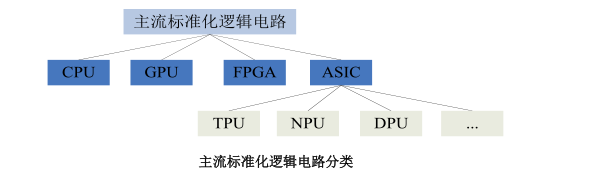

At present, the world's mainstream standardized logic circuits fall into four kinds:

CPU (Central Processing Unit);

GPU (Graphics Processing Unit);

ASIC (Application Specific Integrated Circuit);

FPGA (Field Programmable Gate Array).

As the computing and control core of a computer system, the CPU is the final execution unit for information processing and program execution. Since its birth, it has made great progress in logical structure, operating efficiency, and functional extension [2]. AI chips are mainly GPUs, and can also be implemented with FPGAs and ASICs, which are semi-custom and fully custom, respectively. GPUs are aimed at advanced, complex algorithms and general-purpose AI platforms. The FPGA, a semi-custom circuit within the ASIC field, both remedies the shortcomings of fully custom circuits and overcomes the limited gate count of earlier programmable devices. It suits a wide range of specific industries and allows fast iteration at low cost. The ASIC is a fully custom chip: as algorithms grow more complex, they call for chip architectures designed specifically for them. ASICs customized for AI algorithms have good development prospects. Popular AI chips such as the TPU (Tensor Processing Unit), NPU (Neural Network Processing Unit), and DPU (Deep learning Processing Unit) are all ASICs. An ASIC effectively solidifies software into hardware, making the hardware naturally suited to a particular kind of computation. Beyond these, brain-inspired chips are regarded as the ultimate development model for AI, but they are still far from industrialization [3].

2. Processor background and architectural features

2.1 CPU

The CPU is the "brain" of the machine and its "commander-in-chief": it plans the layout, gives orders, and controls actions.

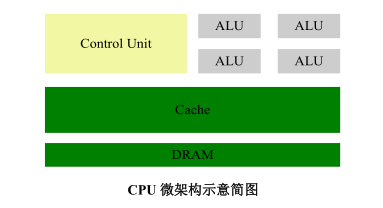

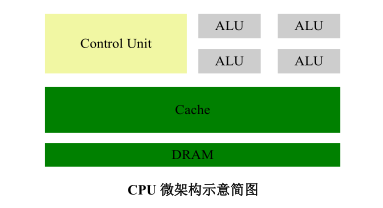

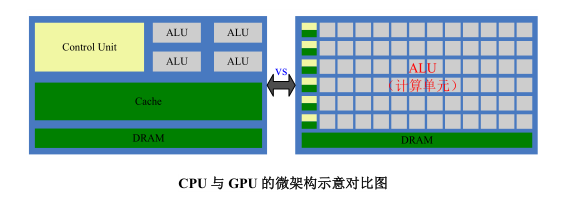

The CPU's structure mainly includes the ALU (Arithmetic and Logic Unit), the Control Unit (CU), registers, caches, and the data, control, and status buses that connect them. In short, it consists of a computing unit, a control unit, and a storage unit. The schematic diagrams are shown in the figures.

In the figure, DRAM stands for Dynamic Random Access Memory. The roles of the units follow from their names: the computing unit mainly performs arithmetic operations, shift operations, and address calculation and translation; the storage unit mainly stores the data and instructions produced during computation; and the control unit decodes instructions and issues the control signals for each operation needed to complete them. An instruction therefore executes in the CPU as follows: after it is fetched, it travels over the instruction bus to the controller (yellow area) for decoding, and the corresponding control signals are issued; the arithmetic unit (gray area) then operates on the data as instructed, and the results are stored in the data cache (large green area) via the data bus.
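To make the fetch-decode-execute cycle concrete, here is a minimal Python sketch of a toy stored-program machine. The instruction set (`LOAD`, `ADD`, `STORE`, `HALT`), the single accumulator register, and the function name `run` are hypothetical simplifications, not any real CPU's ISA. The point is only that instructions and data share one memory and are processed one at a time, in order.

```python
# A toy stored-program (von Neumann-style) machine.
# Hypothetical ISA for illustration only: LOAD addr, ADD addr, STORE addr, HALT.
def run(memory):
    pc = 0    # program counter: address of the next instruction
    acc = 0   # accumulator register (stands in for the register file)
    while True:
        # Fetch: read the instruction at address pc from the shared memory.
        opcode, operand = memory[pc]
        pc += 1
        # Decode + execute: the "control unit" selects what the "ALU" does.
        if opcode == "LOAD":
            acc = memory[operand]
        elif opcode == "ADD":
            acc = acc + memory[operand]
        elif opcode == "STORE":
            memory[operand] = acc   # write the result back to memory
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode!r}")

# Program and data share one memory: addresses 0-3 hold code, 4-6 hold data.
memory = [
    ("LOAD", 4),    # acc = memory[4]
    ("ADD", 5),     # acc += memory[5]
    ("STORE", 6),   # memory[6] = acc
    ("HALT", None),
    2, 3, 0,
]
print(run(memory)[6])  # 5
```

Because every step waits for the previous one, the loop also illustrates why a purely sequential design struggles when there is a huge amount of uniform work to do.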

The CPU follows the von Neumann architecture, whose core ideas are stored programs and sequential execution. The CPU microarchitecture schematic shows that the gray computing area is relatively small, while the green cache and the yellow control unit take up a relatively large share of the space. Because so much of the CPU is devoted to storage (green) and control (yellow) rather than computation (gray), its capacity for massively parallel computation is very limited, and it is better at logic control. And because it follows the von Neumann architecture (stored programs, sequential execution), the CPU is like a disciplined steward that always does what it is told, step by step. But as demand grew for larger and faster processing, the steward began to struggle. So people wondered: why not put many processors on the same chip and let them work together to improve efficiency? Thus the GPU was born.

2.2 GPU

Before discussing GPUs, let's look at a concept mentioned above: parallel computing.

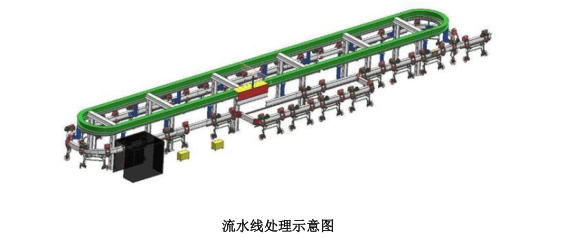

Parallel Computing refers to the process of solving Computing problems by using multiple Computing resources at the same time. It is an effective means to improve the Computing speed and processing power of computer systems. Its basic idea is to use multiple processors to solve the same problem, that is, the problem to be solved is decomposed into several parts, each part by an independent processor to compute in parallel. Pipeline processing diagram
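The decomposition idea can be sketched with Python's standard `concurrent.futures` module: one large summation is split into nearly equal chunks, and each chunk goes to a separate worker. The helper names are illustrative. Threads keep the sketch self-contained, but in CPython the global interpreter lock stops threads from running pure-Python arithmetic truly in parallel, so a real CPU-bound job would use `ProcessPoolExecutor` or native code.

```python
from concurrent.futures import ThreadPoolExecutor

def partial_sum_of_squares(lo, hi):
    # Each worker independently handles its own slice [lo, hi).
    return sum(i * i for i in range(lo, hi))

def parallel_sum_of_squares(n, workers=4):
    # Decompose [0, n) into contiguous, nearly equal parts (at most `workers`).
    step = max(1, (n + workers - 1) // workers)
    bounds = [(lo, min(lo + step, n)) for lo in range(0, n, step)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = pool.map(lambda b: partial_sum_of_squares(*b), bounds)
        # Combine the independent partial results.
        return sum(parts)

print(parallel_sum_of_squares(10))  # 285
```

The pattern is the same whatever the worker is: split, compute the parts independently, then combine.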

Parallel computing can be divided into temporal parallelism and spatial parallelism. Temporal parallelism refers to pipelining, as on a car assembly line with stages such as stamping, welding, painting, and powertrain assembly. Without an assembly line, one car must go through all the operations before the next one can be started, which is inefficient. With an assembly line, several cars are in production at the same time, each at a different stage. This is the temporal parallelism of parallel algorithms: two or more operations can be in progress at once, which can greatly improve computing performance.

Spatial parallelism refers to multiple processors executing computations concurrently: two or more processors are connected through a network so that they simultaneously compute different parts of the same task, or solve large, complex problems that a single processor cannot. For example, Xiao Zhang needs to drain his four ponds. With one pump this takes 8 hours, but with four pumps draining all four ponds simultaneously it takes only 2 hours. This is spatial parallelism: splitting a large task into multiple identical subtasks to speed up problem solving. If the CPU did the pumping, it would drain the ponds one by one; the GPU is like having several pumps working at the same time.

GPUs were originally microprocessors that performed graphics computations on personal computers, workstations, game consoles, and some mobile devices (such as tablets and smartphones). Why are GPUs so good at processing image data? Because every pixel of an image needs to be processed, and the processing for each pixel is very similar, which makes image processing a natural fit for the GPU. A simplified GPU architecture is shown in the figure below:
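The two kinds of parallelism can be compared with back-of-the-envelope arithmetic. This Python sketch assumes idealized conditions: every pipeline stage takes the same time, there are no stalls, and the pumps never interfere with each other. The 4-stage, 10-car assembly line is a hypothetical example. For the ponds, it assumes the 8 hours for one pump split evenly into 2 hours per pond.

```python
import math

def sequential_time(items, stages, stage_time):
    # No pipelining: each item passes through all stages before the next starts.
    return items * stages * stage_time

def pipelined_time(items, stages, stage_time):
    # Temporal parallelism: after the first item fills the pipeline
    # (stages * stage_time), one finished item emerges every stage_time.
    return (stages + items - 1) * stage_time

def spatial_time(tasks, task_time, workers):
    # Spatial parallelism: identical independent tasks run side by side.
    return math.ceil(tasks / workers) * task_time

# Assembly line: 4 hypothetical stages of 1 hour each, 10 cars.
print(sequential_time(10, 4, 1))  # 40
print(pipelined_time(10, 4, 1))   # 13

# Xiao Zhang's ponds: 4 ponds, assumed 2 hours per pond.
print(spatial_time(4, 2, 1))  # 8
print(spatial_time(4, 2, 4))  # 2
```

The pipeline speedup approaches the number of stages only when many items flow through. The spatial speedup is limited by how many identical subtasks there are to hand out.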

The architecture diagram shows that the GPU is relatively simple in composition, with a large number of computing units and a long pipeline, which makes it particularly suitable for processing large amounts of data of a uniform type. However, the GPU cannot work on its own; it must be controlled by the CPU. The CPU can work independently to handle complex logic and diverse data types, and when a large amount of data of the same type needs processing, it can call on the GPU for parallel computation.
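The CPU/GPU division of labour is usually described as a host (the CPU) launching one small function, a "kernel", over a grid of threads, one thread per data element. This Python sketch models that idea sequentially. `brighten_kernel`, `launch`, and the block size of 4 are hypothetical names and values, and no GPU is involved. On real hardware, for example through CUDA, the per-thread calls would run concurrently.

```python
def brighten_kernel(thread_id, pixels, out, delta):
    # Every "thread" runs the same code on its own pixel, like a GPU kernel.
    if thread_id < len(pixels):   # guard: the grid may be larger than the data
        out[thread_id] = min(255, pixels[thread_id] + delta)

def launch(kernel, num_threads, *args):
    # Stand-in for the CPU (host) launching a kernel: here we simply loop,
    # whereas a GPU would execute these independent calls in parallel.
    for tid in range(num_threads):
        kernel(tid, *args)

# Host side: the CPU prepares the data, launches the work, and collects results.
pixels = [10, 100, 250, 30, 0]
out = [0] * len(pixels)
block_size = 4
num_blocks = (len(pixels) + block_size - 1) // block_size  # round up to whole blocks
launch(brighten_kernel, num_blocks * block_size, pixels, out, 20)
print(out)  # [30, 120, 255, 50, 20]
```

Because no thread depends on another thread's result, the order of the calls does not matter. That independence is exactly what lets a GPU run them all at once.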

A GPU has many ALUs and only a small cache. Unlike a CPU cache, this cache is not mainly for holding data that will be accessed again later; it serves the threads. If multiple threads need to access the same data, the cache consolidates those accesses before going to DRAM. Placing the CPU and GPU in the same diagram makes the contrast very clear:
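How consolidating thread accesses saves DRAM traffic can be modelled by counting how many distinct memory segments a group of simultaneous accesses touches. This is a simplified model of our own, not a description of any specific GPU's memory system. The group of 32 threads and the 128-byte segment size are illustrative assumptions; such parameters vary between architectures.

```python
def dram_transactions(addresses, segment_bytes=128):
    # Accesses that fall into the same aligned segment are merged
    # into a single DRAM transaction; each distinct segment costs one.
    return len({addr // segment_bytes for addr in addresses})

FLOAT_BYTES = 4
threads = range(32)

# Neighbouring threads read neighbouring 4-byte floats: 32 * 4 = 128 bytes.
contiguous = [t * FLOAT_BYTES for t in threads]
# Each thread reads a float 128 bytes away from its neighbour's.
strided = [t * 128 for t in threads]
# All threads read the very same value.
same = [0 for _ in threads]

print(dram_transactions(contiguous))  # 1
print(dram_transactions(strided))     # 32
print(dram_transactions(same))        # 1
```

The same 32 reads can cost one memory transaction or thirty-two, depending only on how the threads' addresses line up.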

Most GPU work consists of large volumes of simple computation repeated many, many times. Although GPUs were created for image processing and are best known for gaming, they are increasingly popular for scientific computing, cryptography, massive data processing, and other applications, and can be considered a more general-purpose chip. Some of the most exciting applications of GPU technology are in artificial intelligence and machine learning. Because GPUs pack so much computing power, workloads that exploit their highly parallel nature, such as image recognition, can be accelerated dramatically. Many of today's deep learning technologies rely on GPUs working together with CPUs.

2.3 TPU

Both CPUs and GPUs are relatively general-purpose chips. As computing needs become more and more specialized, people want chips that better meet those specialized needs. This is where the concept of the ASIC (Application Specific Integrated Circuit) came in.

An ASIC is an integrated circuit designed and manufactured to the requirements of a specific product, user, or electronic system. Because an ASIC is "focused" and does only one thing, it can do that thing better than CPUs, GPUs, and other chips that do many things, achieving higher processing speed and lower power consumption. However, the cost of producing an ASIC is also very high. The TPU (Tensor Processing Unit) is a chip that Google developed specifically to accelerate deep neural network computation; it, too, is an ASIC.

Most machine learning and image processing algorithms used to run on GPUs and FPGAs (semi-custom chips). But both are still relatively general-purpose, so their efficiency and power consumption are not closely matched to machine learning algorithms. Google has long believed that great software shines even brighter with great hardware, so it set out to build a chip dedicated to machine learning algorithms. Thus the TPU was born.

TPUs are said to offer 15-30 times higher performance and 30-80 times higher efficiency (performance per watt) than comparable CPUs and GPUs. The first-generation TPU could only perform inference, running in Google's cloud to process data and return results in real time, while training required additional resources. The second-generation TPU can be used both for training neural networks and for inference. Why does the TPU perform so well?

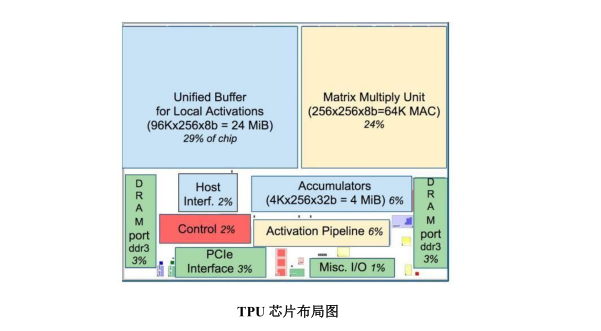

As shown in the figure, the TPU devotes 24 MB to local memory and 4 MB to accumulator memory, plus memory for interfacing with the host processor; together, the on-chip memory takes up 37% of the chip area (shown in blue). This shows that Google recognized off-chip memory access as the main cause of the GPU's poor energy efficiency, so it put a large amount of memory on the chip regardless of cost. By contrast, Nvidia's K80 of the same period had only 8 MB of on-chip memory and therefore needed constant access to off-chip DRAM. The TPU's high performance also comes from its tolerance of low computational precision. Studies show that low-precision arithmetic causes very little loss in algorithm accuracy, yet it brings major benefits in hardware: lower power consumption, higher speed, smaller computing units, and lower memory bandwidth requirements. The TPU uses 8-bit low-precision arithmetic.
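The low-precision idea can be illustrated with a small 8-bit quantization sketch in Python. Floating-point values are mapped to integers in [-127, 127] with one scale factor per vector. The dot product is then computed with integer multiplies and an integer accumulator, and the result is scaled back once. This symmetric per-vector scheme is a common textbook choice and an assumption here, not a description of the TPU's exact method. The sample vectors are arbitrary.

```python
def quantize(values, bits=8):
    # Symmetric linear quantization: map [-max|x|, max|x|] onto [-qmax, qmax].
    qmax = 2 ** (bits - 1) - 1            # 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs else 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale

def int8_dot(xq, x_scale, wq, w_scale):
    # Integer multiply-accumulate (hardware would use a wide accumulator,
    # e.g. 32 bits), followed by one floating-point rescale at the end.
    acc = sum(a * b for a, b in zip(xq, wq))
    return acc * x_scale * w_scale

x = [0.12, -0.50, 0.33, 0.90]   # e.g. activations
w = [0.40, 0.25, -0.70, 0.05]   # e.g. weights

exact = sum(a * b for a, b in zip(x, w))
xq, xs = quantize(x)
wq, ws = quantize(w)
approx = int8_dot(xq, xs, wq, ws)
print(xq, wq)
print(f"float: {exact:.4f}  int8: {approx:.4f}")
```

Here the 8-bit result differs from the floating-point one only in the third decimal place, while every multiply works on small integers. Small integers are what make the hardware units cheaper, faster, and lower-power.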

2.4 NPU

NPU stands for Neural Network Processing Unit, that is, a neural network processor. As the name suggests, it is designed to use circuits to simulate the structure of human neurons and synapses. How can they be imitated? Start with the human neural structure: biological neural networks are made up of neurons connected by synapses, which record the connections between neurons.

To mimic a human neuron with a circuit, each neuron must be abstracted into an activation function whose input is determined by the outputs of the neurons connected to it and by the synapses that connect them. To express specific knowledge, users usually need to adjust the synaptic weights and the network topology of the artificial neural network (ANN). This process is called "learning." After learning, the ANN can use the acquired knowledge to solve a specific problem.
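The neuron model and the notion of "learning" above fit in a few lines of Python. This sketch uses a single neuron with a step activation and the classic perceptron update rule, trained on the logical AND function. The activation, the learning rate, and the training data are illustrative assumptions. Modern deep learning instead uses differentiable activations trained by backpropagation.

```python
def step(z):
    # Activation ("excitation") function: fire (1) if the input exceeds 0.
    return 1 if z > 0 else 0

def neuron(inputs, weights, bias):
    # Weighted sum of upstream outputs; the weights play the role of synapses.
    return step(sum(x * w for x, w in zip(inputs, weights)) + bias)

def train_perceptron(samples, epochs=20, lr=1.0):
    # "Learning": repeatedly nudge the synaptic weights to reduce errors.
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - neuron(inputs, weights, bias)
            weights = [w + lr * error * x for w, x in zip(weights, inputs)]
            bias += lr * error
    return weights, bias

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND)
print([neuron(x, weights, bias) for x, _ in AND])  # [0, 0, 0, 1]
```

After training, the "knowledge" of the AND function lives entirely in the weights and bias. This is the sense in which storage and processing are intertwined in a neural network.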

You may have already noticed the problem. The basic operations of deep learning are the processing of neurons and synapses, whereas traditional instruction-set processors (including x86 and ARM) were developed for general-purpose computing. Their basic operations are arithmetic (addition, subtraction, multiplication, and division) and logic (AND, OR, NOT), so processing a single neuron often takes hundreds or even thousands of instructions, and deep learning runs inefficiently on them. Something different is needed: a break from the classic von Neumann architecture. In a neural network, storage and processing are integrated, both embodied in the synaptic weights. In the von Neumann architecture, storage and processing are separate, implemented by memory and the arithmetic unit respectively, and the two are very different. When existing classical computers based on the von Neumann architecture (such as x86 processors and Nvidia GPUs) run neural network applications, they are inevitably constrained by this separation of storage and processing, which reduces efficiency. This is one reason why specialized AI chips have certain inherent advantages over traditional chips.
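A rough count shows why hundreds or thousands of instructions per neuron add up. This Python sketch compares two hypothetical machines. One is a scalar processor that issues one instruction per load, multiply, or add. The other has an NPU-style instruction that processes a whole tile of neurons and synapses at once. The per-input cost of 4, the activation allowance of 5, and the 16 × 16 tile are all illustrative assumptions, not figures from x86, ARM, or any NPU manual.

```python
import math

def scalar_instructions(num_inputs, ops_per_input=4, activation_ops=5):
    # Hypothetical scalar ISA: per input, load x, load w, multiply, add.
    # activation_ops is a rough allowance for the activation function.
    return num_inputs * ops_per_input + activation_ops

def group_instructions(num_neurons, num_inputs, group_neurons=16, group_inputs=16):
    # Hypothetical NPU-style instruction: one instruction consumes a tile of
    # group_neurons x group_inputs synapses at once.
    return math.ceil(num_neurons / group_neurons) * math.ceil(num_inputs / group_inputs)

# One layer: 256 neurons, each with 256 inputs.
neurons, inputs = 256, 256
print(scalar_instructions(inputs))             # 1029 (per neuron)
print(neurons * scalar_instructions(inputs))   # 263424 (whole layer)
print(group_instructions(neurons, inputs))     # 256 (whole layer)
```

The exact ratio depends entirely on the assumed costs. The point is the shape of the result: matching the instruction to the neural-network operation removes roughly three orders of magnitude of instruction overhead in this toy setting.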

Take China's Cambricon (a typical domestic representative of the NPU) as an example: its DianNaoYu instructions directly target the processing of large-scale neurons and synapses. A single instruction can process a whole group of neurons, and there is a range of specialized support for moving neuron and synapse data on the chip. In numerical terms, the gap in performance or energy efficiency between CPUs, GPUs, and NPUs can exceed a hundredfold. Consider the DianNao paper published earlier by the Cambricon team together with INRIA: DianNao is a single-core processor with a clock frequency of 0.98 GHz, a peak performance of 452 billion basic neural network operations per second, a power consumption of 0.485 W, and an area of 3.02 mm² in a 65 nm process.
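The DianNao figures quoted above can be turned into the usual efficiency metrics with simple arithmetic. This Python sketch uses only the numbers in the text. The derived values are plain ratios of those numbers, not additional results from the paper.

```python
peak_gops = 452.0   # billion basic neural-network operations per second
power_w = 0.485     # watts
area_mm2 = 3.02     # square millimetres at 65 nm
clock_ghz = 0.98    # clock frequency

gops_per_watt = peak_gops / power_w     # energy efficiency
gops_per_mm2 = peak_gops / area_mm2     # area efficiency
ops_per_cycle = peak_gops / clock_ghz   # operations completed each clock cycle

print(f"{gops_per_watt:.0f} GOP/s per W")           # 932 GOP/s per W
print(f"{gops_per_mm2:.1f} GOP/s per mm^2")         # 149.7 GOP/s per mm^2
print(f"{ops_per_cycle:.0f} operations per cycle")  # 461 operations per cycle
```

Roughly 460 operations completed every cycle, at under half a watt, is what a wide, neuron-oriented datapath buys compared with a scalar pipeline.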

2.5 HPU

The Holographic Processing Unit (HPU) is a coprocessor chip that works alongside a CPU and a GPU. It is integrated into HoloLens, Microsoft's mixed-reality headset, and allows HoloLens to superimpose virtual holographic objects on the real world (augmented reality). In devices such as HoloLens, the processor determines the user's current field of view, maps the surrounding environment, and processes body posture and voice commands [5].

3. Outlook

Software algorithms are already well optimized, but hardware seems to lag somewhat behind the demands of big-data processing and AI applications in the information age. Because the computing power of traditional CPUs is insufficient, developing new chip architectures to support AI applications is an inevitable trend. At present there are two paths for optimizing and upgrading AI-oriented hardware. (1) Keep the traditional computing architecture and accelerate the hardware's computing capability, as represented by GPU, FPGA, and ASIC (TPU, NPU, HPU, etc.) chips; these dedicated chips act as assistants that perform AI-related computations under the control of the CPU. (2) Completely overturn the traditional computing architecture and increase computing power by simulating the structure of neurons in the human brain. Because of limitations in technology and the underlying hardware, the latter approach is still at an early stage of research and development and is not yet feasible for large-scale commercial application.

References:

[1] https://www.icinsights.com/

[2] https://baike.baidu.com/item/CPU

[3] https://www.zhihu.com/question/294757410/answer/1539569407

[4] http://www.pc6.com/infoview/Article_95570.html

[5] https://baike.baidu.com/item/全息处理器