The Combination of Virtual Reality and Brain-Computer Interfaces and Its Applications - Dr. Peng Fulai

2021-01-22

1. Introduction

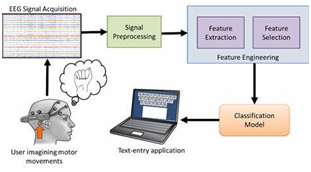

With the continuous development of Virtual Reality (VR) and Brain-Computer Interface (BCI) technology, the way humans interact with the world is quietly changing. Combining VR and BCI lets the two technologies complement each other (Figure 1), and the combination has recently emerged as a new application mode in medical rehabilitation, multimedia, and entertainment, with broad prospects.

Virtual reality technology uses a computer to build a three-dimensional spatial model and generate a virtual world that can be perceived comprehensively, mainly through vision and secondarily through hearing and touch, so that users feel immersed in the environment and can interact with it and the objects in it. Virtual reality has three characteristics: (1) Immersion: users are placed inside the virtual environment and feel surrounded by the virtual world, as if they were really there; (2) Interactivity: users can interact naturally with objects in the virtual environment; (3) Imagination: VR can not only reproduce the real world but also go beyond it, creating entirely new worlds from people's imagination and broadening their cognitive range.

At present, people interact with virtual environments mainly through a mouse, keyboard, joystick, and similar devices. These interaction modes are relatively limited and detract from the immersive experience of VR; a new interaction mode could further enhance users' sense of immersion [1].

Figure 1. Virtual reality and brain-computer interface

A BCI is a direct communication pathway between the human brain and external devices that does not depend on the brain's conventional output pathways (peripheral nerves and muscles); it is an entirely new technology for exchanging information with, and controlling, the outside world [2]. A BCI acquires EEG signals, processes them through a series of steps such as denoising, feature extraction, and classification to identify the user's intention, and finally converts that intention into control commands for peripheral devices, so that external devices can be controlled directly by the brain, as shown in Figure 2. BCIs acquire brain signals in one of two ways: invasive or non-invasive. The invasive approach surgically implants microelectrode sensors into the cerebral cortex; this avoids attenuation of the signals by the skull and yields high-quality recordings, but the surgical risk is high and unacceptable to most people. The non-invasive approach records EEG from the scalp. Although the signal quality is relatively poor, it requires no surgery and is safe, portable, and widely acceptable, which makes it the mainstream approach at present.
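The processing chain just described (denoising, feature extraction, classification, conversion into a command) can be sketched in Python as below. This is a minimal illustration under stated assumptions, not how any particular BCI is built: the 250 Hz sampling rate, the FFT-mask band-pass filter standing in for denoising, log band power as the feature, the nearest-class-mean classifier, the synthetic two-channel data, and the command names TURN_LEFT/TURN_RIGHT are all hypothetical choices for this example.

```python
import numpy as np

FS = 250  # sampling rate in Hz (assumed for this sketch)

def denoise(window, lo=8.0, hi=30.0, fs=FS):
    """Crude band-pass 'denoising': zero all FFT bins outside lo-hi Hz."""
    spec = np.fft.rfft(window, axis=-1)
    freqs = np.fft.rfftfreq(window.shape[-1], d=1.0 / fs)
    spec[..., (freqs < lo) | (freqs > hi)] = 0.0
    return np.fft.irfft(spec, n=window.shape[-1], axis=-1)

def extract_features(window):
    """Feature extraction: log band power of each channel of a (channels, samples) window."""
    return np.log(np.mean(window ** 2, axis=-1))

class NearestMeanClassifier:
    """Classification: assign a feature vector to the class with the closest mean."""
    def fit(self, feats, labels):
        labels = np.asarray(labels)
        self.classes_ = sorted(set(labels.tolist()))
        self.means_ = np.array([feats[labels == c].mean(axis=0) for c in self.classes_])
        return self

    def predict(self, feat):
        dists = np.linalg.norm(self.means_ - feat, axis=1)
        return self.classes_[int(np.argmin(dists))]

# Hypothetical mapping from recognized intention to a peripheral-device command.
COMMANDS = {0: "TURN_LEFT", 1: "TURN_RIGHT"}

def window_to_command(window, clf):
    """Full chain: raw EEG window -> denoised -> features -> class -> command."""
    return COMMANDS[clf.predict(extract_features(denoise(window)))]

# Usage with synthetic data: class 0 has a strong 10 Hz rhythm on channel 0,
# class 1 on channel 1.
rng = np.random.default_rng(0)
t = np.arange(FS) / FS  # one-second windows

def make_window(strong_channel):
    w = 0.1 * rng.standard_normal((2, t.size))
    w[strong_channel] += np.sin(2 * np.pi * 10 * t)
    return w

train = [make_window(0) for _ in range(10)] + [make_window(1) for _ in range(10)]
labels = [0] * 10 + [1] * 10
feats = np.array([extract_features(denoise(w)) for w in train])
clf = NearestMeanClassifier().fit(feats, labels)
print(window_to_command(make_window(1), clf))  # TURN_RIGHT
```

Real systems replace each stage with something more robust (proper IIR/FIR filters, artifact removal, trained spatial filters), but the shape of the chain stays the same.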

BCIs have already made some progress in medical rehabilitation. For example, a BCI can help stroke patients rebuild limb motor function, or provide new assistive functions that let people with disabilities control prosthetic limbs and wheelchairs, spell and type, shop online, and so on [3]. However, current BCIs still cannot provide effective visual feedback, which leads to poor training outcomes for subjects [4].

Combining BCI and VR can solve this problem. On the one hand, VR can serve as the feedback tool of a BCI system: compared with traditional BCI feedback, VR can provide feedback in more realistic, richer, more engaging, and more motivating situations. On the other hand, a BCI can serve as an input device for VR: compared with traditional interaction devices such as a mouse or joystick, a BCI can provide more direct and faster input, making the VR experience more immersive [2].

Figure 2. Schematic diagram of brain-computer interface technology

2. Composition of the BCI-VR system

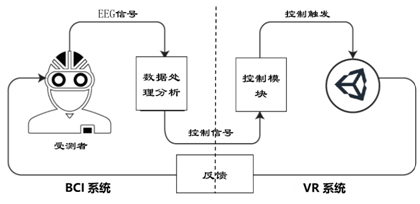

The BCI-VR system was first proposed by Bayliss et al. in 2000. A BCI-VR system is a typical closed-loop system: feedback links the output back to the input and tells the participant whether the intended action was carried out correctly. It consists mainly of a BCI system, a VR system, and a communication interface between the two.

BCI system: The BCI consists of an EEG acquisition module and intelligent EEG analysis software. The acquisition module records the subject's EEG non-invasively from the scalp; its number of channels and electrode positions are adjusted to the application. The analysis software processes the EEG through a series of steps, such as denoising, feature extraction, feature selection, and classification of the mental state, to generate control commands for external devices.
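Feature selection, one of the analysis steps listed above, can be illustrated with a Fisher score ranking, which keeps the features whose class means are far apart relative to their within-class spread. This is one common choice, assumed here for illustration; the text does not say which selection method BCI-VR systems use, and the synthetic data below are hypothetical.

```python
import numpy as np

def fisher_scores(feats, labels):
    """Fisher score of each feature for a two-class problem:
    (mean_a - mean_b)^2 / (var_a + var_b)."""
    feats = np.asarray(feats, dtype=float)
    labels = np.asarray(labels)
    a, b = np.unique(labels)  # assumes exactly two classes
    fa, fb = feats[labels == a], feats[labels == b]
    num = (fa.mean(axis=0) - fb.mean(axis=0)) ** 2
    den = fa.var(axis=0) + fb.var(axis=0) + 1e-12  # epsilon avoids division by zero
    return num / den

def select_features(feats, labels, k):
    """Indices of the k most discriminative features, best first."""
    return np.argsort(fisher_scores(feats, labels))[::-1][:k]

# Usage: four noise features, of which only feature 2 separates the classes.
rng = np.random.default_rng(1)
labels = np.repeat([0, 1], 50)
feats = rng.standard_normal((100, 4))
feats[labels == 1, 2] += 3.0
print(select_features(feats, labels, k=1))  # [2]
```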

VR system: The VR system simulates and displays a virtual world, provides real-time situational feedback to the user, and responds promptly to control commands from the BCI system.

Communication interface: The communication interface handles real-time communication between the BCI system and the VR system. On the one hand, it transmits the control commands generated by the BCI system to the VR system; on the other hand, it carries the feedback generated by the VR system back to the subject.
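The command direction of the communication interface can be as simple as a stream of small messages from the BCI process to the VR application. The sketch below assumes a JSON-over-UDP format with hypothetical field names (`type`, `command`, `confidence`, `timestamp`); the source does not specify any protocol, and real systems often use dedicated middleware such as Lab Streaming Layer (LSL) instead.

```python
import json
import socket
import time

def encode_command(command: str, confidence: float) -> bytes:
    """Pack a BCI control command into a small JSON datagram (hypothetical format)."""
    msg = {"type": "command", "command": command,
           "confidence": round(confidence, 3), "timestamp": time.time()}
    return json.dumps(msg).encode("utf-8")

def decode_command(data: bytes) -> dict:
    """Unpack a datagram on the VR side, rejecting anything that is not a command."""
    msg = json.loads(data.decode("utf-8"))
    if msg.get("type") != "command":
        raise ValueError("not a command message")
    return msg

# Loopback demo: the 'VR side' listens, the 'BCI side' sends one command.
vr_sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
vr_sock.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
vr_sock.settimeout(1.0)
bci_sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
bci_sock.sendto(encode_command("TURN_LEFT", 0.87), vr_sock.getsockname())
data, _ = vr_sock.recvfrom(4096)
received = decode_command(data)
print(received["command"])  # TURN_LEFT
bci_sock.close()
vr_sock.close()
```

UDP is chosen here only because a late command is usually worse than a lost one in interactive control; a design that must never drop commands would use TCP or a message queue.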

In a BCI-VR system, the BCI serves as the input device and is mainly responsible for issuing commands: through it, users can output commands simply, efficiently, and directly with their brain activity, and these commands are then sent to the VR system. The VR system is mainly responsible for sensory stimulation and feedback: it presents the sensory stimuli the BCI requires, elicits the physiological signals the BCI depends on as quickly as possible, and provides meaningful feedback tasks that help users learn to control the BCI [2].

Figure 3 shows the workflow of the BCI-VR system.

Figure 3. Workflow of the BCI-VR system

3. Applications of BCI-VR systems

BCI-VR applications can currently be divided into three types according to the BCI paradigm used: Motor Imagery (MI), Steady-State Visual Evoked Potentials (SSVEP), and Event-Related Potentials (ERP).

3.1 Applications of MI-based BCI-VR systems

Numerous studies have shown that event-related desynchronization (ERD) and event-related synchronization (ERS) are useful tools for studying EEG activity related to body movement and language function [5]. Pfurtscheller et al. [6] showed in 1999 that during the preparation and execution of a unilateral hand movement, the amplitude of the α (8-12 Hz) and β (13-30 Hz) rhythms in EEG over the contralateral sensorimotor cortex decreases; this decrease in amplitude over activated cortex is called ERD. Meanwhile, the α and β amplitudes over the cortex ipsilateral to the moving hand increase, reflecting a resting (idling) state of that cortex; this increase is called ERS. ERD/ERS thus appears in specific frequency bands over motor and sensory cortex. It also occurs when a movement is only imagined rather than executed, i.e., during motor imagery. The imagined body part can therefore be identified by detecting and extracting the corresponding ERD/ERS features.
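ERD/ERS is commonly quantified with the band-power method associated with Pfurtscheller: band-pass filter the EEG to the band of interest (for example, α at 8-12 Hz), square it and average across trials, and express the power A in an activity window relative to the power R in a reference (rest) window as ERD% = (A - R) / R × 100. Negative values indicate ERD and positive values ERS. The sketch below assumes the trials are already band-pass filtered; the window times and the synthetic signal, whose α amplitude halves during "imagery", are hypothetical.

```python
import numpy as np

def erd_percent(trials, fs, ref_window, act_window):
    """Band-power ERD/ERS for one channel.

    trials: (n_trials, n_samples) EEG, already band-pass filtered to the band of interest.
    ref_window, act_window: (start_s, end_s) in seconds from trial start.
    Returns (A - R) / R * 100; negative means ERD, positive means ERS.
    """
    power = np.mean(np.asarray(trials, dtype=float) ** 2, axis=0)  # average power over trials

    def mean_power(window):
        start, end = (int(round(s * fs)) for s in window)
        return power[start:end].mean()

    r = mean_power(ref_window)
    a = mean_power(act_window)
    return (a - r) / r * 100.0

# Usage: a 10 Hz rhythm whose amplitude halves after 2 s, repeated over 20 trials.
fs = 250
t = np.arange(4 * fs) / fs
amp = np.where(t < 2.0, 1.0, 0.5)
trials = np.tile(amp * np.sin(2 * np.pi * 10 * t), (20, 1))
print(round(erd_percent(trials, fs, (0.0, 2.0), (2.0, 4.0)), 1))  # -75.0
```

Halving the amplitude quarters the power, hence the 75% desynchronization.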

Martini et al. [7] applied motor imagery to rehabilitation training. Patients with a paralyzed left arm imagined grasping with the left hand; the BCI system acquired the relevant EEG, processed and classified it, and passed the result to the VR system, which showed a virtual left arm performing the corresponding action from a first-person perspective, while a wristband delivered tactile feedback. This made the whole control process clear and natural, as shown in Figure 4.

Figure 4. Motor imagery of the affected limb based on a BCI-VR system

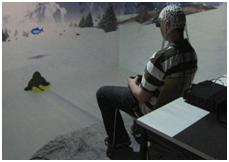

Leeb et al. [8] built a virtual bar scene (Figure 5) in which users felt as if they were inside the bar and could rotate the view to the left or right by imagining left- or right-hand movements. They also enabled users to walk along a virtual street by imagining foot movements. Later, the team designed a multimodal control system [9] that fused a BCI with a game controller to steer a penguin gliding down a snowy mountain in VR: the BCI triggered the penguin's jumps (Figure 6), while the controller set its direction of movement. By combining BCI and controller input to control the VR system, the study enhanced users' sense of immersion and achieved good experimental results.

Figure 5. Motor imagery control of a VR system: penguin motion control

Po T. Wang et al. [10] designed an online motor imagery BCI system with 3D visual feedback. Subjects walked an avatar representing themselves along a straight path in the scene, controlling whether it walked or stopped by imagining walking or standing still. Ten characters stood at intervals along the path; the subject had to bring the avatar to each character in turn, pause briefly to greet it, and then move on. Repeating this task gave the subjects repeated training. The BCI was first calibrated offline: features of foot motor imagery and resting-state EEG were extracted and classified to produce "walk" and "stop" commands. The trained classifier was then used in an online BCI system that incorporated the 3D visual feedback scene. In comparative experiments, the subjects achieved good control, showing that motor imagery BCIs can be used for control in virtual reality scenes.
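The offline-calibration, online-control scheme described above can be sketched with a two-class Fisher linear discriminant (LDA) on log-variance features. The text does not state which features or classifier Wang et al. used, so LDA, the two-channel windows, and the synthetic data (foot imagery assumed to lower power on channel 0, mimicking ERD) are assumptions for illustration.

```python
import numpy as np

class TwoClassLDA:
    """Fisher linear discriminant for two classes (0 = 'stop', 1 = 'walk')."""
    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y)
        m0, m1 = X[y == 0].mean(axis=0), X[y == 1].mean(axis=0)
        # Pooled within-class scatter, lightly regularized for numerical stability.
        S = np.atleast_2d(np.cov(X[y == 0], rowvar=False) + np.cov(X[y == 1], rowvar=False))
        S = S + 1e-6 * np.eye(X.shape[1])
        self.w = np.linalg.solve(S, m1 - m0)
        self.b = -(self.w @ (m0 + m1)) / 2.0  # threshold halfway between class means
        return self

    def predict(self, x):
        return int(np.asarray(x, dtype=float) @ self.w + self.b > 0)

def log_var(window):
    """Feature: log-variance of each channel of a (channels, samples) window."""
    return np.log(np.var(window, axis=1))

rng = np.random.default_rng(2)

def fake_window(label):
    # Hypothetical data: 'walk' imagery halves the amplitude on channel 0.
    scale = np.array([[0.5], [1.0]]) if label == 1 else np.array([[1.0], [1.0]])
    return scale * rng.standard_normal((2, 250))

# Offline phase: fit the classifier on labelled calibration windows.
y = np.repeat([0, 1], 40)
X = np.array([log_var(fake_window(lbl)) for lbl in y])
lda = TwoClassLDA().fit(X, y)

# Online phase: each incoming window becomes a command for the avatar.
COMMANDS = {0: "STOP", 1: "STEP"}
stream = [fake_window(lbl) for lbl in (1, 1, 0)]
print([COMMANDS[lda.predict(log_var(w))] for w in stream])  # ['STEP', 'STEP', 'STOP']
```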

Motor imagery currently supports only a small number of distinguishable commands, so some researchers have sought to increase the number of commands, for example by combining motor imagery with other signals. Scherer et al. [11] designed a BCI-VR system with three independent control commands: users imagined left-hand, right-hand, or foot movement to turn left, turn right, or move forward.
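Turning a three-class motor imagery classifier into navigation commands can be sketched as a lookup plus a confidence check. The class names, the 0.6 threshold, and returning `None` ("issue no command") when the classifier is unsure are assumptions for illustration, not the design reported in [11].

```python
from typing import Dict, Optional

# Hypothetical mapping of imagined movements to navigation commands.
MI_TO_COMMAND = {"left_hand": "TURN_LEFT", "right_hand": "TURN_RIGHT", "foot": "MOVE_FORWARD"}

def to_command(probs: Dict[str, float], threshold: float = 0.6) -> Optional[str]:
    """Return the command for the most probable class, or None when the
    classifier's top probability is below the threshold (no action)."""
    label, p = max(probs.items(), key=lambda kv: kv[1])
    return MI_TO_COMMAND[label] if p >= threshold else None

print(to_command({"left_hand": 0.1, "right_hand": 0.2, "foot": 0.7}))    # MOVE_FORWARD
print(to_command({"left_hand": 0.4, "right_hand": 0.35, "foot": 0.25}))  # None
```

The threshold trades responsiveness for fewer unintended movements; a higher value makes the avatar move less often but more reliably.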

These studies show that BCI-VR systems can support motor imagery training, feedback, and control. Compared with traditional feedback such as a simple colored bar, VR provides more effective visual feedback and a strong sense of immersion, which substantially improves the efficiency of online control with motor imagery BCIs.

3.2 Applications of SSVEP-based BCI-VR systems

When the human brain receives a visual stimulus flickering at a fixed frequency (generally above 6 Hz), the visual cortex produces EEG activity at the same frequency, which can be recorded from electrodes over the occipital region. Compared with BCIs based on other EEG signals, SSVEP-based BCIs usually offer a higher information transfer rate and require less training, and SSVEP is one of the most commonly used EEG signals in BCI systems.
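The information transfer efficiency of a BCI is commonly quantified as the information transfer rate (ITR), usually computed with the Wolpaw formula: for N targets, accuracy P, and T seconds per selection, each selection carries B = log2 N + P log2 P + (1 - P) log2((1 - P)/(N - 1)) bits, and ITR = B × 60 / T bits/min. Returning 0 at or below chance accuracy is a convention assumed in this sketch; the example numbers are hypothetical.

```python
import math

def wolpaw_itr(n_targets: int, accuracy: float, seconds_per_selection: float) -> float:
    """Information transfer rate in bits/min using the Wolpaw definition."""
    n, p = n_targets, accuracy
    if p <= 1.0 / n:
        return 0.0  # at or below chance: treat as no information (convention)
    bits = math.log2(n) + p * math.log2(p)
    if p < 1.0:  # the error term vanishes (and log2(0) is undefined) at p = 1
        bits += (1 - p) * math.log2((1 - p) / (n - 1))
    return bits * 60.0 / seconds_per_selection

print(wolpaw_itr(4, 1.0, 2.0))            # 60.0
print(round(wolpaw_itr(4, 0.9, 2.0), 1))  # 41.2
```

The formula rewards both more targets and faster selections, which is why multi-frequency SSVEP paradigms tend to report high ITRs.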

Lalor et al. [12] used SSVEP to control a character in a 3D game walking along a rope from one platform to another. To keep the character balanced, the user had to issue commands through the BCI: squares flickering at different frequencies on the left and right sides of the screen elicited SSVEPs of different frequencies. By gazing at the left or right flickering square, the user produced a left or right command, which the system identified from the SSVEP in order to correct the character's balance. Touyama [13] used SSVEP to control rapid turns in a CAVE virtual environment. In experiments, subjects successfully navigated a dark, curved cave by changing their view orientation (to the left or right) through the SSVEP/CAVE interface.
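Deciding which flickering target the user is looking at, as with the left and right squares above, is often done with canonical correlation analysis (CCA) between the multichannel occipital EEG and sine/cosine reference signals at each candidate stimulation frequency. This is a widely used detection method shown here as an assumption, not necessarily the method used in [12] or [13]; the sampling rate, candidate frequencies, and synthetic EEG are hypothetical.

```python
import numpy as np

def max_canonical_corr(X, Y):
    """Largest canonical correlation between the columns of X and Y (samples x variables)."""
    Qx, _ = np.linalg.qr(X - X.mean(axis=0))
    Qy, _ = np.linalg.qr(Y - Y.mean(axis=0))
    # Singular values of Qx^T Qy are the canonical correlations, in descending order.
    return float(np.linalg.svd(Qx.T @ Qy, compute_uv=False)[0])

def reference_signals(freq, fs, n_samples, n_harmonics=2):
    """Sine/cosine references at the stimulus frequency and its harmonics."""
    t = np.arange(n_samples) / fs
    cols = []
    for h in range(1, n_harmonics + 1):
        cols += [np.sin(2 * np.pi * h * freq * t), np.cos(2 * np.pi * h * freq * t)]
    return np.column_stack(cols)

def detect_ssvep(eeg, fs, candidate_freqs):
    """eeg: (n_samples, n_channels). Returns the best-matching frequency and all scores."""
    scores = [max_canonical_corr(eeg, reference_signals(f, fs, eeg.shape[0]))
              for f in candidate_freqs]
    return candidate_freqs[int(np.argmax(scores))], scores

# Usage: three noisy channels carrying a 12 Hz response with different phases.
fs = 250
t = np.arange(2 * fs) / fs
rng = np.random.default_rng(3)
eeg = np.column_stack([np.sin(2 * np.pi * 12 * t + ph) + rng.standard_normal(t.size)
                       for ph in (0.0, 0.5, 1.0)])
freq, scores = detect_ssvep(eeg, fs, [8.0, 10.0, 12.0, 15.0])
print(freq)  # 12.0
```

Each flickering square would be assigned one candidate frequency, so the detected frequency maps directly to a left or right command.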

These studies show that SSVEP-based BCI-VR systems can efficiently control objects in a virtual environment, demonstrating the effectiveness of this approach. During control, however, the subject has to keep staring at a screen that displays the visual stimuli, which does not match practical application scenarios and makes everyday use inconvenient. To solve this problem, researchers have proposed embedding the visual stimuli in the virtual scene itself, so that subjects only need to attend to objects in the scene, which greatly improves user experience and immersion.

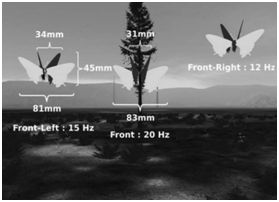

Legeny et al. [14] used butterflies for navigation in a virtual forest, displaying the flickering stimuli needed to elicit SSVEPs on the butterflies' wings (Figure 7). Three butterflies are displayed on the screen, fluttering up and down in front of the user; by focusing on the left, middle, or right butterfly, the user moves left, forward, or right. The butterflies' antennae also provide feedback to the user: the wider the spacing between the antennae, the more likely it is that the classifier will select the direction the user is attending to. These SSVEP stimuli are integrated more naturally into the virtual environment, and experiments showed that this approach increased users' subjective preference.

Figure 7. SSVEP-controlled VR system: butterfly motion control
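The antenna feedback in the butterfly design can be thought of as a mapping from classifier confidence to a visual parameter. The sketch below assumes nonnegative per-butterfly scores (for example, SSVEP detection scores) and a linear mapping to antenna spacing in arbitrary units; the exact mapping used by Legeny et al. [14] is not given in the text, so the function and its parameters are hypothetical.

```python
from typing import Dict

def antenna_spacing(scores: Dict[str, float], min_gap: float = 0.2,
                    max_gap: float = 1.0) -> Dict[str, float]:
    """Map each butterfly's share of the total classifier score to an antenna
    spacing between min_gap and max_gap (arbitrary units)."""
    total = sum(scores.values())
    if total <= 0:
        return {k: min_gap for k in scores}  # no evidence yet: all antennae closed
    return {k: min_gap + (max_gap - min_gap) * s / total for k, s in scores.items()}

spacing = antenna_spacing({"left": 0.1, "middle": 0.6, "right": 0.3})
print(max(spacing, key=spacing.get))  # middle
```

Because the spacing grows with the attended butterfly's share of the evidence, the user sees which direction the classifier is leaning toward before a selection is made.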

Faller et al. [15] designed a game in a desktop virtual environment in which the user steers a walking avatar with three flickering stimuli of different frequencies. The flickering squares always follow the avatar as it moves and are placed at its left hand, right hand, and head; the user controls the avatar's direction by gazing at one of the stimuli. In the experiment, five of the seven participants completed the task successfully.

These studies show that BCI-VR systems can provide richer, more realistic, and more intuitive interaction for SSVEP-based BCIs, enabling efficient control and improving their practicality.

3.3 Applications of ERP-based BCI-VR systems

The ERP component most commonly used in BCIs is the P300. In a P300-based BCI, the user attends to one of several stimuli that are presented in random order, each corresponding to a particular command. Because the attended (target) stimulus occurs rarely and is task-relevant, a positive deflection, the P300, appears in the user's EEG about 300 ms after its onset.
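A simple way to find the attended stimulus is to average the EEG epochs time-locked to each stimulus, so that background EEG cancels out while the P300 remains, and pick the stimulus with the largest mean amplitude in a window around 300 ms. The 250-450 ms window, the single channel, the device names, and the synthetic data are assumptions for this sketch; practical P300 systems usually use trained classifiers (for example, LDA) over several channels.

```python
import numpy as np

def p300_target(epochs_by_stimulus, fs, window=(0.25, 0.45)):
    """Pick the stimulus whose averaged epoch is most positive in the P300 window.

    epochs_by_stimulus: dict stimulus id -> array (n_repetitions, n_samples) of
    single-channel EEG epochs time-locked to that stimulus (t = 0 at onset).
    """
    start, end = int(window[0] * fs), int(window[1] * fs)
    # Mean over repetitions and window samples = mean amplitude of the averaged ERP.
    scores = {stim: float(np.mean(np.asarray(ep)[:, start:end]))
              for stim, ep in epochs_by_stimulus.items()}
    return max(scores, key=scores.get), scores

# Usage: four devices, only the attended 'lamp' epochs contain a P300-like bump.
fs = 250
t = np.arange(int(0.8 * fs)) / fs
rng = np.random.default_rng(4)
p300 = 8.0 * np.exp(-((t - 0.3) ** 2) / (2 * 0.05 ** 2))  # idealized bump, microvolts
epochs = {dev: 10.0 * rng.standard_normal((15, t.size)) + (p300 if dev == "lamp" else 0.0)
          for dev in ("TV", "lamp", "door", "phone")}
choice, _ = p300_target(epochs, fs)
print(choice)  # lamp
```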

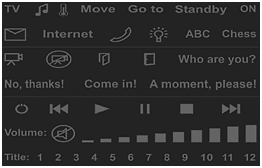

In 2000, Bayliss et al. combined a P300 BCI with virtual reality for the first time, designing a scene in which subjects drove in a VR environment. When a subject encountered a red light, a P300 was elicited, and detecting this signal allowed the car to be stopped. In 2003, the same group [16] used the P300 for interaction in a virtual smart apartment viewed through a head-mounted display (HMD). Users controlled household devices (such as a TV or lamp) through 3D spheres that appeared randomly on the devices; by counting how many times a sphere appeared on the desired device, users could switch and control it.

Groenegress et al. [17] implemented control of a virtual smart home with a P300 BCI-VR system (Figure 8). VR was used to simulate the smart home environment, including a living room, kitchen, bedroom, and bathroom, each with several controllable devices such as a TV, telephone, lamp, or door. The control commands were arranged on a menu whose device icons flashed continuously, and the user selected a device to control by attending to its icon on the menu. In this system, task accuracy reached 87% to 100%, further demonstrating the effectiveness of ERP-based BCI-VR systems.

Figure 8. Smart home control based on a P300 BCI-VR system

4. Summary and outlook

Combining BCI and VR lets the two technologies complement each other. On the one hand, VR can serve as the feedback tool of a BCI system, providing more realistic, richer, more engaging, and more motivating situational feedback. On the other hand, a BCI can serve as an input device for VR, providing more direct and faster input and strengthening the immersive experience. BCI-VR systems have broad application prospects in medical rehabilitation, gaming and entertainment, smart homes, and other fields, but they still face several difficulties in practice:

(1) As the input device of a VR system, the BCI must provide accurate, stable, efficient, direct, and flexible control commands in order to improve user immersion. To this end, a BCI needs to: (a) provide diverse input commands for VR; (b) accurately recognize control commands from the user's intentions; and (c) allow commands to be issued at will, anytime and anywhere. Current BCI technology cannot yet meet these requirements. First, EEG signals are very weak and susceptible to interference, and their quality cannot be guaranteed in complex environments, which makes subsequent processing and analysis difficult. Second, the categories of intention that can be recognized from EEG are currently limited; motor imagery, for example, is largely restricted to distinguishing left- from right-hand imagery, which cannot meet the need for diverse commands.

(2) The design and presentation of virtual reality scenes also face many challenges [2]: (a) providing users with realistic and rich VR feedback to ensure the authenticity of BCI control; (b) integrating the various stimuli the BCI needs to elicit mental activity and EEG responses closely and without interruption into the virtual environment, while keeping the scenes as authentic and credible as possible, so that users maintain a deep sense of immersion that is not interrupted or broken.

In short, although combining virtual reality and brain-computer interfaces still faces many practical difficulties, with the continued development of computing and information technology many of the current technical bottlenecks are expected to be overcome, BCI-VR systems will continue to improve, and their application prospects should become increasingly bright.

References

[1] O'Doherty JE, Lebedev MA, Ifft PJ, Zhuang KZ, Shokur S, Bleuler H, Nicolelis MA. Active tactile exploration using a brain-machine-brain interface. Nature. 2011, 479(7372): 228-231.

[2] Kong Liwen, Xue Zhaojun, Chen Long, He Feng, Qi Hongzhi, Wan Baikun, Ming Dong. Research progress of brain-computer interface based on virtual reality environment. Journal of Electronic Measurement and Instrumentation. 2015, 29(3): 317-327.

[3] Bockbrader MA, Francisco G, Lee R, Olson J, Solinsky R, Boninger ML. Brain computer interfaces in rehabilitation medicine. PM R. 2018, 10(9 Suppl 2): S233-S243.

[4] Ma Zhao, Wang Yijun, Gao Xiaorong, Gao Shangkai. Virtual reality rehabilitation training platform based on brain-computer interface technology. Chinese Journal of Biomedical Engineering. 2007, 26(3): 373-378.

[5] Zhou Sijie, Bai Hongmin. Research progress of event-related desynchronization and synchronization methods in EEG signal analysis. Chinese Journal of Minimally Invasive Neurosurgery. 2018, 3(20): 141-143.

[6] Pfurtscheller G, Andrew C. Event-related changes of band power and coherence: methodology and interpretation. J Clin Neurophysiol. 1999, 16(6): 512-519.

[7] Martini M, Perez-Marcos D, Sanchez-Vives MV. Modulation of pain threshold by virtual body ownership. Eur J Pain. 2014, 18: 1040-1048.

[8] Leeb R, Keinrath C, Friedman D, Guger C, Scherer R, Neuper C, Garau M, Antley A, Steed A, Slater M. Walking by thinking: the brainwaves are crucial, not the muscles! Presence: Teleoperators and Virtual Environments. 2006, 15(5): 500-514.

[9] Leeb R, Lancelle M, Kaiser V, et al. Thinking Penguin: multimodal brain-computer interface control of a VR game. IEEE Trans Comput Intell AI Games. 2013, 5: 117-128.

[10] Wang PT, King CE, Chui LA, et al. BCI controlled walking simulator for a BCI driven FES device. Proceedings of the RESNA Annual Conference. 2010.

[11] Scherer R, Lee F, Schlögl A, et al. Toward self-paced brain-computer communication: navigation through virtual worlds. IEEE Transactions on Biomedical Engineering. 2008, 55(2): 675-682.

[12] Lalor E, Kelly SP, Finucane C, Burke R. Steady-state VEP-based brain-computer interface control in an immersive 3D gaming environment. EURASIP Journal on Advances in Signal Processing. 2005, 3156-3164.

[13] Touyama H. Brain-CAVE interface based on steady-state visual evoked potential. Advances in Human Computer Interaction. 2008, 437-450.

[14] Legeny J, Abad RV, Lécuyer A. Navigating in virtual worlds using a self-paced SSVEP-based brain-computer interface with integrated stimulation and real-time feedback. Presence: Teleoperators and Virtual Environments. 2011, 20: 529-544.

[15] Faller J, Müller-Putz G, Schmalstieg D, Pfurtscheller G. An application framework for controlling an avatar in a desktop-based virtual environment via a software SSVEP brain-computer interface. Presence: Teleoperators and Virtual Environments. 2010, 19(1): 25-34.

[16] Bayliss JD. Use of the evoked potential P3 component for control in a virtual apartment. IEEE Trans Neural Syst Rehabil Eng. 2003, 11: 113-116.

[17] Groenegress C, Holzner C, Guger C, et al. Effects of P300-based BCI use on reported presence in a virtual environment. Presence. 2010, 19: 1-11.