Light field display technology - Dr. Du Junfan

2020-12-16

Summary

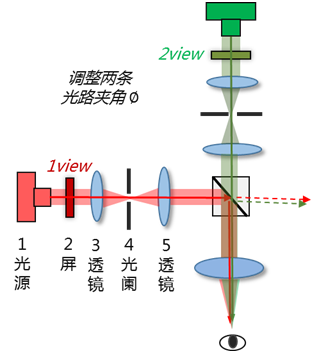

Glasses-based stereoscopic display technology has been commercialized but has never won broad public acceptance. Naked-eye (glasses-free) stereoscopic display technology has kept improving its 3D effect on the principle of binocular parallax, but its viewing zones are discrete, its viewing angle is narrow, and the vergence-accommodation conflict (VAC) of human vision has never been resolved. Both technologies remain far from satisfactory. Even as screen resolution increases, current stereoscopic display technology cannot meet the public's demand for reproducing the real world. Light field display technology has therefore been proposed. Its goal is to form real image points in space: it is a display technology that provides the observer with a light field and forms a natural, comfortable 3D scene while eliminating possible conflicts between visual cues. It not only provides multiple views, giving both eyes parallax images that produce correct retinal blur for each viewing position, but also provides multiple depths, so that the hardware places the displayed content at the corresponding position in 3D space. This kind of technology is called true light field. Its effect is not yet ideal, and productization is still a long way off. For now, Super Multi View (SMV) light field technology serves as an intermediate transition to meet the needs of the naked-eye 3D market.

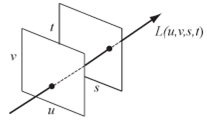

Ever since light field display demos were unveiled at major exhibitions, many consumers have been unsure what a light field display is and how it differs from a naked-eye stereoscopic display. "Light field" refers to the distribution of a physical quantity of light in space. It is the sum of all light information in space, including the position and direction of the light, its intensity, its color, and its flicker. The concept was first clearly proposed by A. Gershun in 1939 and was later refined by E. H. Adelson and J. R. Bergen, who gave it the form of the plenoptic function. Simply put, the light field describes the intensity of light passing through any point in space in any direction. The plenoptic function that completely describes the light field is 7-dimensional: the position of any point (x, y, z), any direction (θ, Φ in polar coordinates), wavelength (λ), and time (t). In a display, wavelength (λ) and time (t) are represented by the RGB pixels and the refresh frequency, so the light field reduces from a 7-dimensional to a 5-dimensional function (x, y, z, θ, Φ). Currently, a ray is represented by the two points at which it crosses two planes, which determines both its direction and its position. This reduces the function from 5 dimensions to 4 (u, v, s, t, where t here is a plane coordinate rather than time), so the amount of data is simplified from 7 dimensions to 4. Light field capture needs to record all of this light information, and light field display needs to reproduce it completely and accurately.
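
As a rough illustration of the two-plane parameterization described above, the sketch below converts a 4D sample (u, v, s, t) back into a ray position and direction. The plane placement (the (u, v) plane at z = 0 and the (s, t) plane at z = `plane_gap`) and the function name `two_plane_to_ray` are assumptions made for this example; the article does not specify them.

```python
import math

def two_plane_to_ray(u, v, s, t, plane_gap=1.0):
    """Turn a two-plane sample (u, v, s, t) into a ray.

    Assumption: the (u, v) plane lies at z = 0 and the (s, t) plane
    at z = plane_gap; both planes are parallel to the x-y plane.
    Returns the ray origin, its unit direction, and (theta, phi).
    """
    dx, dy, dz = s - u, t - v, plane_gap
    length = math.sqrt(dx * dx + dy * dy + dz * dz)
    direction = (dx / length, dy / length, dz / length)
    # Polar angle measured from the z axis (clamped against rounding).
    theta = math.acos(max(-1.0, min(1.0, direction[2])))
    # Azimuth in the x-y plane.
    phi = math.atan2(dy, dx)
    return (u, v, 0.0), direction, (theta, phi)
```

For example, `two_plane_to_ray(0, 0, 1, 0)` describes a ray that leaves the origin at 45° to the plane normal, which shows how four numbers are enough to recover both the position and the direction of a ray.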

Figure 1. Four-dimensional light field

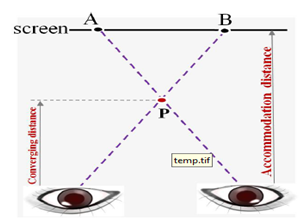

The stereoscopic display technologies currently on the market are all based on the principle of binocular parallax, so they suffer from the vergence-accommodation conflict (VAC), which causes some degree of dizziness. The goal of light field display is to provide the observer with a light field and form a natural, comfortable 3D scene while eliminating possible conflicts between visual cues. Light field display has two basic elements. One is to provide multiple views, giving both eyes parallax images that produce correct retinal blur for each viewing position. The other is to provide multiple depths, so that the displayed content is placed at the corresponding position in 3D space.
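
To make the conflict concrete, the following minimal sketch compares where the eyes converge with where they focus. Expressing the mismatch in diopters is a common convention in the vision literature rather than something this article defines, and the function names and the 63 mm interpupillary distance are assumptions for illustration.

```python
import math

def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two lines of sight when fixating at distance_m.

    ipd_m is an assumed interpupillary distance (about 63 mm).
    """
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))

def vac_diopters(screen_distance_m, object_distance_m):
    """Mismatch between the focus distance (the screen) and the
    convergence distance (the displayed object), in diopters (1/m)."""
    return abs(1.0 / screen_distance_m - 1.0 / object_distance_m)
```

With a screen at 0.5 m and an object rendered at 1.0 m, `vac_diopters(0.5, 1.0)` gives a mismatch of 1.0 diopter. When the object is rendered on the screen plane the mismatch is zero, which is the situation a true light field aims to reproduce at every depth.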

Binocular parallax 3D has a vergence-accommodation conflict

True light field 3D has no vergence-accommodation conflict

Figure 2. The difference in principle between binocular parallax 3D and true light field 3D

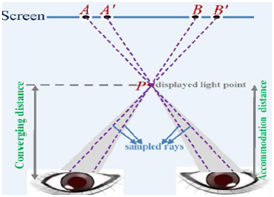

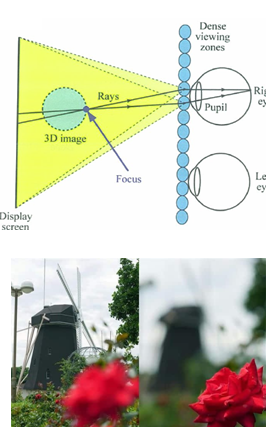

There are currently two main types of light field display technology: true light field and Super Multi View (SMV) light field.

A true light field forms real image points at different depths in space, and the human eye sees the image formed by those real image points. Because this matches the physiological cues the eye relies on when viewing three-dimensional objects, it does not produce VAC: when the eye focuses on the foreground the background is blurred, and when it focuses on the background the foreground is blurred, just as when viewing the real world. This requires at least 2 viewpoints to enter the pupil. In addition, the sub-pixel light is collimated, so the 3D viewing angle is very narrow.

Super Multi View (SMV) light field is an intermediate state between binocular parallax and true light field technology. To widen the 3D viewing angle, about 30 viewpoints in total are designed, and each viewpoint image carries different parallax information. While moving, viewers see the object from different directions, just as they would when moving around an object in the real world. A single eye receives several viewpoints at the same time, currently about six or seven. Because the parallax between adjacent viewpoint images is finely subdivided, viewing while moving is smooth, without jumps. This subdivision also weakens the vergence-accommodation conflict (VAC). Although information at different depths can be seen, the principle is still binocular parallax; the parallax has merely been subdivided and weakened.

Figure 3. True light field display effect (real image points)

Figure 4. Super Multi View light field effect (multiple directions)

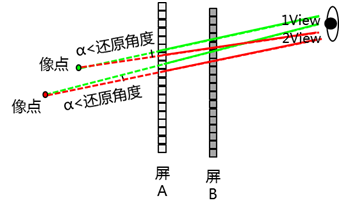

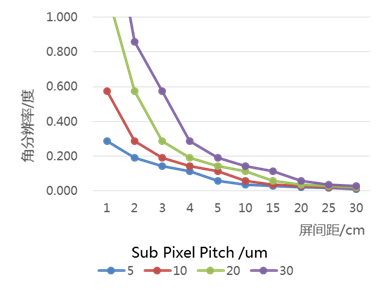

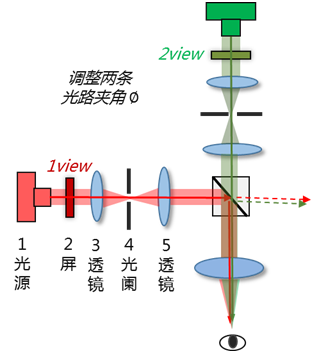

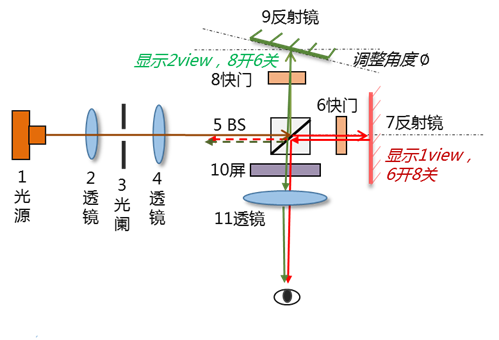

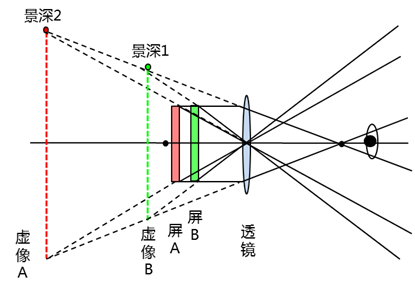

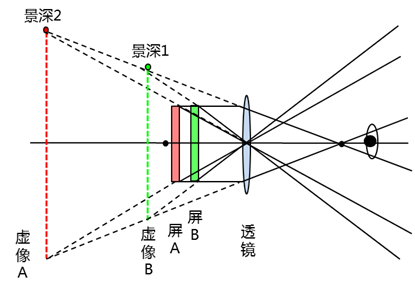

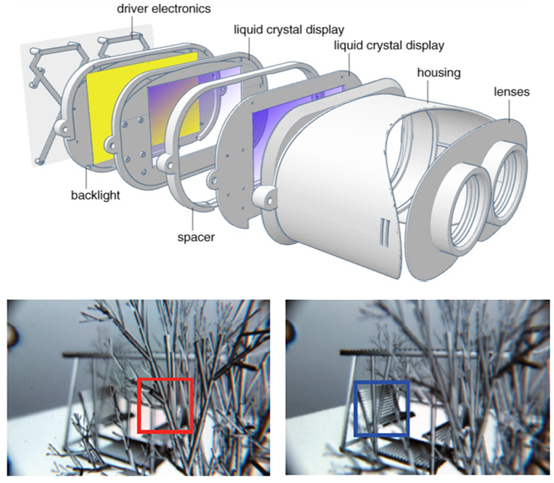

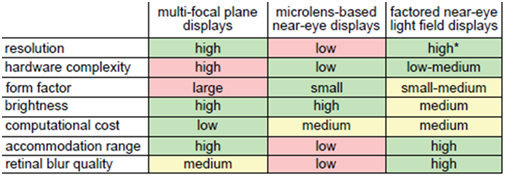

There are two ways to realize true light field display technology: one restores the ray angles, and the other restores the object points.

Typical structures for the angle restoration method include laminated screens and microlens arrays. The sub-pixel light is collimated, at least two viewpoints enter each eye, and the rays of these two viewpoints entering the pupil must not interfere with each other. Image points can then be traced at different depth positions in space, and the ray angles of the virtual object points are fully restored. The imaging quality is limited by diffraction at the pixel opening, by how well the light is collimated, and by the number of monocular viewpoints. As shown in Figure 6, the larger the screen spacing and the smaller the sub-pixels, the finer the angular resolution that can be restored, the more fully the light field is restored, the more monocular viewpoints there are, and the greater the depth of field. The laminated screen structure has high resolution but low display brightness and requires a complex light field rendering algorithm; the microlens array structure has low resolution, high display brightness, and large crosstalk.

The true light field effect achieved by the angle restoration method can be verified by two experiments. In the experiment in Figure 7, screen 1 displays the viewpoint-1 image and screen 2 displays the viewpoint-2 image; the light from the two images enters the eye at a small angle to each other, and the true light field effect can be seen. In the experiment in Figure 8, the mirror angle is adjusted while a single screen displays the viewpoint-1 and viewpoint-2 images by time-sharing; thanks to persistence of vision this does not reduce the resolution, and it likewise verifies that the angle restoration method achieves a true light field display.

The second method of true light field display is the object point restoration method, which uses multi-layer screens, a rotating scattering screen (scanning type), or a projector array. The latter two have bulky mechanical structures, while multi-layer screens are relatively thin and light and therefore closer to a product form. This method restores the virtual object points themselves, and the depth of field is limited by the number of object points restored. The light field VR system researched at Stanford University uses a two-layer LCD screen plus lens structure. When the eye focuses on the foreground the background blurs, and when it focuses on the background the foreground blurs, which indicates that a true light field display has been realized.
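
The dependence of angular resolution on screen spacing and sub-pixel size in the angle restoration method can be sketched with simple geometry. The model below is an assumption made for illustration, not the author's derivation: it treats the finest addressable angle of a two-layer screen as the angle one sub-pixel subtends across the gap, and it estimates diffraction with the textbook single-slit relation sin θ = λ / a. All names and the default 550 nm wavelength are hypothetical.

```python
import math

def angular_step_deg(subpixel_pitch_mm, screen_gap_mm):
    """Smallest ray-angle step a two-layer (laminated) screen can address:
    the angle subtended by one sub-pixel across the gap between layers."""
    return math.degrees(math.atan(subpixel_pitch_mm / screen_gap_mm))

def diffraction_spread_deg(aperture_mm, wavelength_nm=550.0):
    """Half-angle to the first diffraction minimum of a slit-like pixel
    opening, from sin(theta) = wavelength / aperture."""
    wavelength_mm = wavelength_nm * 1e-6
    return math.degrees(math.asin(min(1.0, wavelength_mm / aperture_mm)))
```

Under this simplified model, a larger gap or a smaller sub-pixel gives a finer angular step, while a smaller pixel opening spreads the light more by diffraction. That trade-off is one way to read the statement that the imaging quality is limited by diffraction at the pixel opening.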

Figure 5. Principle diagram of the angle restoration method

Figure 6. Factors affecting the angular resolution of the laminated screen structure in the angle restoration method

Figure 7. Verification experiment 1 of the angle restoration method

Figure 8. Verification experiment 2 of the angle restoration method

Figure 9. Schematic diagram of the principle of the object point restoration method

Figure 10. True light field VR realized at Stanford University with the object point restoration method

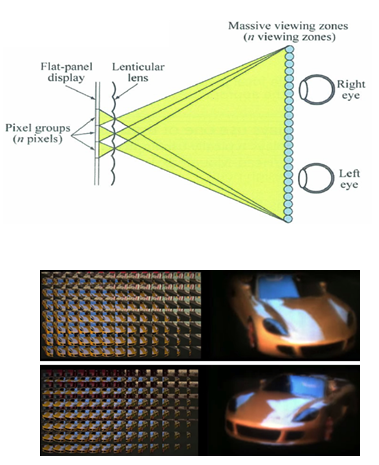

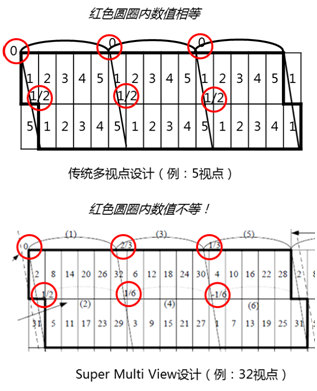

Super Multi View (SMV) light field technology still uses a lenticular structure, but the design interleaves additional viewpoints among the original ones: the grating is slanted, and the lens pitch is a non-integer multiple of the sub-pixel width. As a result, the distance from the left edge of the first sub-pixel in each lens unit to the 3D grating differs from unit to unit, so sub-pixels at the same position in different lens units deflect light to different positions. Compare the values in the red circles in the figure. In the traditional multi-view design they are 0, 0, 0, 1/2, 1/2, 1/2; the values repeat, so the light from the viewpoint-1 sub-pixels of each lens unit overlaps completely. In the new multi-view design they are 0, 2/3, 1/3, 1/2, 1/6, -1/6; the values differ, so the light from the sub-pixels at the starting position of each lens unit cannot overlap completely, which produces additional viewpoints. In this way the number of viewpoints can be expanded in either the horizontal or the vertical direction. Although the human eye is always located in the crosstalk zone of adjacent viewpoints, the parallax between adjacent viewpoints is small and finely subdivided, so the effect is minor; the farther apart two viewpoints are, the greater the effect. This method has the advantages of smooth parallax and little resolution loss, but the disadvantage of large crosstalk. The technology suits TVs and other large-format products that require wide viewing angles and long viewing distances.
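
The effect of a slanted grating with a non-integer lens pitch can be illustrated by counting how many distinct positions a sub-pixel edge can take inside a lens unit. This is a generic sketch under stated assumptions, not the design shown in the figure: the function names, the pitch and slant values, and the idea of equating each distinct phase with one viewpoint are all chosen for illustration.

```python
from fractions import Fraction

def subpixel_phase(col, row, lens_pitch, slant):
    """Position of a sub-pixel's left edge inside its lens unit, as a
    fraction of the lens pitch (0 <= phase < 1).

    lens_pitch: lens pitch in sub-pixel widths (may be non-integer).
    slant: horizontal shift of the lens per pixel row, in sub-pixel widths.
    """
    return ((Fraction(col) - row * slant) % lens_pitch) / lens_pitch

def count_viewpoints(lens_pitch, slant, cols=60, rows=6):
    """Count distinct phases over a patch of the panel. Each distinct
    phase is deflected in a different direction, so it is treated here
    as a separate viewpoint."""
    return len({subpixel_phase(c, r, lens_pitch, slant)
                for r in range(rows) for c in range(cols)})
```

With these hypothetical values, an unslanted lens with an integer pitch of 4 sub-pixels gives `count_viewpoints(Fraction(4), Fraction(0)) == 4`, while a pitch of 9/2 sub-pixels with a slant of 1/3 sub-pixel per row gives `count_viewpoints(Fraction(9, 2), Fraction(1, 3)) == 27`. Exact fractions are used so that phases that should coincide are not split by floating-point rounding.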

Figure 11. Super Multi View light field design features

Figure 12. Comparison of light field VR technologies

From the standpoint of productization, true light field display technology faces several limits. Restoring object points with multi-layer screens is limited by the number of screen layers, and the angle restoration method achieves a higher degree of light field restoration. Within the latter, the microlens array structure is currently the easiest way to achieve a light field effect, but a single lens covers as many as several hundred sub-pixels, so resolution is greatly reduced. Because the light entering the pupil must be collimated, the 3D eye box is small and the range within which the viewer can move and still see the stereo effect is very narrow, so the technology cannot yet be commercialized. Against this technical background, Super Multi View (SMV) light field technology is a compromise: it delivers multi-directional information through multiple viewpoints, and although it cannot eliminate the vergence-accommodation conflict (VAC), it weakens it. It meets the market's demand for naked-eye 3D in advertising displays and exhibitions.

References

[1] N. Balram, "Light field imaging and display systems", Display Week, 2017

[2] N. Bedard, T. Shope, A. Hoberman, M. A. Haralam, N. Shaikh, J. Kovacevic, N. Balram, I. Tosic, "Light field otoscope design for 3D in vivo imaging of middle ear", Biomed. Opt. Express, Jan. 2017

[3] N. Balram, I. Tosic, H. Binnamangalam, "Digital health in the age of the infinite network", Journal APSIPA, 2016

[4] L. Meng, K. Berkner, "Parallax rectification for spectrally-coded plenoptic cameras", IEEE-ICIP, 2015

[5] N. Bedard, I. Tosic, L. Meng, A. Hoberman, J. Kovacevic, K. Berkner, "In vivo ear imaging with a light field otoscope", Bio-Optics: Design and Application, April 2015

[6] K. Akeley, "Light-field imaging approaches commercial viability", Information Display 6/15, Nov./Dec. 2015

[7] I. Tosic, K. Berkner, "3D Keypoint Detection by Light Field Scale-Depth Space Analysis", ICIP, October 2014 (Best Paper Award)

[8] N. Bedard, I. Tosic, L. Meng, K. Berkner, "Light field Otoscope", OSA Imaging and Applied Optics, July 2014

[9] J. Park, I. Tosic, K. Berkner, "System identification using random calibration patterns", ICIP, October 2014

[10] M. S. Banks, W. W. Sprague, J. Schmoll, J. A. Q. Parnell, G. D. Love, "Why do animal eyes have pupils of different shapes", Sci. Adv., August 2015

[11] S. Wanner, S. Meister, B. Goldluecke, "Datasets and benchmarks for densely sampled 4D light fields", Vision, Modeling & Visualization, The Eurographics Association, 2013

[12] R. T. Held, E. Cooper, J. F. O'Brien, M. S. Banks, "Using blur to affect perceived distance and size", ACM Trans. Graph. 29, 2, March 2010

[13] D. Hoffman, A. Girshick, K. Akeley, M. S. Banks, "Vergence-accommodation conflicts hinder visual performance and cause visual fatigue", Journal of Vision 8(3):33, 2008

[14] M. S. Banks, et al., "Conflicting focus cues in stereoscopic displays", Information Display, July 2008

[15] M. Levoy, P. Hanrahan, "Light field rendering", SIGGRAPH 1996

[16] E. H. Adelson, J. Y. A. Wang, "Single lens stereo with a plenoptic camera", IEEE Trans. PAMI, Feb. 1992

[17] E. H. Adelson, J. R. Bergen, "The plenoptic function and the elements of early vision", Computational Models of Visual Proc., MIT Press, 1991

[18] F. C. Huang, K. Chen, G. Wetzstein, "The light field stereoscope: immersive computer graphics via factored near-eye light field displays with focus cues", SIGGRAPH 2015

[19] G. Wetzstein, "Why people should care about light-field displays", Information Display 2/15

[20] N. Balram, W. Wu, K. Berkner, I. Tosic, "Mobile information gateway - enabling true mobility", The 14th International Meeting on Information Display (IMID 2014 DIGEST), Aug. 2014. Invited paper

[21] N. Balram, "The next wave of 3D - light field displays", Guest Editorial, Information Display, Nov/Dec 2014 issue, Vol. 30, Number 6

[22] X. Liu, H. Li, "The progress of light field 3-D displays", Information Display, Nov/Dec 2014 issue, Vol. 30, Number 6. Invited paper

[23] W. Wu, K. Berkner, I. Tosic, N. Balram, "Personal near-eye light field display", Information Display, Nov/Dec 2014 issue, Vol. 30, Number 6. Invited paper

[24] W. Wu, N. Balram, I. Tosic, K. Berkner, "System design considerations for personal light field displays for the mobile information gateway", International Workshop on Display (IDW), Dec. 2014. Invited paper

[25] X. Hu, H. Hua, "Design and assessment of a depth-fused multi-focal plane display prototype", J. of Display Tech., 2014

[26] H. Hua, B. Javidi, "A 3D integral imaging optical see-through head-mounted display", Optics Express, 2014

[27] F. C. Huang, G. Wetzstein, B. Barsky, R. Raskar, "Eyeglasses-free display: towards correcting visual aberrations with computational light field displays", SIGGRAPH 2014

[28] G. Wetzstein, D. Lanman, M. Hirsch, R. Raskar, "Tensor displays: compressive light field synthesis using multi-layer displays with directional backlighting", SIGGRAPH 2012

[29] S. Ravikumar, K. Akeley, M. S. Banks, "Creating effective focus cues in multi-plane 3D displays", Optics Express, Oct. 2011

[30] Y. Takaki, K. Tanaka, J. Nakamura, "Super multi-view display with a lower resolution flat-panel display", Opt. Express, 19, 5 (Feb) 2011

[31] B. T. Schowengerdt, E. J. Seibel, "3D volumetric scanned light display with multiple fiber optics light sources", IDW 2010

[32] Y. Takaki, "High-density directional display for generating natural three-dimensional images", Proc. IEEE 94, 3, 2006

[33] Yu Xunbo, "Key Technologies of High-resolution, Dense Viewpoint 3D Display", PhD thesis, 2016