Image Segmentation Technology Based on Neural Networks - Dr. Yao Wei

2021-11-16

From autonomous driving to medical diagnosis, image segmentation is everywhere. It is one of the indispensable tasks in computer vision, and it is more complex than other vision tasks because it requires low-level spatial information. Image segmentation falls into two basic types: semantic segmentation and instance segmentation. A combination of these two basic tasks is called panoptic segmentation. In recent years, the success of deep convolutional neural networks (CNNs) has greatly influenced the field of segmentation and produced a range of successful models. The following reviews the evolution of CNN-based semantic and instance segmentation.

1. Introduction

We live in an era of artificial intelligence (AI), and advances in deep learning are driving its rapid spread [1]. Among deep learning models, the convolutional neural network (CNN) [2] has shown excellent performance in high-level computer vision tasks such as image classification [3] and object detection [4]. Although the success of AlexNet [3] shifted computer vision from traditional machine learning algorithms to CNNs, the CNN is not a new concept. It began with Hubel and Wiesel's discovery that the primary visual cortex contains simple and complex neurons, and that visual processing always starts with simple structures such as oriented edges. Inspired by this idea, David Marr contributed the next insight: vision is hierarchical. Kunihiko Fukushima, also inspired by Hubel and Wiesel, built a multilayer neural network of simple and complex neurons called the Neocognitron, which can recognize patterns in images and is spatially invariant. In 1989, Yann LeCun turned the theoretical idea of the Neocognitron into a practical network trained with the backpropagation algorithm; this line of work led to LeNet-5, one of the first CNNs developed to recognize handwritten digits.

LeNet-5 paved the way for the continued success of CNNs in high-level computer vision tasks and inspired researchers to explore their capabilities in pixel-level classification problems such as image segmentation. Compared with traditional machine learning methods, the main advantage of a CNN is that it learns feature representations suited to the problem at hand in an end-to-end manner, rather than relying on manually constructed features that require domain expertise [5]. Image segmentation is widely used, from autonomous vehicles to medical diagnosis.

2. Background

2.1. Why convolutional neural networks?

Image segmentation underlies all of the applications mentioned above. Over the years, researchers have tackled it with traditional machine learning algorithms, aided by techniques such as thresholding, region growing, edge detection, clustering, and superpixels [6]. Most of the successful work relied on hand-crafted features. Such feature engineering requires domain expertise, and progress with these models slowed just as deep learning began to take over the computer vision world. To achieve excellent performance, deep learning needs only data and no hand-crafted feature engineering. Traditional machine learning algorithms also cannot adjust themselves after incorrect predictions, whereas deep learning models adjust their own parameters based on their prediction errors. Among deep learning algorithms, CNNs have achieved great success across computer vision and have come to dominate image segmentation.

2.2. Image segmentation

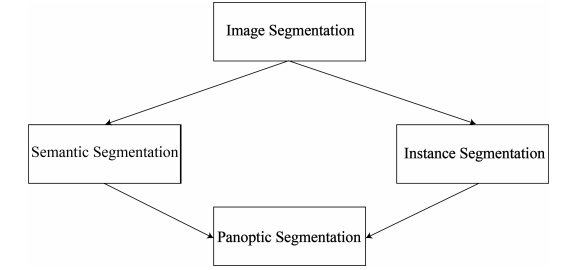

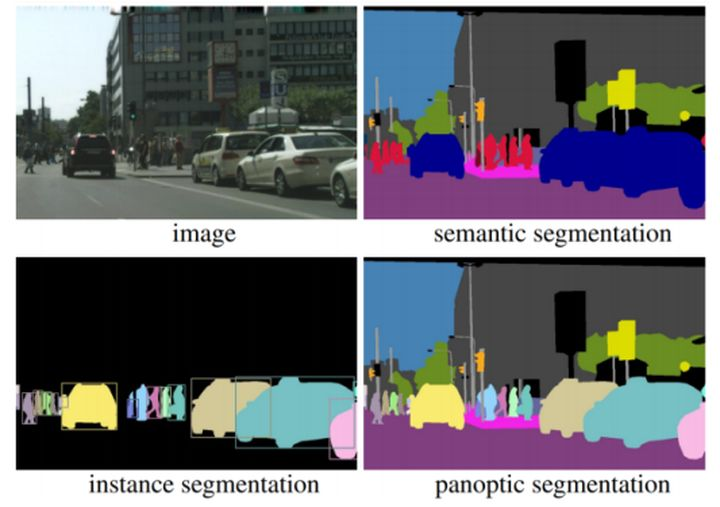

In computer vision, image segmentation partitions a digital image into multiple regions according to the properties of its pixels. Unlike classification and object detection, it is usually a low-level, pixel-level vision task, because the spatial information of an image is essential for separating regions semantically. The purpose of segmentation is to extract meaningful information that is easy to analyze. To this end, pixels are labeled so that all pixels with the same label share specific features such as color, intensity, or texture. There are two basic types of image segmentation: semantic segmentation and instance segmentation. In addition, there is a third type called panoptic segmentation [7], which unifies the two basic tasks. Figure 1 shows the different types of segmentation, and Figure 2 illustrates them with an example. The following sections discuss in detail the state of research on CNN-based image segmentation.

Figure 1. Different types of image segmentation

Figure 2. Examples of different types of image segmentation
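To make the distinction between the segmentation types concrete, the following minimal sketch represents each of them as a label map over a toy 3x4 image. The image contents, the class ids, and the `(class, instance)` pair used for the combined labelling are assumptions made for illustration only; real datasets use their own encodings.

```python
# Toy 3x4 image with two "cat" objects (class 1) on background (class 0).
# Semantic map: one class id per pixel; both cats share class id 1.
semantic = [
    [0, 1, 0, 1],
    [0, 1, 0, 1],
    [0, 0, 0, 0],
]

# Instance map: one object id per pixel (0 = no object); the cats get ids 1 and 2.
instance = [
    [0, 1, 0, 2],
    [0, 1, 0, 2],
    [0, 0, 0, 0],
]


def panoptic(semantic_map, instance_map):
    """Pair each pixel's class id with its instance id (hypothetical encoding)."""
    return [
        [(cls, inst) for cls, inst in zip(sem_row, inst_row)]
        for sem_row, inst_row in zip(semantic_map, instance_map)
    ]


def count_segments(label_map):
    """Number of distinct labels that occur in a label map."""
    return len({label for row in label_map for label in row})


pan = panoptic(semantic, instance)
```

The semantic map cannot tell the two cats apart, the instance map can, and the combined map keeps both the class and the identity of every pixel.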

In addition, CNNs have been successfully applied to video object segmentation. Caelles et al. were the first to use fully convolutional networks for one-shot video object segmentation. Miao et al. used a CNN-based semantic segmentation network together with their proposed memory aggregation network (MA-Net) for interactive video object segmentation. Other researchers used a CNN-based semantic segmentation network as the backbone for collaborative video object segmentation by foreground-background integration. These CNN-based video segmentation models have achieved state-of-the-art results on various video segmentation datasets.

3. Semantic segmentation

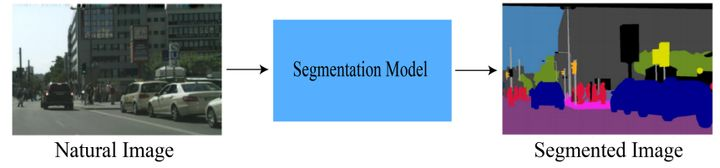

Semantic segmentation is the process of associating each pixel of an image with a class label [8]. Figure 3 shows a black-box view of semantic segmentation. Since the success of AlexNet in 2012, many successful CNN-based semantic segmentation models have appeared. This section traces the development of CNN-based semantic segmentation models.

Figure 3. The process of semantic segmentation.
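The black-box view of semantic segmentation can be sketched in a few lines: a network produces one score per class for every pixel, and the label map keeps the best-scoring class at each position. The scores below are made-up numbers, and `segment` is a hypothetical helper, not part of any model discussed here.

```python
def segment(scores):
    """scores[c][y][x] -> label map holding the best-scoring class at each pixel."""
    num_classes = len(scores)
    height, width = len(scores[0]), len(scores[0][0])
    return [
        [max(range(num_classes), key=lambda c: scores[c][y][x]) for x in range(width)]
        for y in range(height)
    ]


# Made-up class scores for a 2x2 image and three classes.
scores = [
    [[0.90, 0.2], [0.1, 0.3]],  # class 0
    [[0.05, 0.7], [0.2, 0.3]],  # class 1
    [[0.05, 0.1], [0.7, 0.4]],  # class 2
]
labels = segment(scores)
```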

CNNs have been applied to semantic segmentation in very diverse ways. R-CNN first extracts region proposals with the selective search algorithm and then applies a CNN to them for semantic segmentation on PASCAL VOC. R-CNN achieved record results, surpassing second-order pooling, which was the leading hand-engineered semantic segmentation system at the time. Meanwhile, Gupta et al. combined a CNN with a geocentric embedding of RGB-D images for semantic segmentation.

Among CNN-based semantic segmentation models, the fully convolutional network (FCN) has attracted the most attention and started a trend of FCN-based models. To retain the spatial information of the image, FCN-based models remove the fully connected layers of a traditional CNN. In addition, some researchers have used context features to achieve state-of-the-art performance, and others have studied interactive image segmentation with a fully convolutional two-stream fusion network.
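One way to see why dropping the fully connected layers preserves spatial information: a fully connected classifier can be applied independently at every pixel, which is equivalent to a 1x1 convolution and yields a score map instead of a single score vector. The sketch below illustrates that idea under simplified assumptions (tiny integer weights, no learned parameters); it is not the FCN architecture itself, and `dense` and `conv1x1` are hypothetical helper names.

```python
def dense(features, weights, bias):
    """Fully connected layer: features[c] -> scores[k]."""
    return [
        sum(w * f for w, f in zip(row, features)) + b
        for row, b in zip(weights, bias)
    ]


def conv1x1(feature_map, weights, bias):
    """Apply the same dense layer at every pixel of feature_map[c][y][x]."""
    channels = len(feature_map)
    height, width = len(feature_map[0]), len(feature_map[0][0])
    out = [[[0] * width for _ in range(height)] for _ in range(len(weights))]
    for y in range(height):
        for x in range(width):
            pixel = [feature_map[c][y][x] for c in range(channels)]
            for k, score in enumerate(dense(pixel, weights, bias)):
                out[k][y][x] = score
    return out


# Two input channels on a 2x3 grid, two output classes (made-up numbers).
feature_map = [
    [[1, 2, 3], [4, 5, 6]],
    [[1, 0, 1], [0, 1, 0]],
]
weights = [[1, -1], [2, 0]]
bias = [0, 1]
score_map = conv1x1(feature_map, weights, bias)
```

The output keeps the 2x3 layout of the input, so every score can still be traced back to a pixel location.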

Chen et al. combined atrous (dilated) convolution with a conditional random field for semantic segmentation and proposed DeepLab. Later, Atrous Spatial Pyramid Pooling (ASPP) was added in DeepLabv2. DeepLabv3 went a step further and used cascaded, deeper ASPP modules to merge multiple contexts. All three versions of DeepLab achieved good results.
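The idea behind atrous convolution can be shown in one dimension: the kernel taps are spaced `rate` samples apart, so the receptive field grows while the number of weights stays the same. This is a minimal sketch of the operation only, with a made-up signal and kernel; `atrous_conv1d` is a hypothetical helper and does not reproduce DeepLab.

```python
def atrous_conv1d(signal, kernel, rate):
    """'Valid' 1-D cross-correlation with kernel taps spaced `rate` samples apart."""
    span = (len(kernel) - 1) * rate + 1  # receptive field of one output value
    return [
        sum(k * signal[start + i * rate] for i, k in enumerate(kernel))
        for start in range(len(signal) - span + 1)
    ]


signal = [1, 2, 3, 4, 5, 6, 7]
kernel = [1, 0, -1]
dense_out = atrous_conv1d(signal, kernel, rate=1)   # each output sees 3 samples
atrous_out = atrous_conv1d(signal, kernel, rate=2)  # each output sees 5 samples
```

Running several such convolutions with different rates in parallel and merging their outputs is the intuition behind a pyramid of atrous convolutions.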

Ronneberger et al. proposed a U-shaped network called U-Net, which performs semantic segmentation with a contracting path and an expanding path. The contracting path is a conventional convolutional network that extracts feature maps while reducing spatial resolution. The expanding path upsamples the contracted feature maps and combines them with the corresponding feature maps from the contracting path. More recently, a U-Net with multi-resolution blocks has been applied to multimodal biomedical image segmentation and has achieved better segmentation results than the classical U-Net.
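The interplay of the two paths can be sketched with a single feature map: pooling shrinks it, upsampling restores the size but not the detail, and concatenating the encoder map with the upsampled map gives later layers both. The sketch assumes nearest-neighbour upsampling as a stand-in for U-Net's learned up-convolution and leaves out all convolutions; every name and number is illustrative.

```python
def max_pool2(fmap):
    """2x2 max pooling with stride 2 on fmap[y][x] (even height and width assumed)."""
    return [
        [max(fmap[y][x], fmap[y][x + 1], fmap[y + 1][x], fmap[y + 1][x + 1])
         for x in range(0, len(fmap[0]), 2)]
        for y in range(0, len(fmap), 2)
    ]


def upsample2(fmap):
    """Nearest-neighbour 2x upsampling (stand-in for a learned up-convolution)."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]
        out.extend([wide, list(wide)])
    return out


def skip_concat(encoder_channels, decoder_channels):
    """Channel-wise concatenation of same-sized encoder and decoder feature maps."""
    return encoder_channels + decoder_channels


encoder = [[1, 2, 0, 1],
           [3, 4, 1, 0],
           [0, 1, 5, 6],
           [1, 0, 7, 8]]
bottleneck = max_pool2(encoder)             # 2x2: coarse summary
decoded = upsample2(bottleneck)             # 4x4 again, but fine detail is lost
merged = skip_concat([encoder], [decoded])  # 2 channels: detail plus coarse context
```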

Liu et al. added global average pooling and an L2 normalization layer to the FCN architecture and proposed ParseNet, which achieved state-of-the-art results on several datasets. Zhao et al. proposed the Pyramid Scene Parsing Network (PSPNet), which applies a pyramid pooling module to the final extracted feature map to merge global context information for better segmentation. Peng et al. exploited both local and global features through a global convolutional network (GCN) built on large convolution kernels. The Pyramid Attention Network (PAN), ParseNet, PSPNet, and GCN all combine global context information with local features for better segmentation.
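The combination of global average pooling and L2 normalization can be sketched as follows: one context vector is pooled from the whole feature map, both it and each per-pixel feature vector are L2-normalized so that their scales match, and the two are concatenated at every pixel. This is a simplified illustration under assumed shapes and made-up values; it omits the learned scaling and the convolutions of the actual model, and the function names are hypothetical.

```python
import math


def global_average_pool(feature_map):
    """feature_map[c][y][x] -> one mean value per channel."""
    return [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_map
    ]


def l2_normalize(vector, eps=1e-12):
    """Scale a vector to unit L2 norm; eps guards against division by zero."""
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / (norm + eps) for v in vector]


def add_global_context(feature_map):
    """Append the normalized global vector to the normalized local vector per pixel."""
    context = l2_normalize(global_average_pool(feature_map))
    height, width = len(feature_map[0]), len(feature_map[0][0])
    return [
        [l2_normalize([ch[y][x] for ch in feature_map]) + context
         for x in range(width)]
        for y in range(height)
    ]


# Two channels on a 2x2 grid (made-up values).
feature_map = [
    [[6, 0], [0, 6]],
    [[8, 0], [0, 8]],
]
fused = add_global_context(feature_map)
```

Even the pixels whose local features are all zero receive the global context in the second half of their fused vector.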

Researchers have also used CNNs within adversarial networks for semantic segmentation. Luo et al. used CNNs as the generator and the discriminator of an adversarial network and proposed the Category-Level Adversarial Network (CLAN). Other researchers have proposed IBAN and SIBAN. In another study, the authors used a CNN-based Macro-Micro Adversarial Network for human parsing.

Some researchers have applied CNN models to attention-based image segmentation. Wang et al. used a non-local neural network. Huang et al. used DeepLab to extract feature maps and then fed them into a recurrent criss-cross attention module for semantic segmentation. In another study, a global attention network aggregates long-range context information in the convolutional feature maps to address the scene parsing problem.
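The common idea behind these attention modules, aggregating context from all positions regardless of distance, can be sketched as a softmax-weighted sum over positions. The sketch below assumes plain dot-product similarity and omits the learned embeddings and the residual connection that practical non-local blocks use; the feature vectors are made up and `non_local` is a hypothetical helper.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of scores."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def non_local(features):
    """features[i] is the vector at position i; returns context-aggregated vectors."""
    out = []
    for query in features:
        # Similarity of this position to every position (dot product).
        weights = softmax(
            [sum(q * k for q, k in zip(query, key)) for key in features]
        )
        # Weighted sum over all positions, however far apart they are.
        out.append([
            sum(w * vec[d] for w, vec in zip(weights, features))
            for d in range(len(query))
        ])
    return out


# Three positions with 2-D features; positions 0 and 2 look alike.
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
context = non_local(features)
```

Positions 0 and 2 are not adjacent, yet each draws most of its output from the other because their features match.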

Generally speaking, CNN has greatly influenced the field of segmentation and promoted the research and development of semantic segmentation and instance segmentation in image segmentation.

References

[1] Y. LeCun, Y. Bengio, G. Hinton, Deep learning, Nature, 521 (7553) (2015) 436.

[2] J. Wu, Introduction to convolutional neural networks, 2017, National Key Lab for Novel Software Technology. Nanjing University. China, 5, 23.

[3] A. Krizhevsky, I. Sutskever, G.E. Hinton, ImageNet classification with deep convolutional neural networks, Adv. Neural Inf. Process. Syst., 25 (2012) 1097-1105.

[4] S. Zhang, L. Wen, X. Bian, Z. Lei, S.Z. Li, Single-shot refinement neural network for object detection, in: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 4203-4212.

[5] J. Gu, Z. Wang, J. Kuen, L. Ma, A. Shahroudy, B. Shuai, T. Liu, X. Wang, G. Wang, J. Cai, T. Chen, Recent advances in convolutional neural networks, Pattern Recognit., 77 (2018) 354-377.

[6] X. Xie, G. Xie, X. Xu, L. Cui, J. Ren, Automatic image segmentation with superpixels and image-level labels, IEEE Access, 7 (2019) 10999-11009.

[7] D. Zhang, Y. Song, D. Liu, H. Jia, S. Liu, Y. Xia, H. Huang, W. Cai, Panoptic segmentation with an end-to-end cell R-CNN for pathology image analysis, in: International Conference on Medical Image Computing and Computer-Assisted Intervention, Springer, 2018, pp. 237-244.

[8] Y. Wei, X. Liang, Y. Chen, Z. Jie, Y. Xiao, Y. Zhao, S. Yan, Learning to segment with image-level annotations, Pattern Recognit., 59 (2016) 234-244.