Overview of holographic near-eye display technology from a human-centered perspective - Dr. Xin Zhang

2021-11-16

Holographic projection technology can present objects or scenes with strong realism and full three-dimensionality, so it is used across many industries and applications. Wearable near-eye displays for virtual and augmented reality (VR/AR) have grown rapidly in recent years. While researchers are developing a number of techniques to create realistic three-dimensional objects, the role of human perception in guiding hardware development is still poorly understood. The final VR/AR headset must integrate the display, sensors, and processor into a compact housing that people can wear comfortably for long periods, while providing strong immersion and user-friendly human-computer interaction. Compared with other 3D displays, holographic displays have unique advantages in providing natural depth cues and correcting the eye's aberrations. They are therefore expected to become the enabling technology for the next generation of VR/AR devices [1].

1. Infrastructure

With infrastructure and network conditions now in place, cloud AR is expected to accelerate the adoption of the industry. The high bandwidth and low latency of 5G and Wi-Fi 6 are well suited to carrying VR/AR services, which will enrich usage scenarios centered on ultra-high-definition (UHD) streaming media. Meanwhile, wireless terminal devices will greatly improve the user experience and further support applications in outdoor scenarios. Cloud AR services based on cloud computing can effectively address the pain points of high device cost and weight, which make current equipment unsuitable for extended wear, and can promote industry adoption and content development [2].

2. Technical review

Near-eye displays are the platform for virtual reality (VR) and augmented reality (AR), which are expected to revolutionize healthcare, communications, entertainment, education, manufacturing, and other fields. The ideal near-eye display must provide high-resolution images over a wide field of view (FOV) while supporting accommodation cues and a large eyebox in a compact form factor. However, because of trade-offs among resolution, depth cues, FOV, eyebox, and form factor, we are still far from this goal. Easing these trade-offs therefore opens an interesting path toward the next generation of near-eye displays.

The key requirement of a near-eye display system is to present natural three-dimensional (3D) images for a realistic and comfortable viewing experience. Early technologies, such as binocular displays [3], provided 3D perception through stereopsis. Although this type of display is widely used in commercial products such as Sony PlayStation VR and Oculus headsets, it suffers from the vergence-accommodation conflict (VAC), i.e., a mismatch between the distance at which the eyes converge and the distance at which they focus [4], which causes visual fatigue and discomfort. The pursuit of correct focus cues is the driving force behind current near-eye display research. Representative technologies that support accommodation include multifocal/varifocal displays, light field displays, and holographic displays. To reproduce 3D images, multifocal/varifocal displays use spatial or temporal multiplexing to create multiple focal planes [5], while light field displays use microlens arrays [6-8], liquid crystal displays (LCDs) [7], or mirror scanners [8] to modulate the angles of light rays. Although both techniques have the advantage of using incoherent light, they are based on ray optics, a model that provides only coarse wavefront control, which limits their ability to generate accurate and natural focus cues.
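To make the VAC concrete, both demands are commonly expressed in diopters (the reciprocal of distance in meters). The sketch below assumes a conventional stereoscopic headset whose optics place the image at a single fixed focal plane; the 2 m focal plane and the function name are hypothetical illustrations, not figures from the source.

```python
def vac_mismatch_diopters(content_distance_m: float, focal_plane_m: float) -> float:
    """Vergence-accommodation mismatch in diopters (1/m).

    Vergence follows the stereoscopic distance of the rendered content, while
    accommodation stays at the fixed focal plane of the (hypothetical) headset optics.
    """
    if content_distance_m <= 0 or focal_plane_m <= 0:
        raise ValueError("distances must be positive")
    vergence_demand = 1.0 / content_distance_m
    accommodation_demand = 1.0 / focal_plane_m
    return abs(vergence_demand - accommodation_demand)


if __name__ == "__main__":
    focal_plane = 2.0  # hypothetical fixed focal plane at 2 m
    for d in (0.3, 0.5, 1.0, 2.0, 10.0):
        print(f"content at {d:>4} m: mismatch {vac_mismatch_diopters(d, focal_plane):.2f} D")
```

The mismatch vanishes only when content sits at the focal plane and grows quickly for near content, which is where stereoscopic displays are least comfortable.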

Unlike these ray-optics approaches, holographic displays encode and reproduce wavefronts by modulating light with diffractive optical elements, thereby achieving pixel-level focus control, aberration correction, and vision correction [9]. A holographic display numerically encodes the wavefront emitted by a 3D object into a computer-generated hologram (CGH) by simulating holographic superposition. Displaying the CGH on a spatial light modulator (SLM) and illuminating it with a coherent light source optically reconstructs the object. Because they are based on diffraction, holographic displays offer more freedom to manipulate the wavefront than multifocal/varifocal and light field displays, resulting in more flexible accommodation control and a larger depth range.
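The optical reconstruction step has a standard numerical counterpart: simulating free-space propagation of the field leaving the SLM. A minimal sketch using the angular spectrum method follows, assuming NumPy, a square pixel pitch, and monochromatic light; the function name and the example panel parameters are illustrative, not taken from the source.

```python
import numpy as np


def angular_spectrum_propagate(field: np.ndarray, wavelength: float,
                               pitch: float, z: float) -> np.ndarray:
    """Propagate a sampled complex field over distance z (meters) in free space.

    Angular spectrum method: FFT into plane-wave components, multiply by the
    propagation phase exp(i*kz*z), inverse FFT. Evanescent components
    (spatial frequencies beyond 1/wavelength) are discarded.
    """
    ny, nx = field.shape
    fx = np.fft.fftfreq(nx, d=pitch)  # spatial frequencies in cycles/m
    fy = np.fft.fftfreq(ny, d=pitch)
    FX, FY = np.meshgrid(fx, fy)  # shape (ny, nx)
    arg = 1.0 / wavelength**2 - FX**2 - FY**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))
    transfer = np.where(arg > 0, np.exp(1j * kz * z), 0.0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical phase-only CGH: 512 x 512 pixels, 8 um pitch, 532 nm laser.
    cgh = np.exp(1j * 2 * np.pi * rng.random((512, 512)))
    image = angular_spectrum_propagate(cgh, 532e-9, 8e-6, 0.1)
    intensity = np.abs(image) ** 2
    print("reconstructed intensity range:", intensity.min(), intensity.max())
```

Because the transfer function has unit magnitude for propagating waves, propagating by z and then by -z returns the original field, which is a convenient sanity check.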

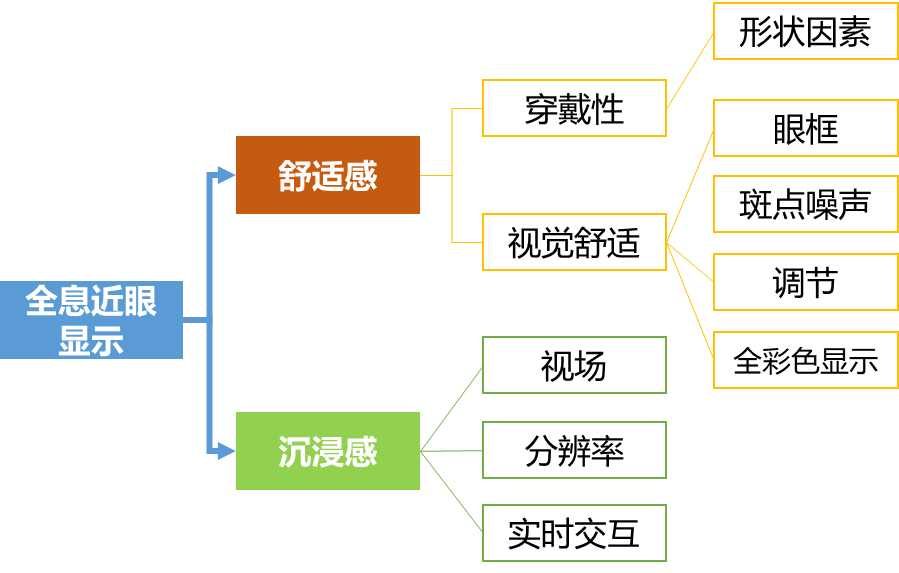

Figure 1. Categorization of holographic near-eye displays from a human-centered perspective

From the viewer's point of view, the ultimate experience has two characteristics: comfort and immersion. Comfort relates to wearability, vestibular sense, visual perception, and social ability, while immersion involves field of view, resolution, and gesture and tactile interaction. To provide such an experience, hardware must be designed around the human visual system. Therefore, from a human-centered perspective, holographic near-eye displays can be classified into two categories, comfort and immersion, as shown in Figure 1.

3. Human visual system

The human eye is a naturally evolved optical imaging system. Light enters the eye through the pupil, whose size is adjusted by the iris to control how much light is transmitted. After refraction by the cornea and lens, light forms an image on the retina, where photoreceptors convert light signals into electrical signals. These electrical signals then undergo substantial processing within the retina (for example, lateral inhibition by horizontal cells) before reaching the brain. Finally, the brain interprets the image and perceives the object in 3D based on multiple cues.

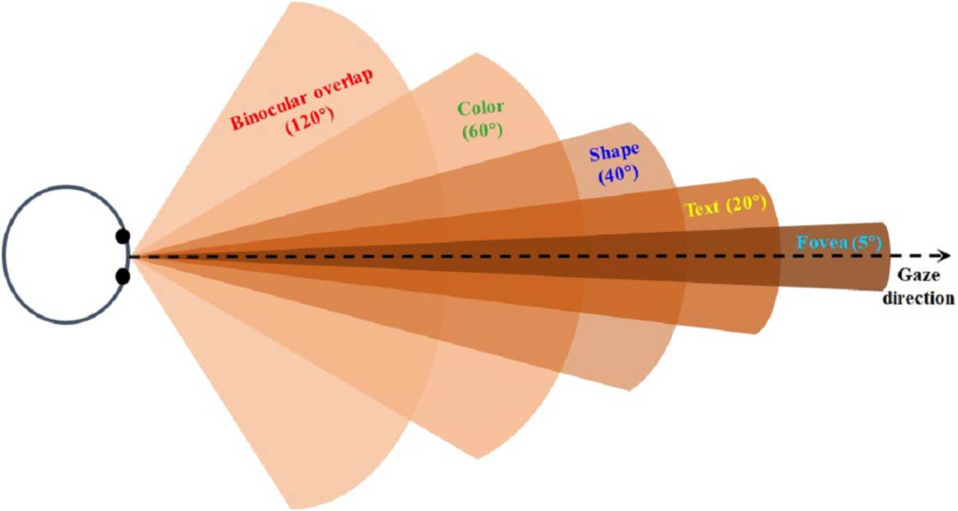

Figure 2. Field of view of human eyes

Figure 2 shows the field of view of human eyes. Knowledge of the human eye is essential for human-centered optimization and provides a foundation for hardware design. To maximize comfort and immersion, we must build displays that best fit the optical structure of the human visual system.

4. Holographic near-eye display: comfort

A. Wearability

The form factor of the device determines its wearability. The ideal near-eye display should be as light and compact as regular eyeglasses and comfortable to wear all day. Compared with other technologies, holographic near-eye displays have the advantage of simple optics: their key components are just a coherent light source and an SLM. An SLM is essentially a pixelated display that modulates the amplitude or phase of the incident light, thereby imprinting the CGH onto the wavefront.
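What a phase-only SLM does to the illuminating wave can be sketched in a few lines: it multiplies the incident field by exp(iφ), where φ is the CGH phase limited to the device's discrete levels. This sketch assumes a phase-only device with 256 levels (an 8-bit assumption, not stated in the source); an amplitude-type SLM would instead scale the field's magnitude and is not modeled here.

```python
import numpy as np


def apply_phase_slm(incident: np.ndarray, target_phase: np.ndarray,
                    levels: int = 256):
    """Imprint a target phase pattern onto an incident field, as a phase-only SLM would.

    The phase is wrapped to [0, 2*pi) and quantized to `levels` discrete values
    (256 assumes a hypothetical 8-bit device). Amplitude is left unchanged.
    Returns (outgoing_field, quantized_phase).
    """
    step = 2 * np.pi / levels
    wrapped = np.mod(target_phase, 2 * np.pi)
    # Round to the nearest level; the top level wraps back to 0 (same as 2*pi).
    quantized = (np.round(wrapped / step) % levels) * step
    return incident * np.exp(1j * quantized), quantized


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    plane_wave = np.ones((4, 4), dtype=complex)  # uniform coherent illumination
    cgh_phase = 2 * np.pi * rng.random((4, 4))
    out, q = apply_phase_slm(plane_wave, cgh_phase)
    print(np.round(np.angle(out), 3))
```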

B. Visual comfort

(1) Eyebox

In a lensless holographic near-eye display, the active area of the SLM determines the exit pupil of the system and thus the eyebox.
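For the lensless case this reduces to simple arithmetic: the exit pupil, and hence the eyebox, follows the SLM's active area, i.e. pixel count times pixel pitch. The panel below is a hypothetical example, and the sketch ignores any magnification that relay optics would introduce in other architectures.

```python
def slm_active_area_mm(pixels_x: int, pixels_y: int, pitch_um: float):
    """Width and height (mm) of an SLM's active area: pixel count x pixel pitch."""
    return pixels_x * pitch_um / 1000.0, pixels_y * pitch_um / 1000.0


if __name__ == "__main__":
    # Hypothetical panel: 1920 x 1080 pixels at an 8 um pitch.
    width, height = slm_active_area_mm(1920, 1080, 8.0)
    # In a lensless layout the exit pupil (and eyebox) follows this aperture.
    print(f"eyebox ~ {width:.2f} mm x {height:.2f} mm")
```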

(2) Speckle noise

Because holography requires coherent light, holographic displays usually use lasers as light sources. However, laser illumination produces speckle noise, a grainy intensity pattern superimposed on the image. Three strategies can be used to suppress speckle noise: superposition, spatial coherence construction, and temporal coherence destruction.
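The superposition strategy rests on a standard speckle-statistics result: summing N mutually uncorrelated speckle patterns in intensity (for example, patterns shown within the eye's integration time) lowers the speckle contrast C = σ/μ by roughly 1/√N. The simulation below idealizes each pattern as fully developed speckle from a random-phase field; the helper names and the 256 × 256 grid are illustrative assumptions.

```python
import numpy as np


def speckle_contrast(intensity: np.ndarray) -> float:
    """Speckle contrast C = standard deviation / mean of the intensity."""
    return float(intensity.std() / intensity.mean())


def speckle_pattern(rng: np.random.Generator, shape=(256, 256)) -> np.ndarray:
    """Idealized fully developed speckle: far-field (FFT) intensity of a
    unit-amplitude field with uniformly random phase."""
    field = np.exp(1j * 2 * np.pi * rng.random(shape))
    return np.abs(np.fft.fft2(field)) ** 2


def averaged_contrast(n_patterns: int, rng: np.random.Generator) -> float:
    """Contrast after summing n mutually uncorrelated speckle patterns in intensity."""
    total = sum(speckle_pattern(rng) for _ in range(n_patterns))
    return speckle_contrast(total)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    for n in (1, 4, 16, 64):
        print(f"N={n:>2}: C={averaged_contrast(n, rng):.3f}  (1/sqrt(N)={n ** -0.5:.3f})")
```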

(3) Accommodation

By computing the CGH from physical light propagation and interference, a holographic display can reproduce accommodation cues accurately. In physics-based CGH calculation, virtual 3D objects are represented by point emitters or by polygon meshes whose facets emit wavefronts. Both models typically require dense point clouds or fine mesh sampling to reproduce continuous and smooth depth cues. Although many methods exist to accelerate CGH computation from point-cloud or polygon-based 3D models, such physics-based CGH still struggles to process massive amounts of data in real time.
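The point-emitter model can be sketched as a direct sum of spherical waves on the SLM plane, which also shows why it scales poorly: every point touches every pixel, so the cost is O(P × N). This is a minimal, unoptimized illustration assuming NumPy, an SLM at z = 0, and points in front of it; the scene and panel parameters are hypothetical, and taking only the phase is a naive encoding rather than a method from the source.

```python
import numpy as np


def point_source_cgh(points, amplitudes, nx, ny, pitch, wavelength):
    """Superpose spherical waves from point emitters on the SLM plane (z = 0).

    points: iterable of (x, y, z) in meters, z > 0 being the distance from the SLM.
    Returns the complex field at the SLM; cost is O(P * nx * ny).
    """
    k = 2 * np.pi / wavelength
    xs = (np.arange(nx) - nx // 2) * pitch  # pixel-center coordinates
    ys = (np.arange(ny) - ny // 2) * pitch
    X, Y = np.meshgrid(xs, ys)  # shape (ny, nx)
    field = np.zeros((ny, nx), dtype=complex)
    for (px, py, pz), a in zip(points, amplitudes):
        r = np.sqrt((X - px) ** 2 + (Y - py) ** 2 + pz ** 2)
        field += a * np.exp(1j * k * r) / r  # spherical wave from one emitter
    return field


if __name__ == "__main__":
    # Hypothetical scene: three points at different depths, 8 um pitch, 532 nm.
    pts = [(0.0, 0.0, 0.10), (2e-4, -1e-4, 0.12), (-3e-4, 2e-4, 0.15)]
    holo = point_source_cgh(pts, [1.0, 0.8, 0.6], 512, 512, 8e-6, 532e-9)
    phase_only = np.angle(holo)  # naive phase-only encoding for an SLM
    print(phase_only.shape, phase_only.min(), phase_only.max())
```

The direct sum is only valid while the local fringe frequency stays below the SLM's Nyquist limit, 1/(2·pitch); points very close to the panel or far off-axis would alias. With the hypothetical parameters above, the fringes stay below that limit across the 512 × 512 panel.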

(4) Full color display

Because a CGH is wavelength dependent, displaying color is a challenge for holographic near-eye displays. There are two approaches to full-color display: time division (time multiplexing) and space division (spatial multiplexing).
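A quick way to see the wavelength sensitivity is the paraxial zone-plate relation. A Fresnel hologram of a point at depth z₀ designed for wavelength λ₀ has phase π r²/(λ₀ z₀); setting this equal to π r²/(λ z) shows that illumination at λ refocuses at z = z₀ λ₀ / λ. So a CGH computed for green focuses red light closer and blue light farther, which is why time-division systems compute a separate CGH for each color and show it in sync with the matching laser. The 638/532/450 nm laser lines below are typical values assumed for illustration.

```python
def refocus_depth_m(z_design_m: float, lambda_design_m: float,
                    lambda_read_m: float) -> float:
    """Paraxial refocus distance of a Fresnel (zone-plate) point hologram.

    Fringes encoding a point at z_design for lambda_design focus at
    z_design * lambda_design / lambda_read under another wavelength.
    """
    return z_design_m * lambda_design_m / lambda_read_m


if __name__ == "__main__":
    z0, green = 0.5, 532e-9  # hypothetical: point designed at 0.5 m for a green laser
    for name, lam in (("red", 638e-9), ("green", 532e-9), ("blue", 450e-9)):
        print(f"{name:>5}: refocuses at {refocus_depth_m(z0, green, lam):.3f} m")
```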

5. Holographic near-eye display: immersion

A. Field of view

Field of view is defined as the range of angles (or distances) that the displayed image spans. In holographic displays, the SLM (loaded with a Fresnel or Fourier hologram) is usually located at the exit pupil of the display system, so the diffraction angle of the SLM determines the maximum size of the holographic image and thus the field of view of the system.
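The diffraction limit can be estimated from the grating equation: an SLM with pixel pitch p diffracts light of wavelength λ into a full angle of about 2·arcsin(λ/(2p)). This sketch assumes, as above, that the SLM sits at the exit pupil with no magnifying optics; the pixel pitches are hypothetical examples.

```python
import math


def slm_fov_deg(wavelength_m: float, pitch_m: float) -> float:
    """Full diffraction angle 2*asin(lambda / (2*p)) of a pixelated SLM, in degrees."""
    s = wavelength_m / (2 * pitch_m)
    if s >= 1:
        raise ValueError("pixel pitch must exceed wavelength / 2")
    return math.degrees(2 * math.asin(s))


def pitch_for_fov_m(wavelength_m: float, fov_deg: float) -> float:
    """Inverse relation: pixel pitch needed for a target full FOV."""
    return wavelength_m / (2 * math.sin(math.radians(fov_deg) / 2))


if __name__ == "__main__":
    for pitch_um in (8.0, 3.74, 1.0):  # hypothetical pixel pitches
        print(f"p = {pitch_um} um -> FOV ~ {slm_fov_deg(532e-9, pitch_um * 1e-6):.2f} deg")
    print(f"90 deg FOV needs p ~ {pitch_for_fov_m(532e-9, 90) * 1e9:.0f} nm")
```

The inverse function makes the trade-off explicit: an 8 µm pitch gives under 4° at 532 nm, while a 90° FOV would call for a pitch of roughly 0.38 µm.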

B. Resolution and foveated rendering

Most commercial near-eye displays currently have an angular resolution of 10-15 pixels per degree (PPD), compared with about 60 PPD for normal adult vision, and the resulting pixelated images undermine immersion. Foveated display techniques improve resolution in the central (foveal) region of vision (about ±5°) while mapping peripheral regions with fewer pixels. Combined with eye tracking, foveated displays can provide a large field of view with higher perceived resolution. A common implementation uses multiple display panels to create images of different resolutions.
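The pixel savings of a two-panel foveated layout follow from PPD arithmetic: pixels along one axis equal angular extent times PPD. The targets below are hypothetical (a 100° square FOV, a 60 PPD inset covering ±5°, and 15 PPD in the periphery, the upper end of the commercial range quoted above), and the count deliberately includes the overlap between the two panels.

```python
def uniform_pixel_count(fov_deg: float, ppd: float) -> int:
    """Pixels for a square field covered at a single angular resolution."""
    n = round(fov_deg * ppd)
    return n * n


def foveated_pixel_count(fov_deg: float, fovea_deg: float,
                         fovea_ppd: float, periphery_ppd: float) -> int:
    """Hypothetical two-panel layout: a high-PPD inset over the fovea plus a
    low-PPD panel spanning the whole field (the overlap is counted twice)."""
    inset = round(fovea_deg * fovea_ppd)
    wide = round(fov_deg * periphery_ppd)
    return inset * inset + wide * wide


if __name__ == "__main__":
    # Hypothetical targets: 100 deg square FOV, 60 PPD fovea (+/-5 deg), 15 PPD periphery.
    full = uniform_pixel_count(100, 60)
    foveated = foveated_pixel_count(100, 10, 60, 15)
    print(f"uniform 60 PPD: {full:,} px; foveated: {foveated:,} px")
```

Under these assumptions the foveated layout needs about 2.6 million pixels instead of 36 million, roughly 14 times fewer, which is why eye tracking is needed to keep the inset on the fovea.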

C. Real-time interaction

(1) Gesture and tactile interaction

Human-computer interaction is essential to enhancing immersion. In virtual reality systems, when users interact with virtual objects in the displayed environment, gesture or tactile feedback is widely used to simulate the physical interaction and make it feel more realistic.

(2) Real-time CGH calculation

For current holographic near-eye displays, CGHs are usually computed offline because of the huge amount of 3D data involved. For real-time interaction, fast CGH calculation is key; it can be achieved through fast algorithms and hardware improvements.

6. Conclusion

Demand for AR/VR devices has been growing. The ability to create comfortable, immersive viewing experiences is critical to moving this technology from lab-based research into the consumer market. Holographic near-eye displays are uniquely positioned to address this unmet need by providing precise pixel-by-pixel focus control. This article reviews progress in this field from a human-centered perspective, in the hope that such a perspective will stimulate new thinking and raise awareness of the role of the human visual system in guiding future hardware design.

References:

[1] C. Chang, K. Bang, G. Wetzstein, et al., "Toward the next-generation VR/AR optics: a review of holographic near-eye displays from a human-centric perspective," Optica 7(11), 1563 (2020).

[2] "VR/AR hardware industry research report: the industry chain is increasingly mature and an industry boom is imminent," https://www.vzkoo.com/doc/37802.html?a=1&keyword=AI%E7%A1%AC%E4%BB%B6

[3] Y. Wang, W. Liu, X. Meng, H. Fu, D. Zhang, Y. Kang, R. Feng, Z. Wei, X. Zhu, and G. Jiang, "Development of an immersive virtual reality head-mounted display with high performance," Appl. Opt. 55, 6969-6977 (2016).

[4] E. Peli, "Real vision & virtual reality," Opt. Photon. News 6(7), 28 (1995).

[5] W. Cui and L. Gao, "All-passive transformable optical mapping with near-eye display," Sci. Rep. 9, 6064 (2019).

[6] D. Lanman and D. Luebke, "Near-eye light field displays," ACM Trans. Graph. 32, 220 (2013).

[7] F.-C. Huang, K. Chen, and G. Wetzstein, "The light field stereoscope: immersive computer graphics via factored near-eye light field displays with focus cues," ACM Trans. Graph. 34, 60 (2015).

[8] C. Jang, K. Bang, S. Moon, J. Kim, S. Lee, and B. Lee, "Retinal 3D: augmented reality near-eye display via pupil-tracked light field projection on retina," ACM Trans. Graph. 36, 190 (2017).

[9] K. Wakunami, P.-Y. Hsieh, R. Oi, T. Senoh, H. Sasaki, Y. Ichihashi, M. Okui, Y.-P. Huang, and K. Yamamoto, "Projection-type see-through holographic three-dimensional display," Nat. Commun. 7, 12954 (2016).