Introduction to Image Recognition Technology Based on Convolutional Neural Networks - Dr. Yao Wei

2021-11-12

The convolutional neural network (CNN) model was first proposed by Alexander Waibel in 1987 [1]. When a CNN is applied to image classification problems with growing data volumes, its successive layers progressively reduce the dimensionality of the data, which makes the network easier to train. In recent years, CNNs have achieved repeated breakthroughs in many fields, including face recognition, text analysis, emotion description, and natural language processing, and they continue to be widely studied by researchers in related fields [2].

Like ordinary neural networks, CNNs are built from neurons with weights and biases. The biggest difference is that a convolutional network can extract features directly from the input image. In a convolutional layer, each neuron is connected to only some of the neurons in the previous layer, and the layer contains several feature maps. All neurons in the same feature map share one convolution kernel. The kernel is initialized with small random values, and reasonable weights are then learned during training. The pooling layer can also be viewed as a special kind of convolution. In a convolutional neural network, a feature extractor composed of convolutional and pooling layers greatly simplifies the model and reduces the number of parameters that have to be optimized.
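The remark that pooling is a special kind of convolution can be made concrete for average pooling. The following is a minimal sketch in Python with NumPy; the function names and the 4x4 input are illustrative assumptions, not taken from the original text. It checks that non-overlapping 2x2 average pooling gives the same result as sliding a fixed kernel whose entries are all 1/4 with a stride of 2.

```python
import numpy as np

def avg_pool2d(x, k):
    """Non-overlapping k x k average pooling on a 2-D array."""
    h, w = x.shape[0] // k, x.shape[1] // k
    # Split the array into (h, k, w, k) blocks and average inside each block.
    return x[:h * k, :w * k].reshape(h, k, w, k).mean(axis=(1, 3))

def strided_conv(x, kernel, stride):
    """Slide a square kernel over x with the given stride (no padding)."""
    k = kernel.shape[0]
    rows = range(0, x.shape[0] - k + 1, stride)
    cols = range(0, x.shape[1] - k + 1, stride)
    return np.array([[np.sum(x[i:i + k, j:j + k] * kernel) for j in cols]
                     for i in rows])

x = np.arange(16, dtype=float).reshape(4, 4)   # hypothetical input
uniform_kernel = np.full((2, 2), 1.0 / 4.0)    # fixed weights, nothing to learn

pooled = avg_pool2d(x, 2)
convolved = strided_conv(x, uniform_kernel, stride=2)
print(np.allclose(pooled, convolved))
```

Max pooling uses the same sliding window but replaces the weighted sum with a maximum, so it matches convolution only in this looser, sliding-window sense.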

1. The convolution layer

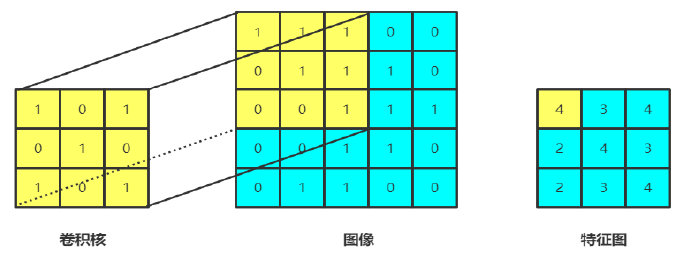

The convolutional layer is the core part of a convolutional neural network. In essence, convolution extracts features from data using the parameters of a convolution kernel, and each result is obtained by element-wise multiplication followed by summation. The convolutional layer is a powerful feature extractor [3], and its core ideas are local connection and weight sharing. Concretely, a set of convolution kernels slides over the input feature map, and at each position the kernel parameters are multiplied element by element with the corresponding pixels of the feature map and summed to produce the output feature map.

The convolutional layer uses local connections, which greatly reduces the number of weights per neuron. The convolution operation is shown in Figure 1. The convolution kernel is given a stride and slides across the image in a fixed order, generally from the upper left to the lower right. At each position, the kernel is multiplied element-wise with the corresponding region of the image and the products are summed. In this way, the convolutional layer extracts local features from the input data.

Figure 1. Convolution process
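The sliding-window operation described above can be sketched in a few lines of Python with NumPy. This is only an illustration under stated assumptions: a single-channel image, no padding, and a hypothetical 4x4 input and 2x2 kernel chosen for readability. As in most deep learning libraries, the kernel is not flipped, so strictly speaking the code computes a cross-correlation.

```python
import numpy as np

def conv2d(image, kernel, stride=1):
    """Slide `kernel` over `image` (no padding) and return the feature map."""
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    feature_map = np.zeros((out_h, out_w))
    for i in range(out_h):            # move from top to bottom
        for j in range(out_w):        # move from left to right
            top, left = i * stride, j * stride
            region = image[top:top + kh, left:left + kw]
            # Element-wise multiply, then sum: one output value per position.
            feature_map[i, j] = np.sum(region * kernel)
    return feature_map

# Hypothetical example data.
image = np.array([[1, 2, 0, 1],
                  [0, 1, 3, 2],
                  [2, 1, 0, 1],
                  [1, 0, 2, 3]], dtype=float)
kernel = np.array([[1, 0],
                   [0, -1]], dtype=float)

map_stride1 = conv2d(image, kernel, stride=1)   # 3 x 3 output
map_stride2 = conv2d(image, kernel, stride=2)   # 2 x 2 output
print(map_stride1)
print(map_stride2)
```

A larger stride visits fewer positions, so the output feature map shrinks from 3x3 to 2x2 while the same kernel weights are reused at every position.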

Another feature of the convolutional layer is weight sharing. A single convolution kernel is convolved over the whole image to extract one kind of feature, and the weights of that kernel stay the same at every position during the process.

2. Local connections

In image recognition, pixels that are close together in space are strongly correlated, while pixels that are far apart are only weakly correlated. Therefore, during learning, each neuron only needs to perceive a local region of the image rather than the whole image. Local connection means that each neuron is connected to only a spatially local part of the input image. Compared with a traditional fully connected neural network, the local connections of the convolutional layer effectively reduce the number of model parameters.
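A quick parameter count shows how large this reduction can be. The sketch below assumes a hypothetical 28x28 single-channel input and a 26x26 output map (what a 3x3 kernel with stride 1 and no padding would produce); the sizes and function names are illustrative and do not come from the original text. It compares full connection, local connection without weight sharing, and a shared convolution kernel.

```python
def fully_connected_params(n_in, n_out):
    # Every output neuron has one weight per input pixel, plus one bias.
    return n_in * n_out + n_out

def locally_connected_params(n_out, kernel_size):
    # Every output neuron sees only a kernel_size x kernel_size patch,
    # but each neuron still owns its own weights and bias.
    return n_out * kernel_size * kernel_size + n_out

def shared_kernel_params(kernel_size):
    # One kernel and one bias are reused at every position.
    return kernel_size * kernel_size + 1

n_in = 28 * 28     # hypothetical input size
n_out = 26 * 26    # output positions for a 3 x 3 kernel, stride 1, no padding

fc_count = fully_connected_params(n_in, n_out)
local_count = locally_connected_params(n_out, 3)
conv_count = shared_kernel_params(3)
print(fc_count, local_count, conv_count)   # 530660 6760 10
```

Local connection alone removes most of the weights, and weight sharing then reduces the count for one feature map to the size of a single kernel.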

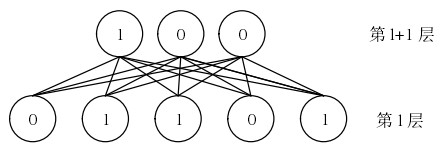

3. Fully connected layer

The fully connected layer generally follows the feature maps output by the convolutional part of the network. Each of its neurons is connected to all neurons of the previous layer, as shown in Figure 2. After several rounds of convolution, pooling, and activation, the model feeds the learned feature maps into the fully connected layer. There, the high-dimensional feature maps are flattened into a one-dimensional vector, which the classifier can use for classification or regression. Stacking multiple fully connected layers in a CNN can improve performance, but it also greatly increases the number of parameters in the network.

Figure 2. Full connection
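The flattening step and the dense connection can be sketched as follows. This is a minimal illustration in Python with NumPy, assuming a hypothetical stack of 8 feature maps of size 4x4 and 10 output classes; the random weights stand in for values that would normally be learned.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical output of the convolutional part: (channels, height, width).
feature_maps = rng.standard_normal((8, 4, 4))

# Flatten the feature maps into a one-dimensional vector.
flat = feature_maps.reshape(-1)            # length 8 * 4 * 4 = 128

# Fully connected layer: every output neuron is connected to every input.
num_classes = 10
W = rng.standard_normal((num_classes, flat.size)) * 0.01
b = np.zeros(num_classes)
scores = W @ flat + b

# Softmax turns the scores into class probabilities for a classifier.
exp_scores = np.exp(scores - scores.max())
probs = exp_scores / exp_scores.sum()

n_params = W.size + b.size                 # 128 * 10 + 10 = 1290
print(flat.shape, scores.shape, n_params)
```

Even this small layer needs 1290 parameters, which illustrates why adding more fully connected layers quickly enlarges the model.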

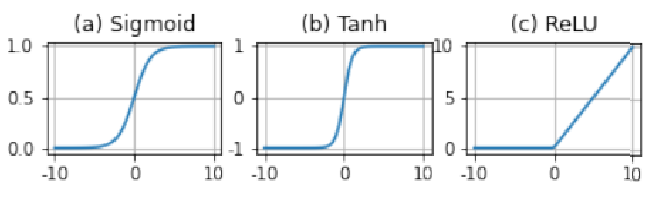

4. Activation function

If a convolutional neural network consists only of stacked linear convolution operations, it cannot solve nonlinear problems and struggles to extract high-level semantic information. Early neural networks did not use activation functions, so consecutive layers were related by ordinary matrix multiplication; the whole network then collapses to a single linear map and cannot learn nonlinear features of the data. An activation function changes the mathematical relationship between the input and output of a layer: the output of the previous layer is passed through the activation function, which makes the overall mapping nonlinear. This gives deep neural networks greater expressive power and lets them handle a wide range of nonlinear problems. Common activation functions include Tanh, Sigmoid, and ReLU, as shown in Figure 3.

Figure 3. Common activation functions
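The three activation functions named above, and the reason stacked linear layers are not enough, can be illustrated with a short NumPy sketch. The weight matrices and inputs are hypothetical values chosen only for the demonstration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def tanh(x):
    return np.tanh(x)

def relu(x):
    return np.maximum(0.0, x)

x = np.array([-2.0, 0.0, 2.0])
print(sigmoid(x), tanh(x), relu(x))

# Two linear layers without an activation collapse into one linear layer.
rng = np.random.default_rng(0)
W1 = rng.standard_normal((4, 3))    # hypothetical first-layer weights
W2 = rng.standard_normal((2, 4))    # hypothetical second-layer weights
stacked = W2 @ (W1 @ x)
collapsed = (W2 @ W1) @ x
print(np.allclose(stacked, collapsed))

# ReLU is not linear: it does not satisfy f(a + b) = f(a) + f(b).
a, b = np.array([-1.0]), np.array([1.0])
relu_of_sum = relu(a + b)           # relu(0)
sum_of_relu = relu(a) + relu(b)     # relu(-1) + relu(1)
print(relu_of_sum, sum_of_relu)
```

Because the stacked and collapsed results agree, depth adds nothing to a purely linear network; inserting a nonlinear function such as ReLU between the layers is what breaks this equivalence.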

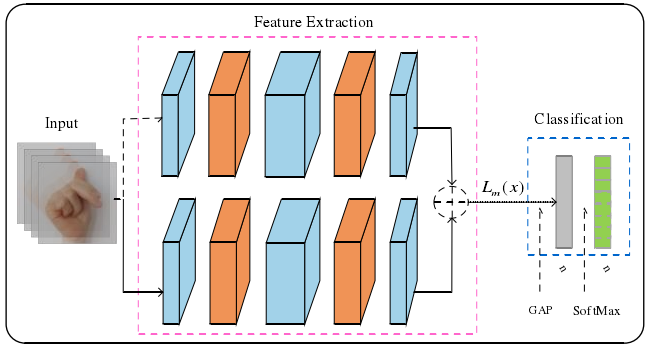

Two-channel feature fusion gesture recognition network with a weighted loss function

At present, gesture image recognition algorithms still face two important challenges. First, recognition accuracy is limited by the high similarity between gesture categories and by self-occlusion between fingers. Second, gesture recognition algorithms based on convolutional neural networks have a large number of parameters and place high demands on computer hardware, which makes them difficult to deploy on resource-constrained mobile and embedded devices. To address these two problems, the work in [2] designed a two-channel feature fusion gesture recognition network with a weighted loss function (WDN). On the one hand, the weighted loss function balances the weights of the local features from the two channels. On the other hand, convolutional layers replace the fully connected layers to reduce the number of model parameters.

WDN adopts a two-channel feature fusion strategy with a weighted loss, as shown in Figure 4. Its network structure is the same as that of the two-channel network model; the difference is that WDN trains the whole network with the weighted loss function in order to balance the weights of the local features of the two channels.

Figure 4. WDN network structure [2]
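The text does not give the exact form of the weighted loss used in [2]. Purely as an illustration of the general idea, the sketch below assumes that each channel produces its own class scores and that the two cross-entropy losses are combined with a weight `alpha`. The function names, the parameter `alpha`, and the example scores are all hypothetical and may differ from the formulation in [2].

```python
import numpy as np

def cross_entropy(logits, label):
    """Cross-entropy loss of one sample, computed from raw class scores."""
    shifted = logits - np.max(logits)                  # for numerical stability
    log_probs = shifted - np.log(np.sum(np.exp(shifted)))
    return -log_probs[label]

def weighted_two_channel_loss(logits_a, logits_b, label, alpha=0.5):
    """Assumed form: alpha * loss(channel A) + (1 - alpha) * loss(channel B)."""
    loss_a = cross_entropy(logits_a, label)
    loss_b = cross_entropy(logits_b, label)
    return alpha * loss_a + (1.0 - alpha) * loss_b

# Hypothetical class scores from the two channels for one gesture image.
logits_a = np.array([2.0, 0.5, -1.0])
logits_b = np.array([0.1, 1.5, 0.3])
label = 0

loss = weighted_two_channel_loss(logits_a, logits_b, label, alpha=0.7)
print(loss)
```

With `alpha` above 0.5, errors made by the first channel contribute more to the training signal; choosing this weight is what balances the two channels in such a scheme.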

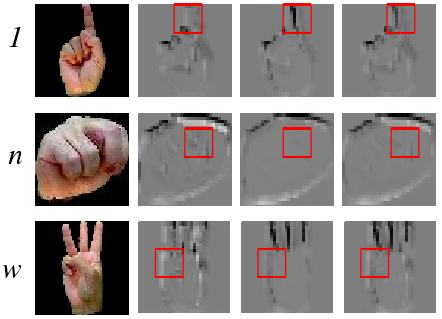

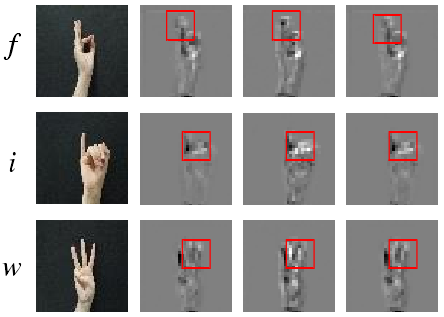

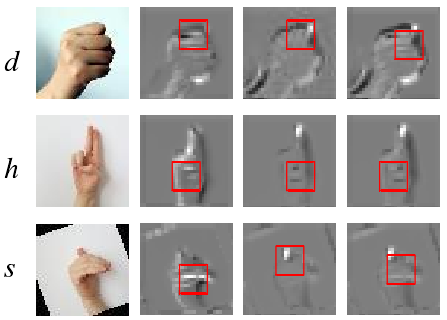

The author of [2] uses the visualization tool TensorBoard to visualize the feature maps that WDN extracts from gesture images in two benchmark datasets and one self-built dataset. The visualization clearly shows the gesture features extracted by the convolutional neural network during the convolution operations, as shown in Figure 5.

Figure 5. Visualization of feature maps, panels (a), (b), and (c)

The experimental results reported in [2] show that the two-channel feature fusion gesture recognition network with a weighted loss function effectively improves gesture recognition accuracy while using fewer model parameters.

References

[1] Waibel A, Hanazawa T, Hinton G, et al. Phoneme recognition using time-delay neural networks[J]. IEEE Transactions on Acoustics, Speech, and Signal Processing, 1989, 37(3): 328-339.

[2] Han Wenjing. Research on Gesture Recognition Method Based on Improved Convolutional Neural Network[D]. Guangxi Normal University, 2021.

[3] Schwenk H, Barrault L, Conneau A, et al. Very deep convolutional networks for text classification[J]. Association for Computational Linguistics, 2017: 1107-1116.