3D Point Cloud Technology Series (4) @0901- Dr. Junchao Yang

2021-11-11

1. Introduction

As a key technology for future-oriented intelligent manufacturing, robots offer strong controllability, high flexibility, and adaptable configuration, and they are widely used in parts processing, cooperative handling, object grasping, and component assembly. However, most traditional robot systems work in structured environments and carry out single, repetitive operations through off-line programming, which can no longer meet the growing demand for intelligence in production and daily life. With the continuous development of computer and sensor technology, we expect to build intelligent robot systems with more sensitive perception and more capable decision-making. Robotic grasping and placing is a concentrated embodiment of such systems and has been studied extensively in both industry and academia in recent years.

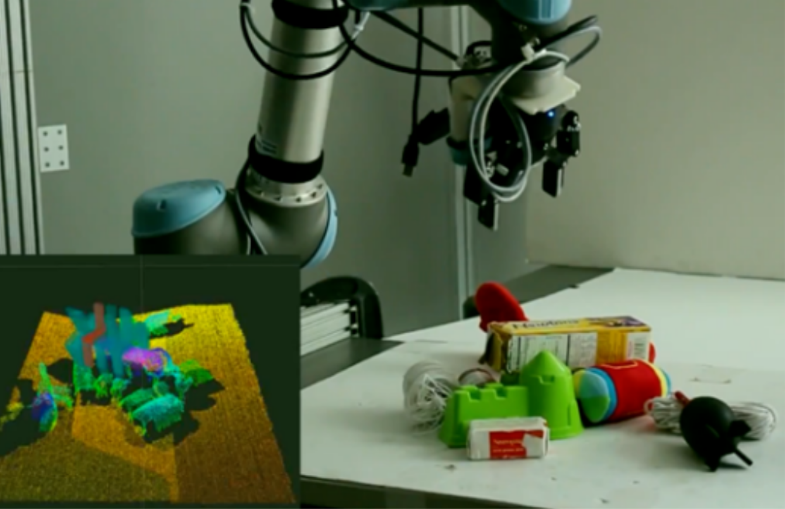

Figure 1. Robot grasping diagram based on 3D point cloud

In recent years, with the rapid development of low-cost depth sensors and lidar, acquiring 3D point clouds has become more and more convenient. Compared with planar 2D images, 3D point cloud data has the following advantages: (1) it expresses the geometric shape, spatial position, and orientation of objects more faithfully and accurately; (2) it is less affected by changes in light intensity, imaging distance, and viewpoint; (3) it does not suffer from the projective transformation problems of 2D images. These advantages mean that 3D point cloud data is expected to overcome many shortcomings of planar 2D images in robotic target recognition and grasping, so it has important research significance and broad application prospects. As a result, point cloud vision and point-cloud-based robot grasping have become a new research hotspot in robotics.

2. Robot Grasping Method Based on 3D Point Cloud

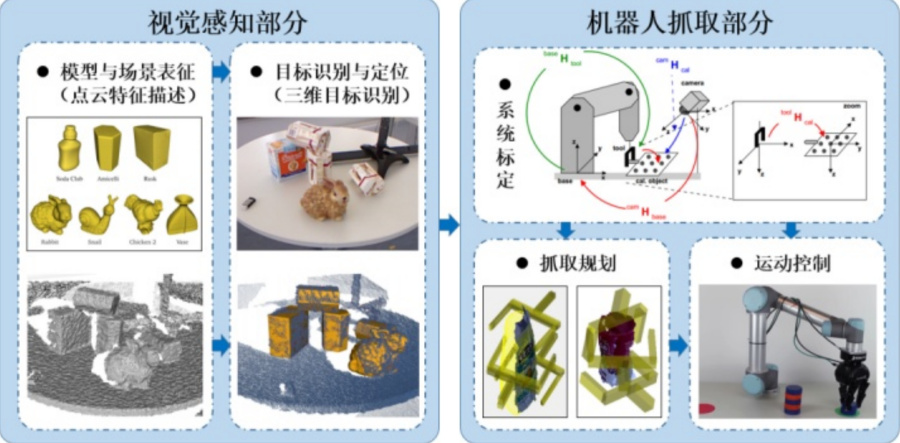

Figure 2. Schematic diagram of robot grasping process based on point cloud

The point-cloud-based robot grasping process can be divided into two parts, as shown in Figure 2: visual perception and the robot grasping operation. The grasping operation comprises system calibration, grasp planning, and motion control.

2.1 3D Target Recognition

In point-cloud-based robot grasping, the key step is target recognition and localization, that is, recognizing the 3D target in the point cloud scene and estimating its pose for grasping. Existing 3D object recognition algorithms fall mainly into four groups: local-feature-based, voting-based, template-matching-based, and learning-based.

Recognition based on local features consists of five main steps: key point detection, feature extraction, feature matching, hypothesis generation, and hypothesis verification. Key point detection and feature extraction together produce the representation of the model and the scene. Classic key point detectors for point clouds include Harris 3D, ISS (Intrinsic Shape Signatures), and NARF (Normal Aligned Radial Feature). Feature extraction computes robust local descriptors on the surface of the object. Feature matching establishes correspondences between key points of the model and the scene; classic strategies include the nearest neighbor distance ratio (NNDR), a distance threshold, and the nearest neighbor (NN) strategy. Hypothesis generation uses the matched features to find candidate locations of the model in the scene and computes a pose estimate (a transformation hypothesis) for each. Hypothesis verification then selects, from these candidate transformations, the one that is actually correct.
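As an illustration of the feature matching step, the sketch below applies the NNDR test to two small lists of descriptors. It is a minimal example, not the implementation of any particular library: the function name `match_nndr`, the Euclidean distance, and the ratio threshold of 0.8 are assumptions chosen for illustration, and real descriptors would have many more dimensions.

```python
import math

def match_nndr(model_desc, scene_desc, ratio=0.8):
    """Return (scene_index, model_index) pairs that pass the NNDR test.

    model_desc and scene_desc are sequences of equal-length numeric tuples.
    The ratio threshold is an illustrative assumption.
    """
    matches = []
    for i, d in enumerate(scene_desc):
        # Distance from this scene descriptor to every model descriptor,
        # sorted so the two closest candidates come first.
        dists = sorted((math.dist(d, m), j) for j, m in enumerate(model_desc))
        if len(dists) < 2:
            continue
        (best, j), (second, _) = dists[0], dists[1]
        # Keep the match only if the best candidate is clearly closer
        # than the runner-up; ambiguous matches are discarded.
        if second > 0 and best / second < ratio:
            matches.append((i, j))
    return matches
```

A scene descriptor that lies equally close to two model descriptors has a ratio of 1 and is rejected, which is the behavior that distinguishes NNDR from the plain nearest neighbor strategy.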

Voting-based 3D object recognition directly matches intrinsic features of the model and the scene. After a finite set of candidate poses is generated, a support function and a penalty function are constructed from prior conditions, each pose is scored by voting, and the optimal transformation matrix is obtained.

Template-matching-based recognition mainly targets textureless objects. An existing 3D model is rendered from different viewpoints to generate 2D RGB-D images, which are then converted into templates. All templates are matched against the scene to obtain the best model pose.

Learning-based recognition uses a regression forest to predict, for each pixel of the input image, the identity of the object and the position of that pixel in the coordinate system of the object model, the so-called "object coordinates". An optimization based on random sample consensus (RANSAC) then samples triplets of correspondences to create a pool of pose hypotheses, and the hypothesis that is most consistent with the predictions is selected as the final pose estimate.
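The sampling of correspondence triplets can be sketched as follows. This is a simplified illustration under stated assumptions, not the method of any specific paper: it assumes that row i of `model_pts` has already been put in correspondence with row i of `scene_pts`, it scores each hypothesis by counting inliers, and it fits each rigid transform with the standard SVD-based (Kabsch) solution. The names `fit_rigid` and `ransac_pose`, the iteration count, and the inlier tolerance are hypothetical.

```python
import numpy as np

def fit_rigid(src, dst):
    """Least-squares rotation R and translation t with dst ≈ src @ R.T + t."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = dst_c - R @ src_c
    return R, t

def ransac_pose(model_pts, scene_pts, iters=200, inlier_tol=0.01, seed=0):
    """Estimate a pose from paired 3D points that may contain wrong matches."""
    rng = np.random.default_rng(seed)
    best_R, best_t = np.eye(3), np.zeros(3)
    best_inliers = np.zeros(len(model_pts), dtype=bool)
    for _ in range(iters):
        # Three correspondences are the minimum needed for a rigid transform.
        idx = rng.choice(len(model_pts), size=3, replace=False)
        R, t = fit_rigid(model_pts[idx], scene_pts[idx])
        residual = np.linalg.norm(model_pts @ R.T + t - scene_pts, axis=1)
        inliers = residual < inlier_tol
        if inliers.sum() > best_inliers.sum():
            best_R, best_t, best_inliers = R, t, inliers
    # Refit on all inliers of the best hypothesis for a more stable estimate.
    if best_inliers.sum() >= 3:
        best_R, best_t = fit_rigid(model_pts[best_inliers], scene_pts[best_inliers])
    return best_R, best_t, best_inliers
```

Counting inliers is only one possible consistency measure; a learned or energy-based score could be substituted in the same loop.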

2.2 Robot Grasping Technology Based on Point Cloud

The grasping operation comprises system calibration, grasp planning, and motion control. System calibration mainly covers camera calibration and robot calibration. Because the position and orientation of the object to be grasped are estimated in the camera coordinate system, they must be transformed into the robot coordinate system before the robot can grasp the object accurately.
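The change of coordinate system can be written with 4x4 homogeneous transforms. The sketch below assumes that calibration has already produced `T_base_cam`, the pose of the camera in the robot base frame, and that perception has produced `T_cam_obj`, the pose of the object in the camera frame; both names and the helper functions are hypothetical.

```python
import numpy as np

def make_transform(R, t):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a translation."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T

def camera_to_base(T_base_cam, T_cam_obj):
    """Express an object pose given in the camera frame in the robot base frame."""
    # Chaining the transforms: base <- camera <- object.
    return T_base_cam @ T_cam_obj
```

For example, with a camera mounted at (0.5, 0, 1.0) m in the base frame and looking straight down (a rotation of 180 degrees about the x axis, `np.diag([1, -1, -1])`), an object seen at (0.1, 0.2, 0.8) m in the camera frame lies at (0.6, -0.2, 0.2) m in the base frame.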

The main role of grasp planning is to extract grasp points on the target object in the scene. A grasping strategy should ensure stability, task compatibility, and adaptability to new objects. In addition, grasp quality can be evaluated from the positions of the contact points on the object and the configuration of the end gripper. At present, there are two main families of grasping methods: experience-based methods and end-to-end methods.

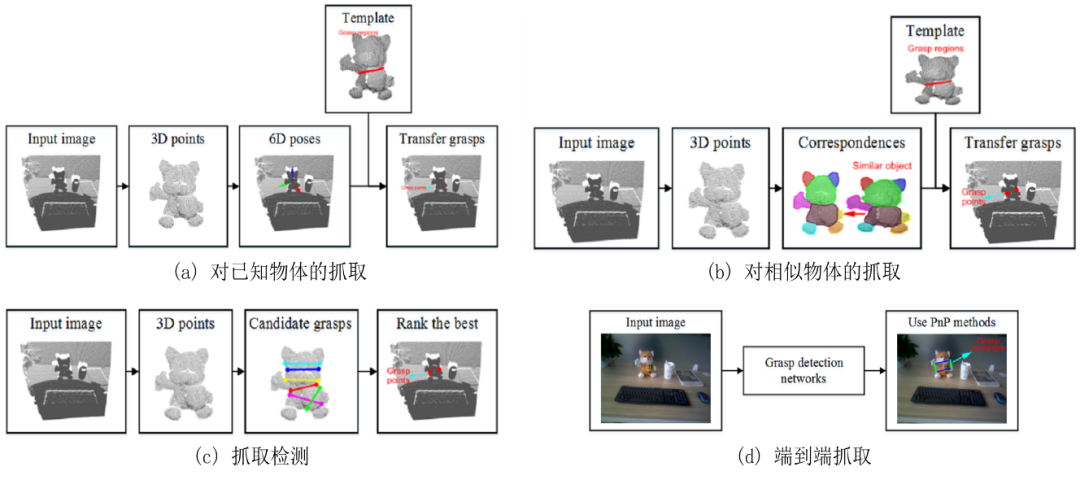

The experience-based approach selects a grasping algorithm according to the specific task and the geometry of the object. More specifically, it can be divided into grasping known objects and grasping similar objects. If the object is known, the robot can grasp it by learning from existing successful grasps and combining them with the specific environment. In fact, a known target object means that its 3D model and grasp points are available a priori in a database. In this case, we only need to estimate the 6D pose of the target object from a partial view, refine the pose with ICP (Iterative Closest Point) or a similar algorithm, and then obtain the grasp position on the target object. Because of its high grasping accuracy, this is currently a popular approach. However, when the 3D model is inaccurate, for example when the object cannot be measured or deforms easily, the grasp can deviate considerably. In many cases the target object is not identical to any model in the database but belongs to the same class as a similar model in the library, which leads to the grasping of similar objects. After the target object is located, grasp points can be transferred from the similar 3D model in the library to the currently observed partial object using a key point correspondence algorithm.
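The pose refinement step can be illustrated with a bare-bones point-to-point ICP. This is a sketch under simplifying assumptions: nearest neighbors are found by brute force rather than with a k-d tree, there is no outlier rejection or convergence test, and the initial pose `(R0, t0)` is assumed to come from the recognition stage. The function names and the fixed iteration count are hypothetical.

```python
import numpy as np

def fit_rigid(src, dst):
    """Least-squares rotation R and translation t with dst ≈ src @ R.T + t."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    return R, dst_c - R @ src_c

def icp(model, scene, R0, t0, iters=20):
    """Refine an initial pose (R0, t0) that maps model points into the scene."""
    R, t = R0, t0
    for _ in range(iters):
        moved = model @ R.T + t
        # Squared distance from every transformed model point to every scene point.
        d2 = ((moved[:, None, :] - scene[None, :, :]) ** 2).sum(axis=2)
        nearest = scene[d2.argmin(axis=1)]
        # Re-estimate the pose from the current closest-point pairing.
        R, t = fit_rigid(model, nearest)
    return R, t
```

Once `R` and `t` are refined, a grasp point `p` stored with the database model can be mapped into the scene as `R @ p + t`. ICP only converges to the correct pose when the initial estimate is already close, which is why it is used as a refinement step rather than on its own.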

End-to-end grasp detection skips the localization of the target altogether and extracts grasp points directly from the input image. A sliding window strategy is commonly used in such methods. The grasp points computed by end-to-end methods may not be globally optimal, because only part of the object is visible in the image.

Figure 3. Schematic diagram of different grasping schemes

The motion control part of robot grasping mainly designs the path from the manipulator to the grasp points on the target object. The key problem here is motion representation. Although there are infinitely many trajectories from the manipulator to the target grasp point, many configurations cannot be reached because of the limitations of the manipulator, so trajectories need to be planned.

There are three main approaches to trajectory planning: the traditional DMP-based approach, imitation learning, and reinforcement learning. Traditional methods consider the dynamics of motion and generate motion primitives. Dynamic Movement Primitives (DMPs) are one of the most popular motion representations and can be used as feedback controllers.
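To make the idea of a DMP concrete, the sketch below integrates a one-dimensional discrete DMP in its common textbook form: a critically damped spring-damper pulls the state toward the goal, and a phase-dependent forcing term shapes the path. The gains, the basis function widths, the Euler integration, and the name `rollout_dmp` are illustrative assumptions; in practice the weights would be learned from a demonstrated trajectory.

```python
import math

def rollout_dmp(y0, goal, weights, tau=1.0, dt=0.001, alpha_z=25.0, alpha_x=4.0):
    """Integrate a 1-D discrete DMP for one movement duration tau."""
    beta_z = alpha_z / 4.0  # makes the spring-damper critically damped
    n = len(weights)
    # Basis centres spaced evenly in time, expressed in the phase variable x.
    centres = [math.exp(-alpha_x * i / max(n - 1, 1)) for i in range(n)]
    widths = [n ** 1.5 / c for c in centres]  # ad hoc width heuristic
    y, z, x = y0, 0.0, 1.0
    path = [y]
    for _ in range(int(round(tau / dt))):
        psi = [math.exp(-h * (x - c) ** 2) for h, c in zip(widths, centres)]
        total = sum(psi)
        forcing = 0.0
        if total > 1e-12:
            # Weighted basis functions, scaled by the phase and movement amplitude.
            forcing = sum(p * w for p, w in zip(psi, weights)) / total * x * (goal - y0)
        dz = (alpha_z * (beta_z * (goal - y) - z) + forcing) / tau
        dy = z / tau
        dx = -alpha_x * x / tau  # canonical system: the phase decays from 1 to 0
        z, y, x = z + dz * dt, y + dy * dt, x + dx * dt
        path.append(y)
    return path
```

With all weights set to zero the forcing term vanishes and the rollout is a smooth reach from `y0` to `goal`. Because the forcing term is multiplied by the decaying phase, nonzero weights change the shape of the path but not the point it eventually settles at.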

During grasping, limited space and obstacles can prevent the robot from approaching the target object, which requires the robot to interact with the environment. In such obstacle-avoiding grasping tasks, the most common trajectory planning method is object-centric modeling, which separates the target from the environment. This approach works well in structured or semi-structured environments because objects are well separated. There is also an obstruction-centric approach that uses action primitives to make simultaneous contact with multiple objects. In this way, the robot can grasp the target while touching and moving it to clear the desired path.

3. Conclusion

3D point clouds capture the geometric structure and depth information of the surrounding environment, which has made point-cloud-based methods the mainstream in 3D perception, where they achieve state-of-the-art (SoTA) results. This article has summarized robot grasping technology based on 3D point clouds. Follow-up articles will analyze and elaborate on other classic 3D point cloud processing methods and their industrial applications.