A Brief Overview of the Technical Roadmap for VR Headset Optics - Dr Du of Fan

2021-11-11

This article reviews how VR headset hardware architectures (sensors and power cells), display architectures, and emerging optical architectures have evolved over the past six years. Together, these three evolutions form the backbone of an exciting VR hardware roadmap: headsets that are smaller and lighter, with a larger FOV and higher resolution. The result is a more comfortable user experience and new applications beyond today's games. These hardware improvements should let VR headsets penetrate the enterprise and consumer productivity markets. Today, by contrast, VR headsets are largely supported by the consumer gaming market, while AR headsets are largely supported by the enterprise market.

1 Hardware Architecture Evolution

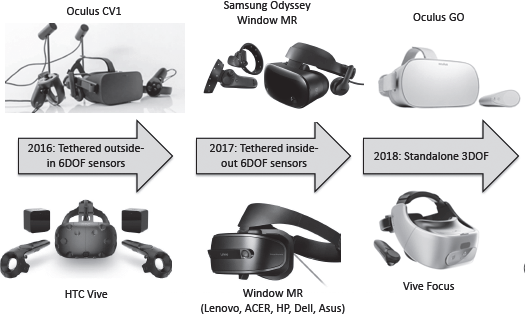

Before reviewing the optical roadmap for VR headsets, we review the overall hardware architecture roadmap (see Figure 1). Most of the original VR headsets (such as the early FakeSpace Wide 5 and the later Oculus DK1/DK2) were tethered to high-end computers and used outside-in, user-facing cameras for head tracking. These were followed by more sophisticated external sensing systems, as in the Oculus CV1 and HTC Vive, where the cameras and sensors were bundled with a PC configured with a specific GPU.

Figure 1. VR hardware architecture roadmap, 2016-2019: from PC-tethered headsets with outside-in sensors to standalone devices with inside-out sensors.

These external sensors had to be fixed at specified positions in the VR space. In 2017, Windows MR headsets from third-party manufacturers such as Samsung (Odyssey), Acer, Lenovo, HP, and Dell offered a true 6DOF inside-out sensing experience, but they were still tethered to a PC with a high-end GPU. Then, in 2018, came cheaper and simpler standalone 3DOF headsets with IMU sensors, such as the Oculus Go.

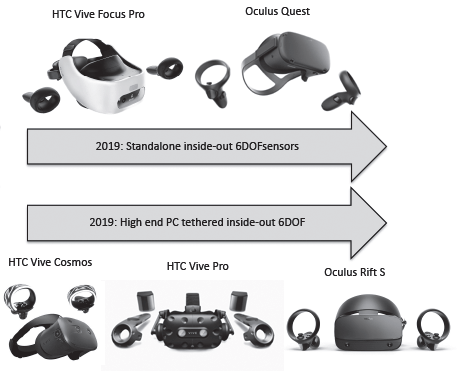

Meanwhile, the industry moved both toward standalone VR headsets with 6DOF inside-out sensors (e.g., HTC Vive Focus or Oculus Quest) and toward high-end, PC-tethered VR headsets with inside-out sensors (e.g., Oculus Rift S or HTC Vive Pro S). The lower-priced HTC Vive Cosmos (spring 2019) is PC-tethered, has inside-out 6DOF sensors, is modular, and has a flip-up visor, a welcome feature for VR headsets that others have also adopted (HP, for example).

What is remarkable is how quickly sensing has evolved from outside-in to inside-out. It started with external cameras in the early days (as in the Oculus DK1 and DK2). It then moved to bulky spatial positioning systems such as those of the HTC Vive and Oculus CV1. It has now reached slim all-in-one headsets with inside-out tracking, such as today's Oculus Quest and HTC Vive Focus Pro.

Given that the first hardware work on VR headsets began in the early 1990s, more than a quarter century ago, we are now witnessing rapid architectural evolution, which can only be sustained if the current hype cycle continues.

2 Display Technology Evolution

The latest VR boom has benefited from the overwhelming dominance and low-cost availability of smartphone technology: displays, low-power yet fast mobile processors, WiFi/Bluetooth connectivity, IMUs, front and rear cameras, and depth-map sensors.

Earlier VR headsets, designed a decade before smartphone technology appeared, relied on more exotic display architectures (perhaps better suited to immersive displays), such as the 1D LED array with a scanning mirror in Nintendo's 1995 Virtual Boy headset.

Between the two VR booms of the early 2000s and early 2010s, most "video player headsets" or "video smart glasses" were based on low-cost LCoS and DLP microdisplays originally developed for DSLR electronic viewfinders and pico-projectors (including EMS microprojector optical engines). Starting in the mid-2000s, the aggressive smartphone market pushed the display industry to produce high-ppi LTPS LCD panels, then IPS LCD panels, and finally AMOLED panels.

These panels became available at low cost with pixel densities of up to 800 ppi, recently exceeding 1,250 ppi. In 2017, Google and LG Display demonstrated a 4.3-inch, 18 Mp (3840 × 4800) OLED-on-glass display for VR with a pixel density of 1443 ppi (17.6 µm pixels), a brightness of 150 cd/m² at a 20% duty cycle, a contrast ratio of up to 15000:1, 10-bit color depth, and a 120 Hz refresh rate. However, filling a typical 160° (H) × 150° (V) human field of view at a resolution of 1 arcmin requires 9,600 × 9,000 pixels per eye. Foveated rendering is therefore a "must-have" feature: it reduces the number of rendered pixels without compromising perceived resolution.
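The figures above can be checked with a few lines of arithmetic. The sketch below, assuming square pixels and uniform angular sampling, converts pixel density to pixel pitch, recomputes the panel diagonal, and counts the pixels needed at 1 arcmin. The foveated estimate uses a hypothetical model (`foveated_pixel_count`, with a circular full-resolution inset of radius `fovea_radius_deg`, coarser sampling elsewhere, and a flat small-angle treatment of the field). It only illustrates why foveation saves pixels; it is not a rendering algorithm.

```python
import math

MM_PER_INCH = 25.4


def pixel_pitch_um(ppi: float) -> float:
    """Pixel pitch in micrometres for a pixel density in pixels per inch."""
    return MM_PER_INCH * 1000.0 / ppi


def panel_diagonal_in(h_px: int, v_px: int, ppi: float) -> float:
    """Panel diagonal in inches, assuming square pixels."""
    return math.hypot(h_px, v_px) / ppi


def pixels_for_fov(fov_deg: float, arcmin_per_px: float = 1.0) -> int:
    """Pixels needed to span fov_deg with uniform angular sampling."""
    return math.ceil(fov_deg * 60.0 / arcmin_per_px)


def foveated_pixel_count(h_fov_deg: float, v_fov_deg: float,
                         fovea_radius_deg: float,
                         periph_arcmin_per_px: float) -> float:
    """Hypothetical budget: 1 arcmin inside a circular inset, coarser outside.

    Treats the field as a flat angular rectangle (small-angle approximation).
    """
    full_density = 60.0 ** 2                            # px per deg^2 at 1 arcmin
    periph_density = (60.0 / periph_arcmin_per_px) ** 2  # px per deg^2 outside inset
    fovea_area = math.pi * fovea_radius_deg ** 2
    periph_area = h_fov_deg * v_fov_deg - fovea_area
    return fovea_area * full_density + periph_area * periph_density


if __name__ == "__main__":
    print(f"pitch at 1443 ppi: {pixel_pitch_um(1443):.1f} um")
    print(f"3840x4800 at 1443 ppi: {panel_diagonal_in(3840, 4800, 1443):.2f} in")
    full = pixels_for_fov(160) * pixels_for_fov(150)
    fov = foveated_pixel_count(160, 150, fovea_radius_deg=15, periph_arcmin_per_px=4)
    print(f"uniform 1 arcmin: {full:,} px; foveated sketch: {fov:,.0f} px")
```

With a 15° inset and 4 arcmin peripheral sampling (both arbitrary choices), the sketch needs roughly 7.8 Mpx instead of 86.4 Mpx per eye. The 3840 × 4800 panel at 1443 ppi also comes out at about 4.26 inches, consistent with the quoted 4.3-inch size.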

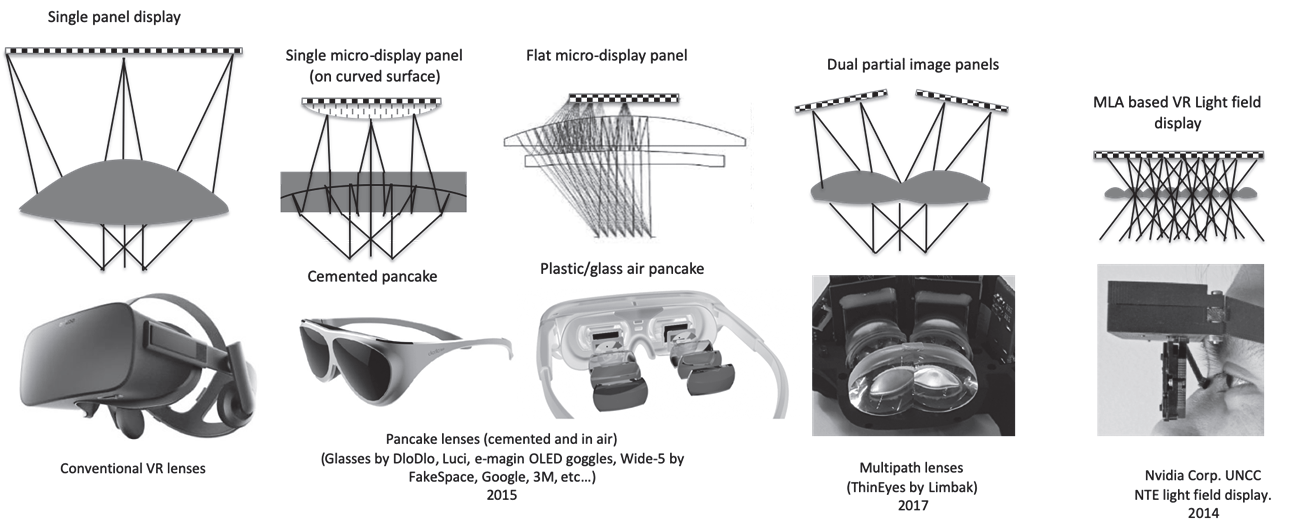

HTPS LCD and silicon-backplane micro-OLED microdisplays have also been used in VR headsets, but only in combination with next-generation optics such as pancake lenses, multipath lenses, or even MLAs (see below).

3 Optical Technology Evolution

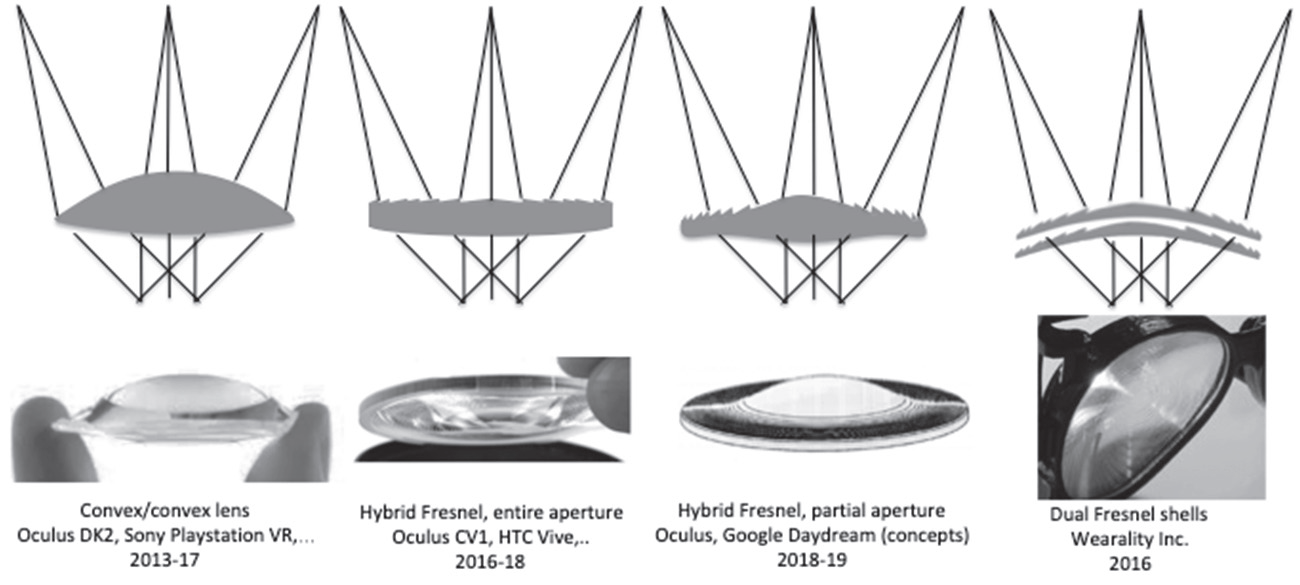

Conventional refractive lenses (such as those in the Oculus DK1 and DK2 and in the Sony PlayStation VR) are limited by their acceptance angle, weight, and size. These limits constrain their focal length and thus the distance between the display and the optics. That distance in turn determines the size of the HMD and the weight and center-of-gravity (CG) position of the headset. Some early headsets (such as the DK1) shipped with several interchangeable lenses to suit different users' needs.
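To see why a conventional refractive lens becomes thick and heavy as its focal length shrinks, the sketch below uses the thin-lens relation for a plano-convex singlet, R = (n - 1) f, and the spherical sag at the lens edge. The refractive index (1.49, roughly that of PMMA), the 1 mm edge thickness, and the dimensions in the usage lines are assumptions for illustration, not parameters of any headset named above.

```python
import math


def plano_convex_radius(focal_mm: float, n: float = 1.49) -> float:
    """Curvature radius of a thin plano-convex lens: 1/f = (n - 1) / R."""
    return (n - 1.0) * focal_mm


def sag(r_mm: float, radius_mm: float) -> float:
    """Height of a spherical surface at radial distance r from its vertex."""
    if r_mm >= radius_mm:
        raise ValueError("aperture too large for this curvature")
    return radius_mm - math.sqrt(radius_mm ** 2 - r_mm ** 2)


def center_thickness(focal_mm: float, semi_aperture_mm: float,
                     edge_mm: float = 1.0, n: float = 1.49) -> float:
    """Centre thickness = edge thickness + sag of the curved face at the edge."""
    return edge_mm + sag(semi_aperture_mm, plano_convex_radius(focal_mm, n))


if __name__ == "__main__":
    for f in (80.0, 60.0, 50.0):
        t = center_thickness(f, semi_aperture_mm=20.0)
        print(f"f = {f:.0f} mm -> centre thickness ~ {t:.1f} mm")
```

For a 40 mm clear aperture, the centre thickness in this model grows from about 6.5 mm at f = 80 mm to about 11.4 mm at f = 50 mm. Below roughly f = 41 mm, a single spherical surface can no longer cover the aperture at all (the function raises `ValueError`).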

Figure 2. Successive VR lens configurations designed to increase FOV and reduce weight and size.

Over the past few years, most VR HMDs have used hybrid Fresnel lenses: the HTC Vive and Vive Pro, most Windows MR headsets, the Oculus line from the CV1 to the Quest and Rift S, and many others (see Figure 2). Fresnel lenses are much thinner, but they produce Fresnel ring artifacts (glare), especially at large field angles.
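The thickness saving of a Fresnel lens comes from collapsing the continuous lens surface into concentric zones. The sketch below, assuming a spherical base surface and an arbitrary groove depth, wraps the sag modulo the groove depth. Real Fresnel designs are aspheric and optimize draft angles, which this ignores. The near-vertical steps between zones (the draft facets) contribute nothing to imaging, and light scattered by them is one source of the ring artifacts mentioned above.

```python
import math


def sag(r_mm: float, radius_mm: float) -> float:
    """Spherical sag at radius r from the vertex."""
    return radius_mm - math.sqrt(radius_mm ** 2 - r_mm ** 2)


def fresnel_sag(r_mm: float, radius_mm: float, groove_depth_mm: float) -> float:
    """Wrap the continuous sag into zones no deeper than groove_depth_mm.

    Within each zone the local slope (and hence the refraction) is unchanged;
    only the bulk material between zones is removed.
    """
    return math.fmod(sag(r_mm, radius_mm), groove_depth_mm)


def zone_radii(radius_mm: float, semi_aperture_mm: float,
               groove_depth_mm: float) -> list[float]:
    """Radii at which the wrapped profile drops back by one groove depth."""
    radii = []
    k = 1
    while True:
        z = k * groove_depth_mm            # continuous sag at the k-th step
        if z >= radius_mm:
            break
        # invert sag(r) = z  ->  r^2 = R^2 - (R - z)^2
        r = math.sqrt(radius_mm ** 2 - (radius_mm - z) ** 2)
        if r >= semi_aperture_mm:
            break
        radii.append(r)
        k += 1
    return radii


if __name__ == "__main__":
    steps = zone_radii(39.2, 20.0, 0.5)
    print(f"{len(steps)} steps, first at r = {steps[0]:.2f} mm")
```

For a base radius of 39.2 mm over a 20 mm semi-aperture (about 5.5 mm of continuous sag), a 0.5 mm groove depth yields 10 steps (11 zones), and no part of the profile is deeper than 0.5 mm. The zones become narrower toward the edge, where the surface is steepest.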

Such hybrid lenses can be refractive Fresnel or diffractive Fresnel. A hybrid diffractive Fresnel lens on a curved surface can effectively compensate the lateral chromatic aberration (LCA) of a singlet, achromatizing it. Recent lens designs keep the central region purely refractive and switch to a hybrid Fresnel toward the edge of the lens (Figure 2, center right). This reduces the total thickness without degrading the high-resolution central region used at small field angles. Alternative refractive Fresnel concepts, such as the one from Wearality (far right of Figure 2), aim to increase the FOV considerably without increasing display size while significantly reducing lens weight and thickness. However, this lens produces more severe Fresnel ring ghosts across the entire field of view.
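The reason a diffractive surface can correct a refractive singlet's color is that its dispersion has the opposite sign. The sketch below uses the textbook thin-lens achromat condition for two elements in contact, φ_r/V_r + φ_d/V_d = 0, together with the Abbe number of a diffractive surface, V_d = λ_d / (λ_F - λ_C) ≈ -3.45. For a magnifier, a wavelength-dependent focal length appears as wavelength-dependent angular magnification (lateral color), so cancelling the power variation addresses it to first order. The refractive Abbe number (57.4, approximately PMMA) and the 50 mm focal length are assumptions for illustration.

```python
import math

# Fraunhofer lines in nm: d (helium), F and C (hydrogen)
LAMBDA_D, LAMBDA_F, LAMBDA_C = 587.56, 486.13, 656.27


def diffractive_abbe() -> float:
    """Abbe number of a diffractive surface: lambda_d / (lambda_F - lambda_C)."""
    return LAMBDA_D / (LAMBDA_F - LAMBDA_C)


def achromat_split(total_power: float, v_refr: float, v_diff: float):
    """Split power between two thin elements in contact so that
    phi_r / V_r + phi_d / V_d = 0 (primary colour cancelled)."""
    phi_r = total_power * v_refr / (v_refr - v_diff)
    phi_d = -total_power * v_diff / (v_refr - v_diff)
    return phi_r, phi_d


def diffractive_zone_radius(m: int, wavelength_mm: float, focal_mm: float) -> float:
    """Paraxial radius of the m-th zone boundary of a diffractive lens."""
    return math.sqrt(2.0 * m * wavelength_mm * focal_mm)


if __name__ == "__main__":
    phi = 1.0 / 50.0                     # assumed 50 mm total focal length
    v_d = diffractive_abbe()
    phi_r, phi_d = achromat_split(phi, 57.4, v_d)
    print(f"V_diff = {v_d:.2f}; diffractive share = {phi_d / phi:.1%}")
    f_d = 1.0 / phi_d
    r1 = diffractive_zone_radius(1, LAMBDA_D * 1e-6, f_d)
    print(f"diffractive f = {f_d:.0f} mm, first zone r = {r1:.2f} mm")
```

Because |V_d| is so small, the diffractive surface only needs to carry about 6% of the total power in this example, so its zones are relatively wide and it can be added to an otherwise refractive surface.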

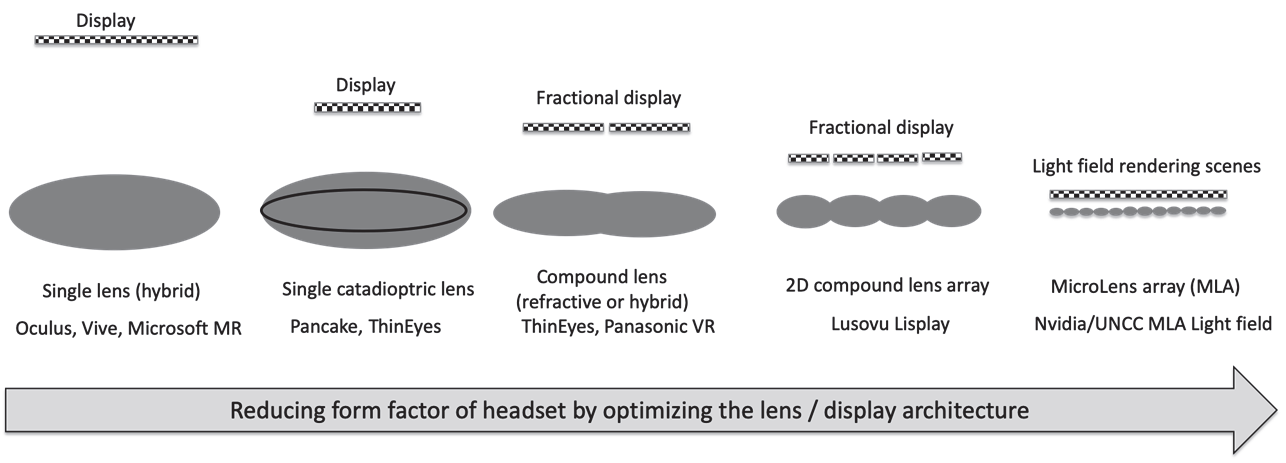

Reducing the weight and size of the lenses is one aspect of wearing comfort. Reducing the distance between the lens and the display is also desirable: it improves the overall form factor and moves the center of gravity back toward the head for greater comfort. Shortening the lens-to-display distance requires increasing the optical power of the lens (that is, reducing its focal length). However, a single strong refractive or Fresnel lens can degrade overall efficiency and MTF. Other lens configurations, in particular compound lens configurations, have therefore been investigated. Figure 3 shows some of these compound lenses (polarization pancakes, multipath compound lenses, and MLAs) compared with a conventional lens.
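The trade-off between focal length, display size, and lens-to-display distance can be made concrete with a paraxial thin-lens magnifier. The sketch assumes the display sits at or just inside the focal plane, so the lens-to-display distance is roughly the focal length f, and a display point at height h leaves the lens at an angle with tan(θ) = h / f. The focal lengths and field angle below are arbitrary illustrations.

```python
import math


def display_half_width(focal_mm: float, half_fov_deg: float) -> float:
    """Display half-width that fills half_fov_deg with the display at the
    focal plane: each point leaves the lens collimated at tan(theta) = h / f."""
    return focal_mm * math.tan(math.radians(half_fov_deg))


def virtual_image_distance(focal_mm: float, display_mm: float) -> float:
    """Distance of the virtual image in front of the lens (thin-lens equation)
    for a display placed inside the focal length (display_mm < focal_mm)."""
    if not 0 < display_mm < focal_mm:
        raise ValueError("display must sit inside the focal length")
    return display_mm * focal_mm / (focal_mm - display_mm)


if __name__ == "__main__":
    for f in (40.0, 25.0):
        w = 2 * display_half_width(f, 50.0)
        print(f"f = {f:.0f} mm: display ~{w:.0f} mm wide, ~{f:.0f} mm from lens")
    print(f"image at {virtual_image_distance(40.0, 38.0):.0f} mm")
```

For a ±50° field, a 40 mm focal length needs a display about 95 mm wide per eye, while a 25 mm focal length needs about 60 mm and brings the display 15 mm closer. Moving the display from the focal plane to 38 mm with f = 40 mm places the virtual image about 0.76 m away. A shorter f is exactly what makes a single lens stronger, and thus thicker and more aberrated.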

Figure 3. Compound VR lens configurations designed to shorten the distance between the display and the lens, thereby reducing headset size.

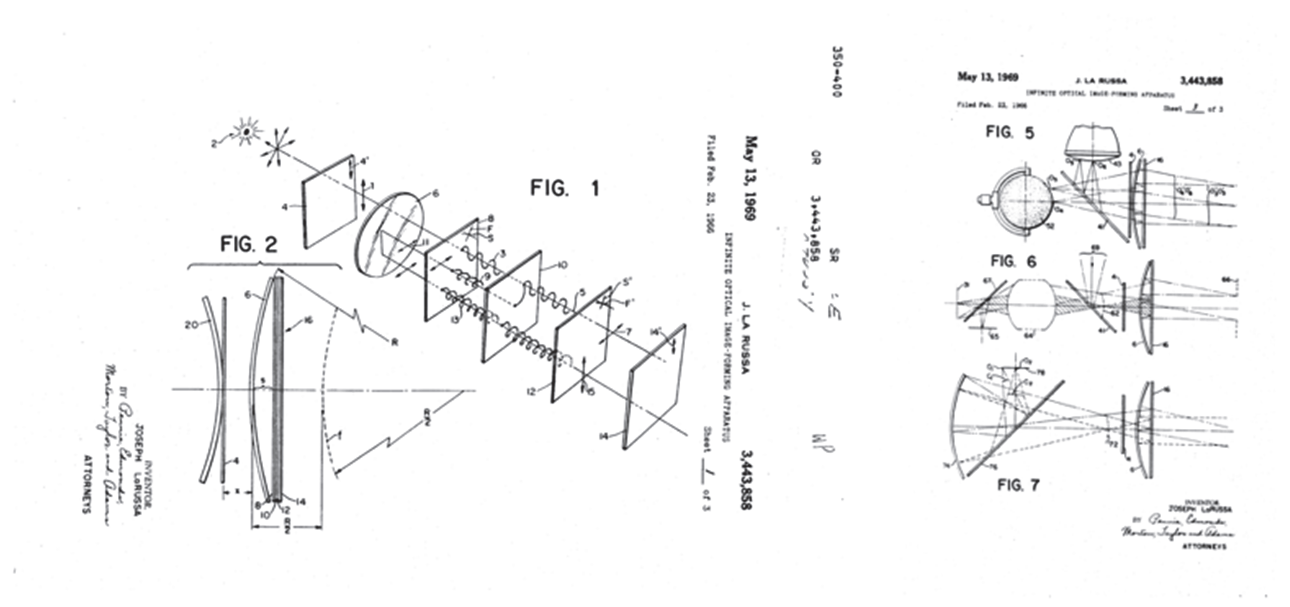

Figure 4. The first polarization-based optical architecture (La Russa, US patent, May 1969), shown both as a direct-view VR configuration (left), the origin of the pancake VR lens concept, and as an AR combiner (right).

Pancake polarization lenses have been studied since 1969 as a way to increase lens power without increasing size or weight. The original air-spaced architecture was proposed for both a direct-view VR mode and an AR mode (see Figure 4). Today, most pancake designs are cemented or use doublets rather than single elements.

Polarization-based optical architectures (such as pancake lens configurations) bring new challenges, such as managing polarization ghosts and developing processes for low-birefringence plastics (casting and thermal annealing rather than injection molding), all at consumer-level cost (see, for example, DloDlo's VR glasses, China). A curved display plane improves pancake lens image quality, as in the eMagin OLED VR prototype, which uses a polished convex fiber-optic faceplate. Notably, optical material suppliers now offer materials that reduce cost and weight compared with the original optical materials. Other interesting lens-stack concepts suit compact VR headsets. One example is Luci (Santa Clara, CA), a division of HT Holding (Beijing), which uses 4K micro-OLED microdisplay panels (3147 ppi) and four separate optically coated lenses per eye.
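The main cost of the folded pancake path is light efficiency. The toy model below assumes ideal quarter-wave plates, a 50/50 partial mirror, and a reflective polarizer described only by a hypothetical leakage fraction `rp_leak` (the parameter names are illustrative). It shows why an ideal pancake passes at most 25% of the display's polarized light, and how polarizer leakage becomes a direct, unfolded ghost. Birefringence in the plastic elements, another ghost source in practice, is not modeled.

```python
def pancake_budget(bs_transmit: float = 0.5, bs_reflect: float = 0.5,
                   rp_leak: float = 0.0, rp_pass: float = 1.0):
    """Idealised light budget of a polarization pancake (toy model).

    Signal path: through the partial mirror, reflected by the reflective
    polarizer, reflected by the partial mirror, then transmitted by the
    reflective polarizer. Ghost path: first-pass light that leaks straight
    through the reflective polarizer without being folded.
    Returns (signal, ghost) as fractions of the display's polarized output.
    """
    signal = bs_transmit * (1.0 - rp_leak) * bs_reflect * rp_pass
    ghost = bs_transmit * rp_leak
    return signal, ghost


def cavity_gap(folded_path_mm: float, passes: int = 3) -> float:
    """Physical gap when the optical path crosses the cavity `passes` times."""
    return folded_path_mm / passes


if __name__ == "__main__":
    s, g = pancake_budget(rp_leak=0.01)
    print(f"signal {s:.1%}, ghost {g:.2%}, ghost/signal {g / s:.1%}")
    print(f"30 mm folded path fits in a {cavity_gap(30.0):.0f} mm gap")
```

Light crosses the cavity between the partial mirror and the reflective polarizer three times, which is where the thickness saving comes from. In this model, even a 1% polarizer leak produces a ghost at about 2% of the signal brightness, which is why leakage and birefringence must be tightly controlled.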

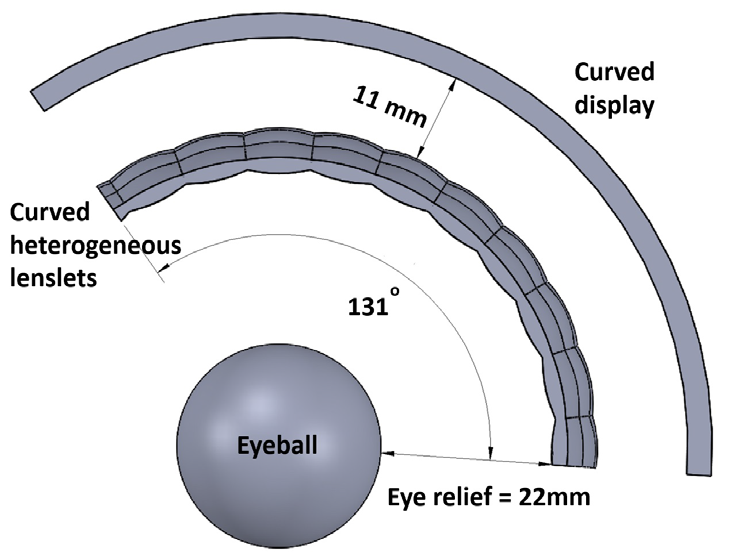

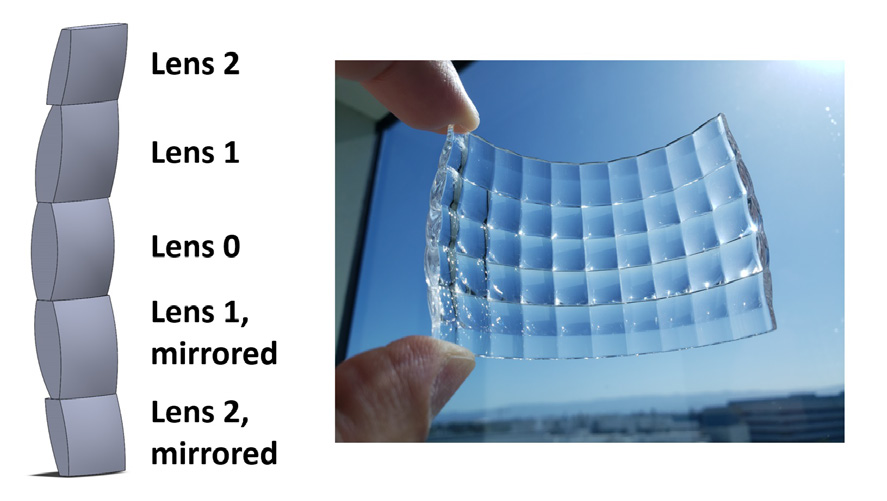

Other interesting optical alternatives have recently been investigated, such as multipath lenses, which offer a smaller form factor while maintaining high resolution across the FOV. The concept is somewhat similar to MLA-based light field displays (integral imaging, proposed in 1908 by Gabriel Lippmann, winner of the 1908 Nobel Prize in Physics). In this case, however, the MLA is reduced to two or four lenslets. The architecture uses multiple separate displays (two in the third case of Figure 3), each showing a partial image; the partial images fuse into a single image when the eye is at the optimal eye relief. When extended to a large number of lenslets, as in an MLA, the architecture approaches the Lisplay architecture from Lusovu (Portugal) or NVIDIA's near-to-eye (NTE) light field VR display architecture (far right of Figure 3). Ronald Azuma's group showed that the ThinVR approach can provide a 180° horizontal FOV in a compact head-mounted VR display. It does so by replacing traditional bulky optics with curved microlens arrays of custom-designed heterogeneous lenslets placed in front of curved displays. This addresses two key limitations of VR displays at once: bulk and limited FOV. Figure 5 shows the ThinVR prototype and a photo of the view through it. Figure 6 shows a scale diagram of the MLA and the right-eye display (left) and a photo of the fabricated MLA. Figure 7 compares the size of ThinVR with the Pimax 8K optical engine.
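The geometry that lets many lenslets tile one continuous view can be sketched in one dimension. Assume a flat lenslet array at eye relief e from the pupil center and a display a gap g behind it. Each lenslet then shows its own elemental image, centered where the chief ray from the eye through that lenslet lands on the display, so the elemental images are spaced slightly wider than the lenslets, by a factor (e + g)/e. This is a simplified paraxial model with hypothetical parameter values. ThinVR's heterogeneous, curved lenslets are designed with full ray tracing, which this sketch does not attempt.

```python
import math


def elemental_image_center(i: int, lens_pitch_mm: float,
                           eye_relief_mm: float, gap_mm: float) -> float:
    """Centre of lenslet i's elemental image on the display (1D, paraxial).

    Follows the chief ray from the pupil centre through the lenslet centre
    and extends it by the lens-to-display gap."""
    x_lens = i * lens_pitch_mm
    return x_lens * (eye_relief_mm + gap_mm) / eye_relief_mm


def elemental_image_pitch(lens_pitch_mm: float, eye_relief_mm: float,
                          gap_mm: float) -> float:
    """Spacing of elemental images: slightly larger than the lenslet pitch."""
    return lens_pitch_mm * (eye_relief_mm + gap_mm) / eye_relief_mm


def flat_array_half_width(eye_relief_mm: float, half_fov_deg: float) -> float:
    """Half-width a flat lenslet array needs to subtend half_fov_deg."""
    return eye_relief_mm * math.tan(math.radians(half_fov_deg))


if __name__ == "__main__":
    centers = [elemental_image_center(i, 1.0, 20.0, 3.0) for i in range(-2, 3)]
    print("elemental image centres (mm):", [round(c, 2) for c in centers])
    for half in (45.0, 65.0, 80.0):
        print(f"+/-{half:.0f} deg needs {flat_array_half_width(20.0, half):.0f} mm per side")
```

The last function hints at why curving the array and display helps at very wide fields. At 20 mm eye relief, a flat array needs 20 mm per side to cover ±45° but about 113 mm per side for ±80°, and the required width diverges as the half-field approaches 90°.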

Figure 5. ThinVR prototype. Right: photo taken through the actual ThinVR prototype, covering the entire FOV of one eye (about 130° horizontally).

Figure 6. Left: scale diagram of the cylindrical lenslet array and the right-eye display. Right: photograph of the fabricated lenslet array.

Figure 7. Volume reduction: comparison of the core optical elements and display components of the ThinVR prototype (bottom) and the Pimax headset (top).

4 Summary

This article has described various novel VR lens configurations that reduce lens size and weight in order to increase the FOV, reduce overall headset size by shortening the lens-to-display distance, or both. With some modifications, these lens configurations can also be used in AR and see-through MR systems.

Figure 8 summarizes the continuum from a single lens, to a single compound lens, to a compound lens array, and finally to a microlens array, together with their implementations in products or prototypes. Many VR and AR companies are working on various combinations of these architectures.

Figure 8. Lens architecture continuum from a single lens to an MLA, with example implementations in VR.

References

[1] www.kessleroptics.com/wp-content/pdfs/Optics-of-Near-toEye-Displays.pdf (slide 15)

[2] www.dlodlo.com/en/v1-summary

[3] B. Narasimhan, "Ultra-compact pancake optics based on ThinEyes super-resolution technology for virtual reality headsets," Proc. SPIE 10676, 106761G (2018).

[4] D. Grabovičkić et al., "Super-resolution optics for virtual reality," Proc. SPIE 10335, 103350G (2017).

[5] J. Ratcliff, A. Supikov, R. Azuma et al., "ThinVR: Heterogeneous microlens arrays for compact, 180 degree FOV VR near-eye displays," IEEE Transactions on Visualization and Computer Graphics, vol. 26, no. 5, May 2020.

[6] B. Kress, "Architectures for Augmented Reality Headsets," SPIE Press (2020).