3D Point Cloud Technology Series (3) - Dr. Junchao Yang

2021-11-09

1. Introduction

Autonomous vehicles use multiple sensors to perceive their environment. Among them, LiDAR has attracted particular attention from autonomous driving researchers because it directly provides an accurate 3D representation of the scene. Waymo grew out of the self-driving car project that Google started in 2009, with the goal of commercializing mature self-driving technology. In 2015, Uber's Advanced Technologies Group began research aimed at fully integrating autonomous driving into its ride-hailing services. To date, companies such as Apple, Baidu, Didi, Lyft, Tesla, and Zoox are also working on autonomous driving. Applications of 3D point clouds in autonomous driving fall into two areas: (1) real-time environment perception and processing, based on scene understanding and object detection; and (2) generation and construction of high-definition (HD) maps and city models that support reliable localization and referencing.

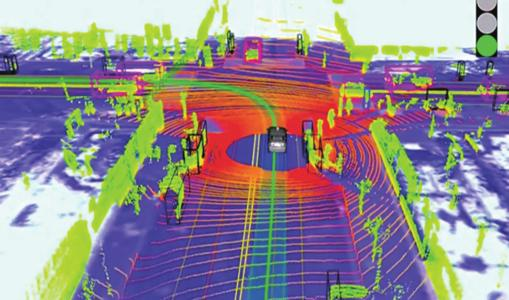

Figure 1. Schematic of autonomous driving based on 3D point clouds

2. Autonomous Driving Systems Based on 3D Point Clouds

2.1 3D Point Cloud Processing in Autonomous Driving Systems

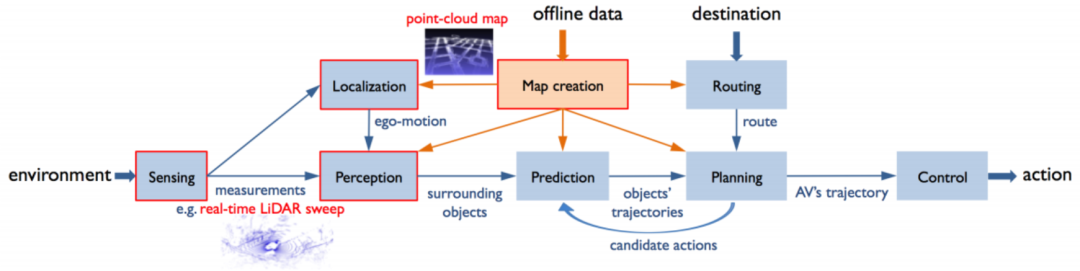

Two kinds of point clouds are commonly used in autonomous vehicles: real-time LiDAR sweeps and point-cloud maps. The point-cloud map provides prior information about the environment and is used in two ways: (1) the localization module registers incoming point clouds against the map, using it as a reference to obtain the vehicle's own pose; (2) the perception module uses the map to separate the foreground from the background. Real-time LiDAR sweeps are registered against the point-cloud map in the localization module and are used by the perception module to detect surrounding objects.

Figure 2. Schematic diagram of an autonomous driving system
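The map-based foreground/background split described above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the method of any particular system: it assumes the sweep has already been registered into the map frame by the localization module, and the function name `split_foreground` and the 0.3 m `threshold` are hypothetical. A sweep point with no nearby map point is treated as foreground (something not present in the static map).

```python
import numpy as np


def split_foreground(sweep, map_points, threshold=0.3):
    """Split a registered sweep into (foreground, background) points.

    sweep:      (N, 3) LiDAR points already expressed in the map frame (assumed).
    map_points: (M, 3) static point-cloud map.
    threshold:  hypothetical distance in metres; a sweep point farther than this
                from every map point is treated as foreground.
    """
    # Brute-force nearest-neighbour distances (N, M); a real system would
    # use a k-d tree or voxel hash instead.
    diff = sweep[:, None, :] - map_points[None, :, :]
    nearest = np.sqrt((diff ** 2).sum(axis=2).min(axis=1))
    is_foreground = nearest > threshold
    return sweep[is_foreground], sweep[~is_foreground]


# Static map: a small patch of ground plane.
map_points = np.array([[x, y, 0.0] for x in range(5) for y in range(5)], dtype=float)
# Sweep: two ground returns that match the map, plus one return from a pedestrian.
sweep = np.array([[1.0, 1.0, 0.0], [3.0, 2.0, 0.05], [2.0, 2.0, 1.5]])
fg, bg = split_foreground(sweep, map_points)
print(fg.tolist())  # [[2.0, 2.0, 1.5]]
print(len(bg))      # 2
```

The threshold trades off map noise against sensitivity to small objects; it is a tuning choice, not a value taken from the article.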

• Real-time LiDAR sweeps

Because of the LiDAR scanning mechanism, we know which laser beam produced each point and the time stamp at which it was captured. A sweep can therefore be organized as a two-dimensional image whose x axis is the time stamp and whose y axis is the laser beam. Viewed this way, each sweep is a structured (organized) point cloud. For example, in a 64-beam LiDAR each beam fires thousands of times per second, so we obtain a sequence of 3D points associated with 64 elevation angles. However, the points are not captured simultaneously: successive firings of one beam happen at different times, and firings of different beams are not synchronized either, so the points do not lie perfectly on a regular two-dimensional lattice. The point density also varies with range, because nearby objects receive many more points than distant ones. Unlike point clouds captured from multiple viewpoints, a sweep is captured from a single viewpoint: objects in front occlude the objects behind them, and distant objects receive too few points to reveal their detailed shape. This is the type of 3D data used in the KITTI object detection benchmark, and it usually includes the reflective intensity of each point.
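The "sweep as a 2D image" idea above can be sketched as a range-image projection. This is a minimal sketch under assumptions: each point comes with an integer beam index (`ring`), the azimuth angle stands in for the firing time stamp, and the function name `to_range_image` and the image size are hypothetical. Binning by azimuth snaps the slightly unsynchronized points onto a regular grid, and when two points fall into one cell the nearer one is kept, mirroring occlusion.

```python
import numpy as np


def to_range_image(points, ring, num_beams=64, num_cols=2048):
    """Organize one sweep as a 2D image: rows = laser beam, columns = azimuth.

    points: (N, 3) xyz in the sensor frame.
    ring:   (N,) integer beam index of each point, in [0, num_beams).
    Empty cells hold 0. If several points land in one cell, the nearest is
    kept, since a closer return occludes a farther one.
    """
    rng = np.linalg.norm(points, axis=1)
    # Azimuth in [0, 2*pi) is used here as a proxy for the firing time stamp.
    azimuth = np.mod(np.arctan2(points[:, 1], points[:, 0]), 2 * np.pi)
    col = np.minimum((azimuth / (2 * np.pi) * num_cols).astype(int), num_cols - 1)
    image = np.full((num_beams, num_cols), np.inf)
    # np.minimum.at applies the minimum correctly for repeated (row, col) pairs.
    np.minimum.at(image, (ring, col), rng)
    image[np.isinf(image)] = 0.0
    return image


pts = np.array([[10.0, 0.0, 0.0], [5.0, 0.0, 0.0], [0.0, 10.0, 0.0]])
ring = np.array([3, 3, 5])
img = to_range_image(pts, ring, num_beams=8, num_cols=4)
print(img[3, 0], img[5, 1])  # 5.0 10.0
```

Once a sweep is in this form, standard 2D operations (convolutions, neighbourhood lookups by row and column) can be applied, which is one reason the organized view is convenient.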

• Point-cloud maps

A point-cloud map is built by aggregating real-time LiDAR sweeps collected by multiple autonomous vehicles over time, which yields detailed 3D shapes. Because there is no natural way to organize the aggregated points, the map is an unordered (irregular) point cloud. Fusing multiple views largely removes the occlusion problem, and the more detailed, more complete geometry makes it possible to extract more accurate semantic information. A typical point-cloud map does not store point intensity, but it does store the normal vector of each point.
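Aggregating sweeps into a map can be sketched as: transform each sweep into a common map frame using its pose, concatenate, and thin the result with a voxel grid. This is a simplified sketch under assumptions: the per-sweep poses (4x4 sensor-to-map transforms) are taken as already known, and `transform`, `build_map`, and the `voxel` size are hypothetical names and values. Normal estimation, which the map would also carry, is not shown.

```python
import numpy as np


def transform(points, pose):
    """Apply a 4x4 homogeneous pose (sensor -> map) to (N, 3) points."""
    homo = np.hstack([points, np.ones((len(points), 1))])
    return (homo @ pose.T)[:, :3]


def build_map(sweeps, poses, voxel=0.5):
    """Merge posed sweeps into one cloud, keeping one point per voxel.

    voxel is a hypothetical grid size in metres; every occupied voxel is
    replaced by the centroid of the points that fall inside it.
    """
    merged = np.vstack([transform(s, p) for s, p in zip(sweeps, poses)])
    keys = np.floor(merged / voxel).astype(np.int64)
    _, inverse, counts = np.unique(keys, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)  # keep 1-D across NumPy versions
    centroids = np.zeros((len(counts), 3))
    np.add.at(centroids, inverse, merged)  # sum the points in each voxel
    return centroids / counts[:, None]


# Two sweeps of the same scene taken from poses 1 m apart along x.
pose_a = np.eye(4)
pose_b = np.eye(4)
pose_b[0, 3] = 1.0
sweep_a = np.array([[1.0, 0.0, 0.0]])
sweep_b = np.array([[0.0, 0.0, 0.0], [3.0, 0.0, 0.0]])
scene_map = build_map([sweep_a, sweep_b], [pose_a, pose_b])
print(scene_map.tolist())  # [[1.0, 0.0, 0.0], [4.0, 0.0, 0.0]]
```

The first point of `sweep_b` lands on the same map location as `sweep_a`'s point, so the two observations collapse into one voxel; this is the sense in which multiple views fill in and reinforce the map.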

2.2 Localization in Autonomous Driving Systems Based on 3D Point Clouds

Localization in autonomous driving must be highly precise: the translation error should be at the centimeter level, and the rotation-angle error at the microradian level. Localization must also be robust, so that it works reliably across the different scenarios an autonomous vehicle encounters.
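One way to see why the rotation tolerance is so much tighter than the translation tolerance is to convert an angular error into a position error: a heading error of θ displaces a point at distance d by the chord length 2d·sin(θ/2), which is approximately d·θ for small angles. The small helper below is a hypothetical illustration of that arithmetic, not part of any localization system.

```python
import math


def lateral_error(distance_m: float, yaw_error_rad: float) -> float:
    """Displacement of a point at distance_m caused by a rotation error.

    Exact chord length 2*d*sin(theta/2); for small theta this is about d*theta.
    """
    return 2.0 * distance_m * math.sin(yaw_error_rad / 2.0)


for theta in (1e-3, 1e-4, 1e-6):
    print(f"{theta:.0e} rad at 100 m -> {lateral_error(100.0, theta) * 100:.4f} cm")
```

At 100 m, a 1 mrad error already moves a point by about 10 cm, and even 0.1 mrad moves it by about 1 cm, so keeping far-away map points aligned to centimeter accuracy requires very small angular errors.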

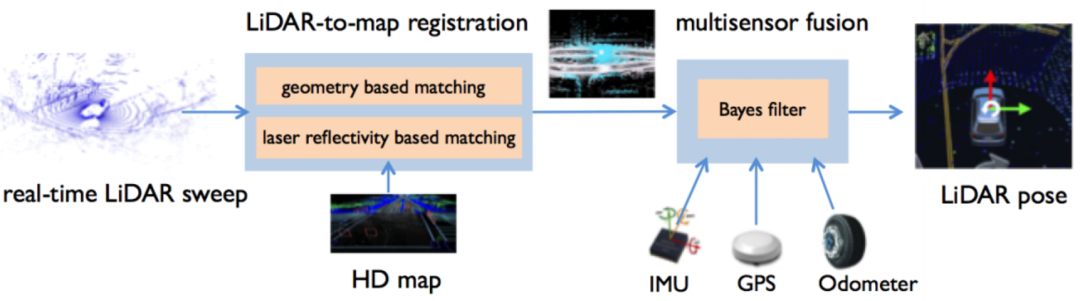

Figure 3. Schematic diagram of the localization module of an autonomous driving system

As shown in Figure 3, a standard localization module consists of two core components: point cloud-to-map registration and multi-sensor fusion. Registration obtains an initial pose by matching the real-time LiDAR sweep against the HD map. Multi-sensor fusion then further improves the confidence and robustness of the pose estimate. It combines measurements from multiple sensors, including the IMU, GPS, odometer, and cameras, with the initial pose produced by the LiDAR-to-map registration.
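The article does not say which registration algorithm is used. As one common choice, the sketch below uses point-to-point ICP (Iterative Closest Point) with the SVD-based (Kabsch) rigid fit; treat it as an illustrative swap-in, not the specific method behind Figure 3. The names `best_rigid_transform` and `icp`, the brute-force matching, and the fixed iteration count are simplifying assumptions; production systems add k-d trees, outlier rejection, and convergence checks.

```python
import numpy as np


def best_rigid_transform(src, dst):
    """Least-squares rotation r and translation t with dst ~ src @ r.T + t (Kabsch)."""
    cs, cd = src.mean(axis=0), dst.mean(axis=0)
    h = (src - cs).T @ (dst - cd)
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))  # guard against reflections
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    return r, cd - r @ cs


def icp(sweep, map_points, iterations=30):
    """Point-to-point ICP: return a 4x4 pose that aligns the sweep to the map."""
    pose = np.eye(4)
    current = sweep.copy()
    for _ in range(iterations):
        # Match every sweep point to its nearest map point (brute force).
        d2 = ((current[:, None, :] - map_points[None, :, :]) ** 2).sum(axis=2)
        matched = map_points[d2.argmin(axis=1)]
        r, t = best_rigid_transform(current, matched)
        current = current @ r.T + t
        step = np.eye(4)
        step[:3, :3], step[:3, 3] = r, t
        pose = step @ pose  # accumulate the incremental alignments
    return pose


# Toy map: a 4 x 4 x 3 grid of points with 1 m spacing.
grid = np.array([[x, y, z] for x in range(4) for y in range(4) for z in range(3)], dtype=float)
theta = np.deg2rad(1.0)
R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta), np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.1, -0.05, 0.02])
sweep = (grid - t_true) @ R_true  # the map as seen from a slightly offset pose
pose = icp(sweep, grid)
print(np.round(pose, 4))
```

Because ICP relies on nearest-neighbour matching, it needs a reasonable starting guess; here the offset is small compared with the point spacing, which is why the toy example converges.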

The standard approach to multi-sensor fusion is Bayesian filtering, for example the Kalman filter, the extended Kalman filter, or the particle filter. A Bayes filter iteratively predicts and corrects the LiDAR pose and other states using a model of the vehicle's motion dynamics together with readings from multiple sensors. In autonomous driving, the states tracked and estimated by the Bayes filter usually include motion-related states such as pose, velocity, and acceleration, as well as sensor-related states such as IMU biases.

A Bayes filter alternates between two steps: prediction and correction. In the prediction step, which covers the interval between sensor readings, the filter propagates the state according to the vehicle motion model and the assumed sensor model. In the correction step, when a sensor reading or pose measurement arrives, the filter corrects the state using the corresponding observation model.
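The predict/correct cycle can be illustrated with the simplest member of the family, a linear Kalman filter. This sketch deliberately reduces the problem: the state is only [position, velocity] along one axis under a constant-velocity motion model, the measurement is a position fix (standing in for, e.g., a LiDAR-to-map pose), and the class name `ConstantVelocityKF` and the noise parameters `q` and `r` are hypothetical. A real localization filter would track full 3D pose, velocity, acceleration, and IMU biases, typically with an extended Kalman filter.

```python
import numpy as np


class ConstantVelocityKF:
    """Linear Kalman filter over x = [position, velocity] along one axis."""

    def __init__(self, q=0.1, r=0.5):
        self.x = np.zeros(2)             # state estimate
        self.P = np.eye(2) * 10.0        # covariance: start very uncertain
        self.q = q                       # process-noise intensity (assumed)
        self.R = np.array([[r]])         # measurement-noise variance (assumed)
        self.H = np.array([[1.0, 0.0]])  # observation model: position only

    def predict(self, dt):
        """Prediction step: propagate the state with the motion model."""
        F = np.array([[1.0, dt], [0.0, 1.0]])
        Q = self.q * np.array([[dt**3 / 3, dt**2 / 2], [dt**2 / 2, dt]])
        self.x = F @ self.x
        self.P = F @ self.P @ F.T + Q

    def correct(self, z):
        """Correction step: fuse a position measurement z."""
        y = np.array([z]) - self.H @ self.x      # innovation
        S = self.H @ self.P @ self.H.T + self.R  # innovation covariance
        K = self.P @ self.H.T @ np.linalg.inv(S) # Kalman gain
        self.x = self.x + K @ y
        self.P = (np.eye(2) - K @ self.H) @ self.P


# Vehicle moving at 2 m/s; noise-free position fixes every 0.1 s.
kf = ConstantVelocityKF()
dt = 0.1
for k in range(1, 201):
    kf.predict(dt)
    kf.correct(2.0 * k * dt)
print(kf.x)  # close to [40.0, 2.0]
```

Note that velocity is never measured directly: the filter infers it from the sequence of position corrections through the motion model, which is the same mechanism that lets a real filter estimate unobserved states such as IMU biases.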

3. Conclusion

3D point clouds capture the geometric structure and depth of the surrounding environment, which has made point cloud-based methods the mainstream approach to 3D perception, achieving state-of-the-art (SoTA) performance. This article has summarized the key 3D point cloud technologies in autonomous driving. Subsequent articles in this series will analyze other classic 3D point cloud processing methods and their industrial applications in detail.