Medical Image Fusion Technology Based on Neural Networks - Dr. Yao Wei

2021-11-09

To improve the utilization of medical image information and enhance accuracy, medical images of the same tissue or organ are usually collected in several different modalities. An appropriate algorithm or network structure is then selected, and image fusion technology is used to combine as much of the valid information contained in the single-modality images as possible, producing a fused image that is rich in information. In recent years, medical image fusion has gradually become a research focus in medical image processing [1]. In general, image fusion should follow three principles [2]: first, the fused image should not lose the important feature information of the source images; second, the fused image should contain only information that comes from the source images and the imaging devices; third, invalid or interfering information that degrades fusion quality should be removed from the fused image as far as possible.

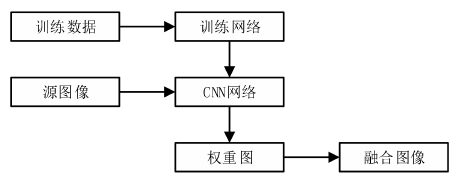

Over the past ten years, deep learning has driven great progress in image fusion. In medical image fusion, deep learning methods show advantages over traditional fusion algorithms in feature extraction and image recognition. Deep learning models can represent medical images, handle curved shapes, and provide high-quality details, and their strong feature learning ability benefits the feature extraction stage of fusion. The fusion weights are usually derived from how certain visual features, such as pixel variance, color saturation, and contrast, vary spatially between the images, so that the weights adapt to the content at each position and prioritize the more informative features [3]. Both the features of the decomposed images and the distribution of pixel weights affect the fusion result. Liu et al. [4] obtained and assigned the optimal weights by learning the parameters of a convolutional neural network (CNN). The CNN-based fusion process is shown in Figure 1: the CNN generates a weight map from the source images and outputs the normalized weight distribution given by its neurons.
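The role of a normalized weight map can be illustrated with a minimal sketch. It does not reproduce the CNN of Liu et al. [4]: as an assumption, the weights here come from a hand-crafted activity measure (local pixel variance, one of the visual features mentioned above) instead of learned network parameters. The function names and the 4x4 test images are hypothetical, and plain Python lists are used only to keep the example dependency-free.

```python
# Illustrative sketch: weights come from local variance, NOT from a trained CNN.

def local_variance(img, radius=1):
    """Variance of each pixel's neighbourhood, with the window clipped at the borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - radius), min(h, y + radius + 1))
                    for i in range(max(0, x - radius), min(w, x + radius + 1))]
            mean = sum(vals) / len(vals)
            out[y][x] = sum((v - mean) ** 2 for v in vals) / len(vals)
    return out


def weight_map(img_a, img_b, eps=1e-12):
    """Per-pixel weight of img_a in [0, 1]; img_b implicitly receives 1 - w."""
    var_a, var_b = local_variance(img_a), local_variance(img_b)
    return [[(a + eps) / (a + b + 2 * eps) for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(var_a, var_b)]


def fuse_weighted(img_a, img_b, weights):
    """Pixel-wise convex combination F = W * A + (1 - W) * B."""
    return [[w * a + (1.0 - w) * b for a, b, w in zip(row_a, row_b, row_w)]
            for row_a, row_b, row_w in zip(img_a, img_b, weights)]


# Hypothetical inputs: a checkerboard (high local variance) and a flat image.
textured = [[float((x + y) % 2) for x in range(4)] for y in range(4)]
flat = [[0.5] * 4 for _ in range(4)]

weights = weight_map(textured, flat)
fused = fuse_weighted(textured, flat, weights)
```

Because the flat image has zero local variance, almost all of the weight goes to the textured image, and the fused result is practically identical to it. A learned weight map plays the same role but replaces the variance heuristic with features extracted by the network.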

Figure 1 Image fusion process based on CNN

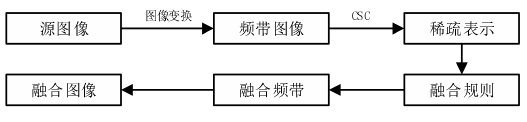

The first step in medical image fusion is a multi-scale transform, and its result strongly influences the subsequent feature extraction and the design of the fusion rules. Obtaining an appropriate transform through deep learning is therefore a key point in medical image fusion. To address the information loss caused by the low output dimension of a CNN, a HeK-based method is used to initialize the convolution kernels of all layers except the first. In the first layer, a Laplacian of Gaussian filter and a Gaussian filter decompose the source image into sub-images; during fusion, the image is adaptively decomposed into a high-frequency component and a low-frequency component and then reconstructed. The fusion method based on sparse representation (SR) [5] has two disadvantages: limited ability to retain detail features, and sensitivity to position deviation (misregistration). Liu et al. [6] therefore proposed an image fusion method based on convolutional sparse representation (CSR), which decomposes each source image into a base layer and a detail layer for multimodal fusion; the results showed a significantly better fusion effect than the original SR-based method. Figure 2 shows the CSR-based fusion process. First, a specific image transform is applied to the source images. Convolutional sparse coding (CSC) is then performed on the selected transform bands using a set of dictionary filters learned offline. The image transform and the fusion rule are chosen to suit the specific fusion problem, and the final fusion quality also depends on how the training samples are generated and how the learning parameters are set in the learning stage.
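The base-layer and detail-layer idea can be sketched as follows. This is a simplified stand-in, not the CSR method of Liu et al. [6]: as assumptions, a box filter produces the base layer, base layers are averaged, and detail layers are merged with a maximum-absolute-value rule applied directly to the detail pixels, where the CSR method would instead compare convolutional sparse coefficients obtained with learned dictionary filters. All names and test images are hypothetical.

```python
# Illustrative two-scale fusion: box-filter decomposition, no sparse coding.

def box_blur(img, radius=1):
    """Mean of each pixel's neighbourhood, with the window clipped at the borders."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - radius), min(h, y + radius + 1))
                    for i in range(max(0, x - radius), min(w, x + radius + 1))]
            out[y][x] = sum(vals) / len(vals)
    return out


def decompose(img, radius=1):
    """Split an image into a smooth base layer and a detail layer (img - base)."""
    base = box_blur(img, radius)
    detail = [[p - b for p, b in zip(row_p, row_b)]
              for row_p, row_b in zip(img, base)]
    return base, detail


def fuse_two_scale(img_a, img_b, radius=1):
    """Average the base layers, keep the stronger detail at each pixel."""
    base_a, detail_a = decompose(img_a, radius)
    base_b, detail_b = decompose(img_b, radius)
    fused = []
    for ba, bb, da, db in zip(base_a, base_b, detail_a, detail_b):
        row = []
        for pa, pb, qa, qb in zip(ba, bb, da, db):
            base = 0.5 * (pa + pb)                    # assumed base-layer rule
            detail = qa if abs(qa) >= abs(qb) else qb  # assumed detail-layer rule
            row.append(base + detail)
        fused.append(row)
    return fused


# Hypothetical inputs: an image with a vertical edge and a flat image.
edge = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
flat = [[0.5] * 4 for _ in range(4)]

fused = fuse_two_scale(edge, flat)
```

The flat image contributes no detail, so the edge from the first image survives in the fused result while the overall brightness is averaged between the two inputs.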

Figure 2 Image fusion process based on CSR

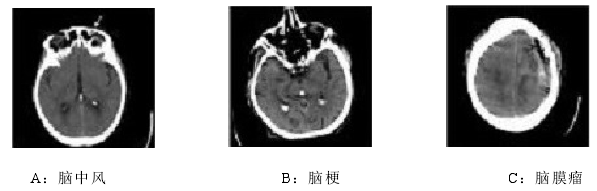

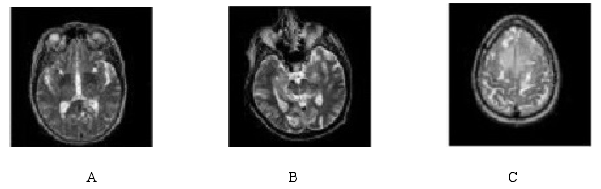

Reference [7] builds a medical image fusion algorithm that combines the Laplacian pyramid with a convolutional neural network (LPCNN). To improve fusion performance, the source images are decomposed with a Laplacian pyramid, and the CNN is improved using the idea of the support vector machine so that it does not rely on empirically chosen initialization parameters; image features are then extracted effectively to generate the optimal weight map W. The loss of image information is reduced by removing the pooling and sampling layers of the CNN.

In reference [7], CT and MR images of several brain diseases were selected from the Whole Brain Atlas dataset of Harvard Medical School. By combining the characteristics of the two modalities, the fused image carries richer information, shows the relevant features more clearly, and provides a more accurate basis for clinical diagnosis. A CT image uses gray levels to represent the tissues and organs in a cross-sectional plane. The darker the gray level, the less the tissue or organ absorbs the rays; the brighter the gray level, the more it absorbs, and bright regions are concentrated mainly in bones and other relatively dense parts. Magnetic resonance (MR) imaging obtains electromagnetic signals through resonance between a magnetic field and the human body, and uses them to construct an image of the body. Figures 3 and 4 show CT and MR images of several brain diseases, and Figure 5 shows the result of fusing them in reference [7].
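The interplay between a Laplacian pyramid and a weight map W can be sketched as follows. This is a sketch under stated assumptions, not the LPCNN algorithm of reference [7]: a 2x2 block mean stands in for the Gaussian kernel of the classical pyramid, upsampling is pixel repetition, W is supplied by hand instead of by a CNN, and each pyramid level is blended with a correspondingly downsampled copy of W. All function names and test images are hypothetical.

```python
# Illustrative Laplacian-style pyramid fusion; W is hand-made, not CNN output.

def downsample(img):
    """Halve both dimensions by averaging 2x2 blocks (dimensions must be even)."""
    return [[(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4.0
             for x in range(0, len(img[0]), 2)]
            for y in range(0, len(img), 2)]


def upsample(img):
    """Double both dimensions by repeating every pixel in a 2x2 block."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(2)]
        out.extend([wide, list(wide)])
    return out


def build_pyramid(img, levels):
    """Return [detail_0, ..., detail_{levels-1}, coarse residual]."""
    pyramid, current = [], img
    for _ in range(levels):
        smaller = downsample(current)
        up = upsample(smaller)
        pyramid.append([[c - u for c, u in zip(rc, ru)]
                        for rc, ru in zip(current, up)])
        current = smaller
    pyramid.append(current)
    return pyramid


def reconstruct(pyramid):
    """Invert build_pyramid by upsampling and adding the details back."""
    current = pyramid[-1]
    for detail in reversed(pyramid[:-1]):
        up = upsample(current)
        current = [[d + u for d, u in zip(rd, ru)]
                   for rd, ru in zip(detail, up)]
    return current


def fuse_pyramid(img_a, img_b, w, levels=2):
    """Blend each pyramid level with the weight map at the matching resolution."""
    pyr_a, pyr_b = build_pyramid(img_a, levels), build_pyramid(img_b, levels)
    pyr_w, current = [w], w
    for _ in range(levels):
        current = downsample(current)
        pyr_w.append(current)
    fused = [[[wv * a + (1.0 - wv) * b for a, b, wv in zip(ra, rb, rw)]
              for ra, rb, rw in zip(la, lb, lw)]
             for la, lb, lw in zip(pyr_a, pyr_b, pyr_w)]
    return reconstruct(fused)


# Hypothetical 4x4 stand-ins for a CT slice and an MR slice.
ct = [[float(4 * y + x) for x in range(4)] for y in range(4)]
mr = [[float((x * y) % 3) for x in range(4)] for y in range(4)]
half = [[0.5] * 4 for _ in range(4)]

fused = fuse_pyramid(ct, mr, half, levels=2)
```

With a constant weight of 0.5 the result is simply the average of the two inputs, and a weight map of all ones returns the first image unchanged. The benefit of the pyramid appears when W varies across the image, because each frequency band is then blended at its own resolution.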

Figure 3 CT images of three groups of brain diseases

Figure 4 MR images of three groups of brain diseases

Figure 5 Fusion results of the algorithm in reference [7]

The experimental results show that the algorithm in reference [7] fuses the images well: the fused images are sharp, and the soft-tissue information from the MR source images is notably well preserved.

[1] Kai-jian Xia, Hong-sheng Yin, Jiang-qiang Wang. A novel improved deep convolutional neural network model for medical image fusion[J]. Cluster Computing, 2019, 22(1): 1515-1527.

[2] Haithem Hermessi, Olfa Mourali, Ezzeddine Zagrouba. Convolutional neural network-based multimodal image fusion via similarity learning in the shearlet domain[J]. Neural Computing and Applications, 2018, 30(7): 2029-2045.

[3] Xue Zhanqi, Wang Yuanjun. Progress in multi-modal medical image fusion based on deep learning[J]. Chinese Journal of Medical Physics, 2020, 37(05): 579-583.

[4] Liu Y, Chen X, Peng H, et al. Multi-focus image fusion with a deep convolutional neural network[J]. Information Fusion, 2017, 36: 191-207.

[5] Wang Lifang, Dong Xia, Qin Pinle, Gao Yuan. Multi-modal image fusion based on adaptive joint dictionary learning[J]. Journal of Computer Applications, 2018, 38(04): 1134-1140.

[6] Liu Y, Chen X, Ward R K, et al. Image fusion with convolutional sparse representation[J]. IEEE Signal Processing Letters, 2016, 23(12): 1882-1886.

[7] Yao Xu. Research on medical image fusion algorithm based on deep convolutional neural network[D]. Harbin University of Science and Technology, 2021.