Human-computer interaction technology in virtual reality - human pose estimation technology - Dr. Peng Fulai

2021-11-09

1. Introduction

Interactivity is one of the three defining characteristics of virtual reality. It refers to the way users interact, conveniently and naturally, with computer-generated objects in the virtual world through interactive devices; two-way perception between the user and the virtual environment establishes a more natural and harmonious human-computer environment. This article introduces virtual reality interaction technology based on human pose estimation.

Figure 1. Human pose estimation

2. Human pose estimation technology

Human pose estimation techniques can be roughly divided into two categories: traditional methods and deep learning based methods. Traditional methods design 2D body part detectors based on pictorial structures and deformable part models, use a graphical model to establish the connectivity between parts, and estimate the pose by optimizing the graph structure model under the relative constraints of human kinematics. Although traditional methods are time-efficient, the features they extract are mainly hand-crafted, so they cannot make full use of the image information. As a result, these algorithms are limited by variations in appearance, viewing angle, and occlusion, and by the inherent geometric ambiguity of images. Human pose estimation based on deep learning has been studied intensively in recent years and has achieved high recognition accuracy; it is currently the mainstream approach. This article focuses on human pose estimation based on deep learning.

Human pose estimation based on deep learning can be divided into three categories: coordinate regression methods, heatmap regression methods, and coordinate regression methods that use a heatmap representation.

2.1 Method based on coordinate regression

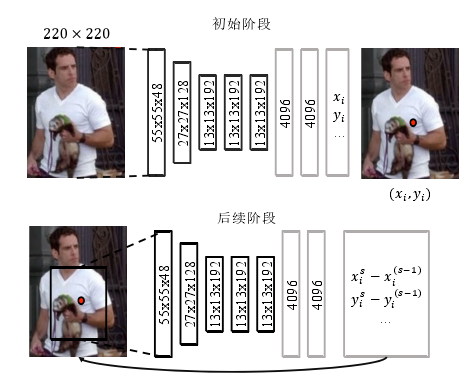

Coordinate regression methods are mainly represented by the DeepPose network, which laid the foundation for coordinate regression and gave rise to many practical data preprocessing and network training techniques. As the first deep learning method for human pose estimation, DeepPose designs its CNN around multi-stage regression and takes the coordinates as the optimization target, directly regressing the two-dimensional coordinates of the human skeleton joints. The initial stage produces an approximate position for each joint, and the subsequent stages refine the joint coordinates step by step. To improve accuracy, before each subsequent stage the authors crop a small sub-image from the neighborhood centered on the current joint estimate and use it as the input to that stage, which gives the network more image detail around the joint for correcting its coordinates. The algorithm flow is shown in Figure 2.

Figure 2. DeepPose network architecture

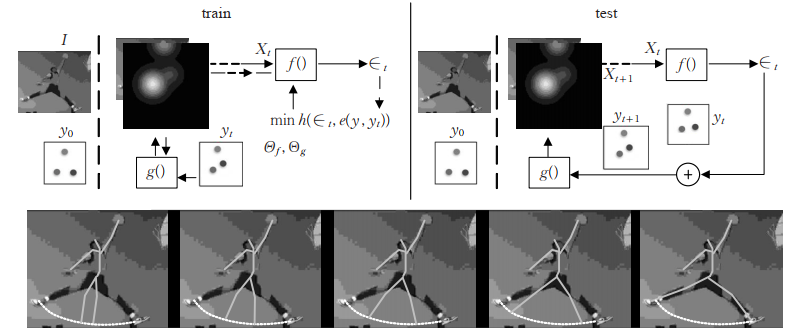
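The DeepPose refinement loop described above (crop around the current estimate, predict a correction, repeat) can be sketched as follows. This is only an illustration under stated assumptions: `crop_around`, `cascade_refine`, and `toy_regressor` are hypothetical names, and `toy_regressor` is a stand-in for a trained CNN stage, not part of DeepPose itself.

```python
import numpy as np

def crop_around(image, center, size):
    """Return a size x size patch centred on `center` = (x, y), zero-padded at borders."""
    half = size // 2
    h, w = image.shape
    padded = np.pad(image, ((half, half), (half, half)), mode="constant")
    x = int(np.clip(np.rint(center[0]), 0, w - 1))
    y = int(np.clip(np.rint(center[1]), 0, h - 1))
    # Original pixel (y, x) sits at (y + half, x + half) after padding,
    # so the patch centred on it starts at (y, x) in padded coordinates.
    return padded[y:y + size, x:x + size]

def cascade_refine(image, initial_xy, stage_regressors, size=32):
    """Refine one joint: each stage sees a crop around the current estimate
    and returns a displacement (dx, dy) in pixels."""
    xy = np.asarray(initial_xy, dtype=float)
    for regress in stage_regressors:
        patch = crop_around(image, xy, size)
        xy = xy + regress(patch)
    return xy

def toy_regressor(patch):
    """Assumption: stands in for a trained stage by pointing from the
    patch centre to the brightest pixel."""
    py, px = np.unravel_index(np.argmax(patch), patch.shape)
    half = patch.shape[0] // 2
    return np.array([px - half, py - half], dtype=float)

image = np.zeros((64, 64))
image[40, 25] = 1.0  # toy "joint" at x=25, y=40
print(cascade_refine(image, (20, 35), [toy_regressor, toy_regressor]))  # [25. 40.]
```

Because each stage only sees a small neighborhood, it can work at a finer effective resolution than a stage that looks at the whole image.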

To improve coordinate-regression-based human pose estimation, Iterative Error Feedback (IEF) was proposed. In this method, the current joint coordinates are converted into heatmaps, and these heatmaps, which represent the joint positions, are fed into the network together with the human body image; the network regresses an offset for each joint. After several iterations, the joint positions are gradually regressed. IEF's multi-stage regression adopts a different training strategy: unlike DeepPose and similar methods, which train all stages end-to-end within each epoch, IEF divides training into four stages and runs three complete epochs in each stage. IEF therefore does not perform gradient descent on the total coordinate error in each stage; it takes only part of the total error as the target of that stage, which amounts to step-by-step regression in which the result of correcting part of the error is fed back to the network. If direct regression teaches the model to approximate coordinates over a long range, stepwise regression trains the network to approximate coordinates over a short range more accurately. At test time, IEF approaches the true value through three stages of stepwise regression. The algorithm flow is shown in Figure 3.

Figure 3. Schematic diagram of IEF algorithm
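The idea of taking only part of the total error as the target of each step can be sketched as a bounded correction. The sketch below is a simplification under stated assumptions: the cap `max_step=20.0` is an illustrative value, the function names are hypothetical, and the "predictor" is an oracle that knows the target, whereas in IEF the correction is predicted by a network from the image and the current heatmaps.

```python
import numpy as np

def bounded_correction(target_xy, current_xy, max_step=20.0):
    """Correction toward the target whose length is capped at max_step pixels."""
    error = np.asarray(target_xy, dtype=float) - np.asarray(current_xy, dtype=float)
    norm = np.linalg.norm(error)
    if norm <= max_step:
        return error
    return error * (max_step / norm)

def iterate_feedback(start_xy, predict_correction, steps=3):
    """Apply the predicted correction repeatedly and record every estimate."""
    xy = np.asarray(start_xy, dtype=float)
    trajectory = [xy.copy()]
    for _ in range(steps):
        xy = xy + predict_correction(xy)
        trajectory.append(xy.copy())
    return trajectory

# Assumption: an oracle predictor stands in for the trained network.
target = np.array([30.0, 40.0])
path = iterate_feedback((0.0, 0.0), lambda xy: bounded_correction(target, xy))
for point in path:
    print(point)
```

With a start 50 pixels from the target and a 20-pixel cap, the estimate moves 20, 20, and then the remaining 10 pixels, so each step only has to solve a short-range problem.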

2.2 Method based on heatmap regression

Differences in body scale, varying camera angles, complex lighting conditions, arbitrary occlusion, and similar problems make the mapping that human pose estimation must learn more complex. Compared with coordinate regression, heatmap regression retains more of the information in the image, so most mainstream human pose estimation methods are based on heatmap regression. This section summarizes heatmap-regression-based human pose estimation from three aspects: the introduction of prior knowledge, the improvement of network architecture, and the modeling of relationships between joints.

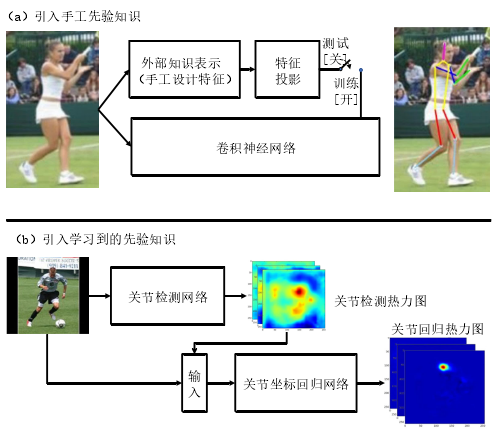
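To make the heatmap representation concrete, the sketch below builds a target heatmap for one joint and decodes it again by taking the peak. The Gaussian shape and the value `sigma=2.0` are assumptions chosen for illustration (a common choice, but not stated in this article), and the function names are hypothetical.

```python
import numpy as np

def gaussian_heatmap(height, width, joint_xy, sigma=2.0):
    """Heatmap with a 2D Gaussian bump centred on the joint position (x, y)."""
    xs = np.arange(width)[None, :]
    ys = np.arange(height)[:, None]
    d2 = (xs - joint_xy[0]) ** 2 + (ys - joint_xy[1]) ** 2
    return np.exp(-d2 / (2.0 * sigma ** 2))

def decode_argmax(heatmap):
    """Read the joint position back as the location of the largest value."""
    y, x = np.unravel_index(np.argmax(heatmap), heatmap.shape)
    return np.array([x, y], dtype=float)

heatmap = gaussian_heatmap(48, 64, (20, 10))
print(heatmap.shape, decode_argmax(heatmap))
```

Unlike a bare coordinate pair, the heatmap keeps the same spatial layout as the image, which is why the network can learn an image-to-image mapping.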

2.2.1 Introduction of prior knowledge

Prior knowledge can be introduced in two forms: hand-crafted prior knowledge and learned prior knowledge, as shown in Figure 4. Hand-crafted prior knowledge is introduced mainly by feeding traditional Hough transform features and histograms of oriented gradients into the convolutional neural network as priors, with a gating mechanism controlling the knowledge that enters the network during training, so that the model can capture geometric constraints and image description information while retaining its performance. The other approach is to introduce tasks related to human pose estimation. When the heatmap generated by a human body detection task is fed into the network together with the original image, the heatmap guides the network toward the locations of the body joints, which makes those locations easier to learn.

Figure 4. Introduction of prior knowledge

2.2.2 Improvement of network structure

Improvements to the network architecture for human pose estimation can be divided into four aspects: multi-scale feature extraction, improvement of the backbone model, introduction of attention mechanisms, and relationship modeling between joints (the last of which is discussed in Section 2.2.3).

Multi-scale feature extraction: There are three typical approaches: introducing a pyramid residual module, using a cascaded feature extraction module, and fusing low-resolution and high-resolution features. The pyramid residual module builds on the original residual module: the residual features are split into several branches, each branch uses a downsampling layer with a different scaling ratio to produce features at a different scale, the branch outputs are then upsampled back to the same size, and finally the features from all scales are fused into a multi-scale feature. The cascaded feature extraction module first takes three images of different resolutions as input and extracts three features of different sizes, then gradually fuses the low-resolution features with the high-resolution features to obtain multi-scale features. Both of these operate at the module level. The remaining approach works at the level of network architecture. Within the feature extraction network, low-level and high-level features are fused (high-level features have lower resolution and must be upsampled by deconvolution before fusion) so that the extracted features are multi-scale. At the input level, two images of different resolutions (one high, one low) are fed into two feature extraction networks with the same architecture, each of which extracts multi-scale features at its own resolution; the low-resolution multi-scale features are then upsampled and fused with those from the high-resolution network to obtain richer multi-scale features.

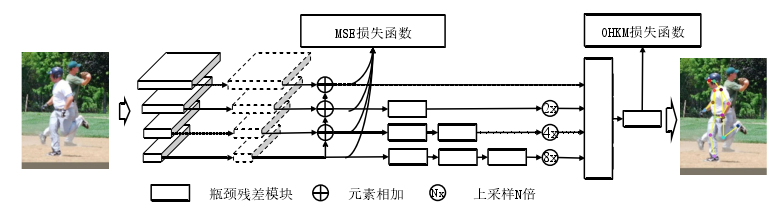
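The branch-and-fuse pattern of the pyramid residual module described above can be sketched on a single feature map. This is a deliberately reduced illustration: the learned convolutions in each branch are omitted, average pooling and nearest-neighbour upsampling are assumed stand-ins for the sampling layers, the ratios `(1, 2, 4)` are illustrative, and all function names are hypothetical.

```python
import numpy as np

def avg_pool(feat, k):
    """Downsample an (H, W) map by averaging non-overlapping k x k blocks."""
    h, w = feat.shape
    return feat.reshape(h // k, k, w // k, k).mean(axis=(1, 3))

def upsample_nearest(feat, k):
    """Upsample an (H, W) map by repeating every value k times along each axis."""
    return np.repeat(np.repeat(feat, k, axis=0), k, axis=1)

def pyramid_fuse(feat, ratios=(1, 2, 4)):
    """Downsample at several ratios, bring each branch back to the input size, and sum."""
    h, w = feat.shape
    if any(h % r or w % r for r in ratios):
        raise ValueError("feature size must be divisible by every ratio")
    branches = [upsample_nearest(avg_pool(feat, r), r) for r in ratios]
    return np.sum(branches, axis=0)

features = np.arange(64, dtype=float).reshape(8, 8)
print(pyramid_fuse(features).shape)  # (8, 8)
```

Each branch summarizes the input over a different neighborhood size, so the fused output mixes fine and coarse context at every position.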

Improvement of the backbone network: Complex environmental factors in human pose estimation force the network to learn complex mappings, which calls for a more powerful backbone network. How to strengthen the model's learning ability from the perspective of the backbone is therefore a problem well worth studying. Backbone architectures are designed mainly with three goals in mind: enlarging the receptive field, distinguishing hard samples from easy ones, and maintaining high resolution. With the development of network architectures, especially residual networks, researchers proposed the Hourglass module and stacked several of them to form the stacked hourglass network. The stacked hourglass network applies intermediate supervision to the sub-network at each stage to counteract vanishing gradients. The hourglass module is built from residual modules. Inside it, features are downsampled and then upsampled several times, and features of the same resolution from the downsampling and upsampling paths are fused. The hourglass module thus extracts multi-scale features and, while enlarging the receptive field of the whole network, also reduces the model's computational load. Notably, backbones designed after the stacked hourglass model adopted its downsample-then-upsample design. The Cascaded Pyramid Network (CPN), shown in Figure 5, builds a similar structure from residual modules. In the figure, the part next to the input image is the residual network (the four solid squares, top to bottom), which transforms the high-resolution image into low-resolution features; several deconvolution layers (the four dotted squares, bottom to top) then gradually restore the low-resolution features to high resolution. In other words, the cascaded pyramid network uses the residual network as the backbone for downsampling and appends several upsampling layers to its back end.

Figure 5. Cascaded pyramid network
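The downsample, upsample, and same-resolution fusion pattern of the hourglass module can be sketched recursively. This is a structural sketch only: the residual blocks are omitted, pooling and nearest-neighbour upsampling are assumed stand-ins for the sampling layers, and the function names are hypothetical.

```python
import numpy as np

def down(feat):
    """Halve the resolution with 2 x 2 average pooling."""
    h, w = feat.shape
    return feat.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def up(feat):
    """Double the resolution with nearest-neighbour upsampling."""
    return np.repeat(np.repeat(feat, 2, axis=0), 2, axis=1)

def hourglass(feat, depth):
    """Go `depth` levels down and back up, fusing features of equal resolution."""
    if depth == 0:
        return feat
    skip = feat                               # branch kept at the current resolution
    inner = hourglass(down(feat), depth - 1)  # processed at half resolution
    return skip + up(inner)                   # fuse the two paths at the same size

out = hourglass(np.ones((16, 16)), depth=3)
print(out.shape)  # (16, 16)
```

Most of the work happens on the smaller maps, which is where the reduction in computation comes from, while the skip branches carry the fine detail back to the output.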

Although the network structures above address multi-scale feature extraction, receptive field enlargement, and vanishing gradients during training, the downsampling and upsampling operations they rely on lose information. To solve this problem, the High-Resolution Network (HRNet) was proposed. The network consists of multiple branches, each holding features of a different resolution: the topmost branch has the highest resolution and the bottom branch the lowest. Features of different resolutions from the different branches are fused to obtain multi-scale features. Because the top branch keeps the highest resolution unchanged throughout, the network retains high-resolution information and avoids information loss.
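The difference from the hourglass design is that every branch keeps its own resolution and the branches only exchange information. A minimal two-branch sketch of one such exchange is shown below; the learned convolutions are omitted, the resampling operators are assumed stand-ins, and `exchange` is a hypothetical name rather than an HRNet API.

```python
import numpy as np

def down2(feat):
    """Halve the resolution with 2 x 2 average pooling."""
    h, w = feat.shape
    return feat.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def up2(feat):
    """Double the resolution with nearest-neighbour upsampling."""
    return np.repeat(np.repeat(feat, 2, axis=0), 2, axis=1)

def exchange(high, low):
    """One fusion step: each branch receives the other branch's features,
    resampled to its own resolution, and keeps that resolution."""
    new_high = high + up2(low)
    new_low = low + down2(high)
    return new_high, new_low

high, low = exchange(np.ones((8, 8)), np.full((4, 4), 2.0))
print(high.shape, low.shape)  # (8, 8) (4, 4)
```

The high-resolution branch is never downsampled on its own path, so it does not have to reconstruct detail that was thrown away earlier.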

Introduction of attention mechanisms: Because the human brain has limited computing resources for vision, it must concentrate them on the part of the scene being processed; attention is a way of coping with information overload. Introducing an attention mechanism into a deep convolutional neural network helps the network focus on the regions that matter, which avoids implausible pose predictions and improves the results. Attention mechanisms fall into two types: those based on Conditional Random Fields (CRF) and spatial-channel attention.

2.2.3 Modeling the relationships between joints

Because the joints are spatially related, modeling the relationships between them explicitly can improve human pose estimation, and the constraints of the human body structure reduce the difficulty of the task. Relationship modeling falls into two kinds: methods based on graphical models and methods without graphical models. Graphical-model methods can be divided into two types: Markov random fields and conditional random fields. If the predicted heatmap contains false-positive regions (that is, the heatmap has peaks in several regions, not only where the joint actually lies), these regions reduce the prediction accuracy of the model. Methods without graphical models mainly use deep convolutional neural networks to learn the relationships between joints. Modeling these relationships with a tree or graph structure requires a problem-specific structure to be designed by hand and approximate inference when solving, whereas a chain structure avoids both issues. Similar to recurrent neural networks, chain structures use deep convolutional neural networks to learn the dependencies between adjacent joints. The method predefines the order in which joints are predicted. In the initial stage, a deep convolutional neural network predicts the heatmap of the first joint. In each subsequent stage, the image features and the heatmap of the most recently predicted joint serve as input for predicting the heatmap of the next adjacent joint in the predefined order.

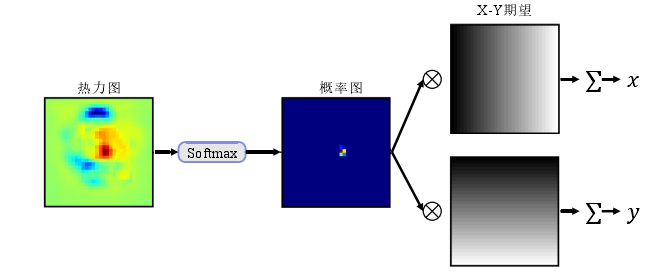
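The data flow of the chain structure described above (a fixed joint order, with each step conditioned on the previous joint's heatmap) can be sketched as a simple loop. The predictor passed in is a hypothetical stand-in for a trained convolutional network, and `predict_chain` and the joint names are illustrative assumptions.

```python
import numpy as np

def predict_chain(image_features, joint_order, predict_next):
    """Predict joint heatmaps one at a time in a predefined order.
    Each step receives the image features stacked with the previous heatmap."""
    heatmaps = {}
    previous = np.zeros_like(image_features)  # no earlier joint at the first step
    for name in joint_order:
        stacked = np.stack([image_features, previous])  # shape (2, H, W)
        previous = predict_next(name, stacked)
        heatmaps[name] = previous
    return heatmaps

# Assumption: a toy predictor that just adds its two input channels.
toy_predictor = lambda name, stacked: stacked[0] + stacked[1]
result = predict_chain(np.ones((4, 4)), ["shoulder", "elbow", "wrist"], toy_predictor)
print(list(result))  # ['shoulder', 'elbow', 'wrist']
```

Because every step depends only on the step before it, no hand-designed tree or graph and no approximate inference is needed; the order of the list is the whole structure.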

2.3 Coordinate regression method with a heatmap representation

The most distinctive coordinate-regression approach to human pose estimation is coordinate regression with a heatmap representation, which retains the advantages of both the heatmap representation and coordinate regression. Because coordinate regression learns a direct mapping from the image to the joint coordinates, it loses much of the spatial information about the human body, which hinders the network from learning spatial positions. Heatmap regression, by contrast, represents joint positions as heatmaps, and the network learns the mapping from the image to the heatmaps. The position information in the image is therefore fully used, spatial information is preserved, and accuracy is better. In the inference stage of heatmap-based methods, however, the position of the maximum activation in the heatmap is taken as the joint coordinate, and an inverse transformation converts that coordinate from heatmap space to the original image space. The quantization error introduced by taking the maximum position can cause a large deviation in the final coordinates in the original image space. In addition, taking the position of the maximum activation is not differentiable, which prevents the model from being optimized end-to-end. For this reason, researchers proposed a method for converting the heatmap into coordinates, as shown in Figure 6. The method uses the heatmap as an intermediate representation, normalizes it with softmax to turn it into a probability map, and then obtains precise joint coordinates by integrating over the probability map. This improvement unifies heatmap regression and coordinate regression and also avoids the quantization error of heatmap regression.

Figure 6. Coordinate regression method using a heatmap
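The softmax-then-integrate step can be sketched in a few lines and compared with taking the maximum. The function names, the scale parameter `beta`, and the synthetic heatmap (a quadratic bump centred at the sub-pixel location x=20.3, y=10.6) are assumptions made for this illustration.

```python
import numpy as np

def soft_argmax(heatmap, beta=1.0):
    """Softmax-normalise the heatmap into a probability map and return the
    expected (x, y) coordinate under that map."""
    h, w = heatmap.shape
    logits = beta * heatmap
    probs = np.exp(logits - logits.max())  # subtract the max for numerical stability
    probs /= probs.sum()
    x = (probs.sum(axis=0) * np.arange(w)).sum()  # expectation over columns
    y = (probs.sum(axis=1) * np.arange(h)).sum()  # expectation over rows
    return np.array([x, y])

def hard_argmax(heatmap):
    """Baseline decoding: integer location of the largest activation."""
    y, x = np.unravel_index(np.argmax(heatmap), heatmap.shape)
    return np.array([x, y], dtype=float)

# Synthetic heatmap whose true peak lies between pixel centres.
xs = np.arange(64)[None, :]
ys = np.arange(48)[:, None]
heatmap = -((xs - 20.3) ** 2 + (ys - 10.6) ** 2) / (2.0 * 2.0 ** 2)

print(hard_argmax(heatmap))  # [20. 11.]
print(soft_argmax(heatmap))  # approximately [20.3 10.6]
```

The maximum can only land on a pixel centre, so its error grows when the coordinate is scaled back to a larger original image, whereas the expectation is a smooth function of the heatmap values and therefore admits gradients for end-to-end training.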

3. Conclusion

With the continued development of artificial intelligence, human pose estimation based on deep learning keeps improving and its accuracy has risen significantly. However, current research largely ignores the demands that practical deployment places on inference efficiency (high speed, high precision, low computation), so existing models cannot be applied to small embedded devices. The main problem restricting the application of deep learning based human pose estimation is therefore how to maintain model accuracy while improving inference efficiency.