An introduction to classical methods of human behavior recognition in video data based on neural networks - Dr. Wei Yao

2021-08-03

Human behavior recognition in video refers to analyzing an unknown video and automatically identifying the behavior categories of the people in it. Researchers have proposed a variety of behavior recognition methods, of which two kinds are most commonly used: methods based on handcrafted (manual) feature extraction and methods based on deep learning. Each has its own advantages and disadvantages. Methods based on manual feature extraction can extract features tailored to specific needs and are easy to implement, but their recognition ability is limited by the features that are extracted. Methods based on deep learning can automatically learn features related to human behavior from a video data set and have good learning ability. However, training a model that performs well often requires a large amount of video data, demanding hardware and a long training time. In recent years, deep learning methods have achieved better recognition results than methods based on manual feature extraction. The behavior recognition methods based on deep learning (neural networks) are briefly introduced below [1].

The behavior recognition method based on deep learning feeds the video, or preprocessed video frames, directly into a network that learns the required features, and outputs the final classification result through a classifier such as an SVM or Softmax. Compared with manual feature extraction, deep learning methods generalize better and achieve higher recognition accuracy. Deep learning methods for behavior recognition mainly include methods based on recurrent neural networks, methods based on 3D convolutional neural networks and methods based on two-stream convolutional neural networks.

1. Behavior recognition method based on recurrent neural network

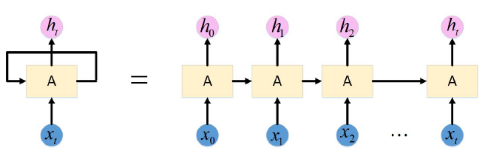

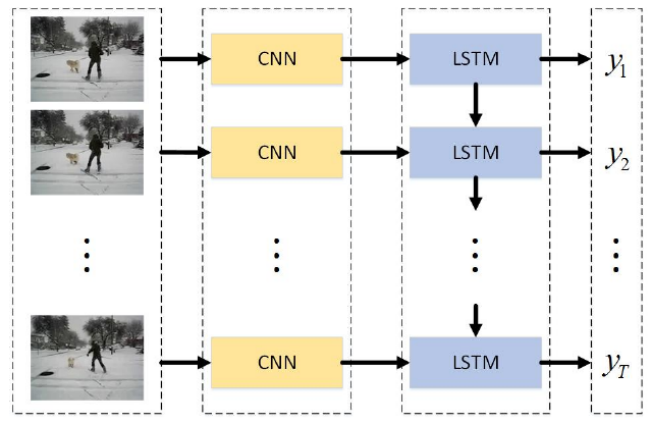

A recurrent neural network (RNN) [2] is a neural network designed for temporal data, which makes it suitable for video processing. Its structure is shown in Figure 1. At each time step, module A of the network reads an input x_t and outputs h_t. As the figure shows, the RNN passes information on to the next step, so information from earlier steps is preserved in every calculation step. Unrolling the structure on the left gives the chain structure on the right, which shows that an RNN is inherently tied to sequences. Long Short-Term Memory (LSTM) is a special kind of RNN, and [3] applies it to video behavior recognition tasks. Literature [4] proposes the Long-term Recurrent Convolutional Network (LRCN), shown in Figure 2. LRCN feeds every video frame into a CNN and then into an LSTM, and the output of the LSTM is the final output of the network. By connecting the LSTM to a conventional convolutional module in this way, a video behavior recognition model can be trained to capture temporal dependencies. In video behavior recognition, a recurrent neural network generally has to be combined with another network framework, its computational complexity is high, and its recognition performance is only moderate.

Figure 1. RNN model

Figure 2. Schematic diagram of the LRCN network structure
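
The recurrence described above can be illustrated with a minimal sketch in plain Python. It assumes the simplest form of the update, h_t = tanh(W_x x_t + W_h h_{t-1} + b), which the article does not spell out; the weights and the two toy "videos" are made-up values, and each frame is assumed to have already been reduced to a small feature vector (in LRCN that role is played by a CNN, and the plain recurrence is replaced by an LSTM).

```python
import math

def rnn_step(x_t, h_prev, W_x, W_h, b):
    """One recurrence step: h_t = tanh(W_x x_t + W_h h_prev + b)."""
    h_t = []
    for i in range(len(b)):
        s = b[i]
        s += sum(W_x[i][j] * x_t[j] for j in range(len(x_t)))
        s += sum(W_h[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_t.append(math.tanh(s))
    return h_t

def run_rnn(frames, W_x, W_h, b):
    """Unroll the recurrence over a sequence of per-frame feature vectors."""
    h = [0.0] * len(b)          # initial hidden state
    states = []
    for x_t in frames:
        h = rnn_step(x_t, h, W_x, W_h, b)
        states.append(h)
    return states

# Toy weights (illustrative values only): 2-dim input, 2-dim hidden state.
W_x = [[0.5, -0.3], [0.1, 0.8]]
W_h = [[0.9, 0.0], [0.0, 0.9]]
b = [0.0, 0.0]

# Two sequences that end on the same frame but differ in the earlier frame.
video_a = [[1.0, 0.0], [0.0, 1.0]]
video_b = [[0.0, 0.0], [0.0, 1.0]]

h_a = run_rnn(video_a, W_x, W_h, b)[-1]
h_b = run_rnn(video_b, W_x, W_h, b)[-1]
print(h_a, h_b)  # the final states differ although the last frame is identical
```

Because the hidden state is carried from step to step, the final state depends on the earlier frame as well as the last one, which is the property that makes the chain structure useful for video.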

2. Behavior recognition method based on 3D convolutional neural network

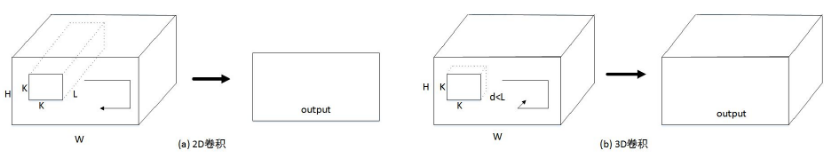

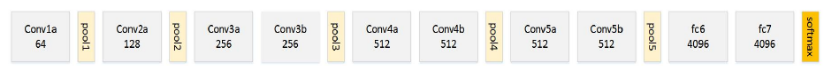

2D convolution is commonly used for image tasks such as segmentation, recognition and detection, but in video processing it cannot capture temporal information well. As Figure 3(a) shows, a multi-channel 2D convolution that takes multiple frames as input outputs only a single two-dimensional feature map, so the information from the multiple frames is compressed. The 3D convolution in Figure 3(b) outputs a 3D feature map, which can capture temporal information. 3D convolution is therefore suitable for video-related tasks. The Facebook team proposed C3D [5], a generic network for video analysis. Its structure is shown in Figure 4; it ends with two fully connected layers and a Softmax classifier. C3D is trained end to end: video data is fed in directly, 3D convolutions extract spatio-temporal features that capture the temporal information of the video, and the Softmax classifier outputs the classification result. The biggest advantage of this method is its fast computing speed. The disadvantage is that models trained on small and medium-sized video data sets perform poorly. In video behavior recognition, 3D convolutional networks are usually trained end to end, which requires a large video data set. Compared with two-stream networks, 3D convolutional networks have more parameters; they are computationally efficient but place high demands on hardware.

Figure 3. Comparison of 2D convolution and 3D convolution

Figure 4. C3D network structure
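
The difference between the two operations in Figure 3 can be sketched in a few lines of plain Python. This is only an illustration under simplifying assumptions (single output channel, no padding, stride 1, all-ones kernels and toy frame values chosen here for the example), not the C3D implementation.

```python
def conv2d_multichannel(frames, kernel):
    """2D convolution that treats the T input frames as channels.

    frames: T x H x W nested lists; kernel: T x kH x kW.
    All frames are summed into one value per position, so the
    output is a single 2D map with no time axis.
    """
    T, H, W = len(frames), len(frames[0]), len(frames[0][0])
    kH, kW = len(kernel[0]), len(kernel[0][0])
    out = []
    for i in range(H - kH + 1):
        row = []
        for j in range(W - kW + 1):
            row.append(sum(
                frames[t][i + di][j + dj] * kernel[t][di][dj]
                for t in range(T) for di in range(kH) for dj in range(kW)
            ))
        out.append(row)
    return out

def conv3d(frames, kernel):
    """3D convolution: the kT x kH x kW kernel also slides along time.

    Each temporal window of kT frames yields one 2D map, so the
    output is T' x H' x W' and keeps a time axis.
    """
    kT = len(kernel)
    return [conv2d_multichannel(frames[t:t + kT], kernel)
            for t in range(len(frames) - kT + 1)]

def shape(a):
    """Shape of a regular nested list, e.g. (3, 2, 2)."""
    dims = []
    while isinstance(a, list):
        dims.append(len(a))
        a = a[0]
    return tuple(dims)

# Toy clip: 4 frames of 3x3 pixels, where frame t is filled with the value t.
frames = [[[float(t)] * 3 for _ in range(3)] for t in range(4)]
kernel_2d = [[[1.0] * 2 for _ in range(2)] for _ in range(4)]  # spans all 4 frames
kernel_3d = [[[1.0] * 2 for _ in range(2)] for _ in range(2)]  # spans 2 frames

out_2d = conv2d_multichannel(frames, kernel_2d)
out_3d = conv3d(frames, kernel_3d)
print(shape(out_2d))  # (2, 2)
print(shape(out_3d))  # (3, 2, 2)
```

In this toy clip the 3D output changes from one temporal position to the next, while the 2D output has merged all four frames into a single map, which is the compression the text describes.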

3. Behavior recognition method based on two-stream convolutional neural network

Simonyan et al. [6] proposed the two-stream method. The model structure is shown in Figure 5. The two-stream network is divided into two branches, a spatial stream and a temporal stream, the latter extracting features related to the motion of the target. The main idea is to preprocess the video into RGB frames and optical flow images, and then feed the RGB frames into the spatial stream network and the optical flow images into the temporal stream network. In [6] the two networks are trained separately, and their class scores are fused by direct averaging or by an SVM to obtain the final classification result. The two-stream method achieves good recognition results on the UCF101 and HMDB51 video data sets.

Figure 5. Two-stream network structure
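
The fusion-by-averaging step can be sketched as follows. The class names and the raw scores of the two streams are hypothetical values chosen for illustration; in a real system they would come from the trained spatial and temporal networks.

```python
import math

def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(scores)                       # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def fuse_by_averaging(spatial_scores, temporal_scores):
    """Late fusion: average the per-class probabilities of the two streams."""
    p_spatial = softmax(spatial_scores)
    p_temporal = softmax(temporal_scores)
    return [(a + b) / 2 for a, b in zip(p_spatial, p_temporal)]

# Hypothetical classes and raw scores, for illustration only.
classes = ["walk", "run", "jump"]
spatial_scores = [2.0, 1.9, 0.1]    # appearance alone slightly favors "walk"
temporal_scores = [0.2, 2.5, 0.3]   # motion clearly favors "run"

fused = fuse_by_averaging(spatial_scores, temporal_scores)
predicted = classes[max(range(len(fused)), key=fused.__getitem__)]
print(predicted)  # run
```

Here the spatial stream alone cannot separate two visually similar actions, and the motion evidence from the temporal stream decides the fused prediction. Fusion with an SVM would replace the averaging step with a classifier trained on the stacked scores.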

Compared with the other two methods, human behavior recognition in video based on the two-stream convolutional neural network has fewer parameters and lower hardware requirements, and the recognition performance of the model is also better.

[1] Li Shuyi. Research on Video Behavior Recognition Based on Two-stream Convolutional Neural Network [D]. Guangzhou University, 2020.

[2] Bengio Y, Simard P, Frasconi P. Learning long-term dependencies with gradient descent is difficult[J]. IEEE Transactions on Neural Networks, 1994, 5(2):157-166.

[3] Joe Yue-Hei Ng, M. Hausknecht, S. Vijayanarasimhan, O. Vinyals, R. Monga and G. Toderici. Beyond short snippets: Deep networks for video classification[C]// IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2015:4694-4702.

[4] J. Donahue et al. Long-term recurrent convolutional networks for visual recognition and description[C]// IEEE Conference on Computer Vision and Pattern Recognition (CVPR). IEEE, 2015:2625-2634.

[5] Tran D, Bourdev L, Fergus R, et al. Learning Spatiotemporal Features with 3D Convolutional Networks[C]// IEEE International Conference on Computer Vision. IEEE, 2015:4489-4497.

[6] Simonyan K, Zisserman A. Two-Stream Convolutional Networks for Action Recognition in Videos[J]. Neural Information Processing Systems, 2014, 1(4):568-576.