5G Networks Facilitate XR Edge Computing and Intelligent XR Video Transcoding -- Yang Junchao

2020-11-05

1. Introduction

Over the past two years, 5G networks have been progressively deployed and commercialized around the world. The rollout, however, has not been smooth sailing. Among ordinary consumers, demand for 5G has not been fully aroused, and the advantages of 5G over 4G have not been clearly demonstrated, because typical applications that would guide and stimulate demand are lacking. On the operator side, there is still no typical "killer" application to drive 5G deployment and construction (in the 4G era, for example, mobile payment and short-video applications greatly accelerated the adoption of 4G networks). At present, XR (VR/AR) is the most promising typical application on the market for driving the deployment and development of 5G networks.

Current XR (especially VR) content is delivered mainly through local playback or wired transmission. On the one hand, because of the limited rendering capability of current head-mounted display (HMD) hardware, most VR video is first rendered on a computer with sufficient rendering power and then sent to the HMD over a High-Definition Multimedia Interface (HDMI) cable for playback, which limits VR application scenarios to some degree. On the other hand, mobile VR devices on the market use smartphones for VR video rendering, which frees the HMD from cables; however, the limited rendering power and resolution of smartphones also degrade the user experience. Current XR video transmission can satisfy only a basic XR experience. A large gap remains between this and the industry's demand for a high-quality XR experience anytime and anywhere, which restricts the development and popularization of XR. Real-time wireless transmission of XR content is undoubtedly the key to XR mobility, and with the rollout of 5G, how to use 5G networks to support XR has become a focus of industry attention.

The three major application scenarios for 5G are enhanced Mobile Broadband (eMBB), massive Machine-Type Communications (mMTC), and Ultra-Reliable Low-Latency Communications (URLLC). Wireless virtual reality, augmented reality, and mixed reality are very special applications because they place high demands on large bandwidth, ultra-high reliability, and low latency at the same time: under a low-latency constraint (VR requires a motion-to-photon (MTP) latency within 20 milliseconds, and AR requires latency within 5 milliseconds), data at rates of thousands of megabits per second (that is, gigabits per second) must be delivered to a user terminal. Low latency and high reliability are well known to be conflicting requirements: ultra-high reliability needs more resources allocated to a user to ensure the transmission success rate, but this increases latency for other users. Achieving wireless VR/AR connectivity therefore requires intelligent network design that delivers reliability, low latency, and seamless support for different network scenarios.
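The latency constraint above can be made concrete with a small calculation. The following is a minimal sketch, not a model from this article: the function names, the 10-megabit frame size, the 1 Gbit/s link rate, and the 8 ms allowance for rendering and processing are hypothetical values chosen for illustration. Only the 20 ms and 5 ms budgets come from the text.

```python
VR_MTP_BUDGET_MS = 20.0  # VR motion-to-photon budget stated in the text
AR_BUDGET_MS = 5.0       # AR latency budget stated in the text


def transmission_time_ms(payload_bits: float, link_rate_bps: float) -> float:
    """Time to push a payload over a link, ignoring propagation and queuing."""
    return payload_bits * 1000.0 / link_rate_bps


def fits_budget(payload_bits: float, link_rate_bps: float,
                other_delays_ms: float, budget_ms: float) -> bool:
    """True if transmission plus all other delays stays within the budget."""
    total_ms = transmission_time_ms(payload_bits, link_rate_bps) + other_delays_ms
    return total_ms <= budget_ms


# Hypothetical example: a 10-megabit frame over a 1 Gbit/s link,
# with 8 ms assumed for rendering, encoding, and decoding.
frame_bits = 10e6
link_bps = 1e9
print(transmission_time_ms(frame_bits, link_bps))                 # 10.0
print(fits_budget(frame_bits, link_bps, 8.0, VR_MTP_BUDGET_MS))   # True
print(fits_budget(frame_bits, link_bps, 8.0, AR_BUDGET_MS))       # False
```

Under these assumed numbers the same frame meets the VR budget but not the AR budget, which illustrates why the payload size and the processing path both have to shrink as the latency target tightens.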

2. XR content transmission using a cache-and-transcode mechanism based on 5G mobile edge computing

Mobile Edge Computing (MEC) has been a research focus in recent years. Because MEC servers are deployed close to users, users can offload computationally heavy tasks to them. In XR video transmission, the power and computing capacity of the user terminal are limited, so offloading decoding, rendering, and other compute-intensive tasks to the MEC server is a feasible way to make XR head-mounted displays lightweight. At the same time, how to use MEC-based content caching and transcoding for adaptive transmission, so as to meet the low-latency requirement, is an important research direction in XR content transmission.

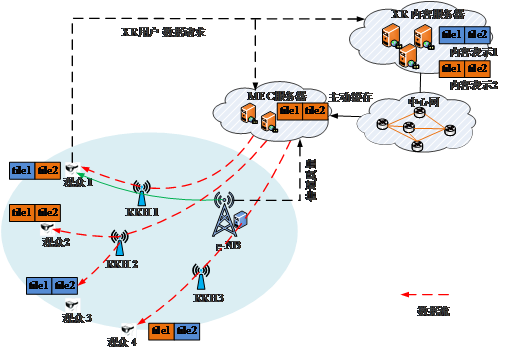

As shown in the figure, we propose an XR content transmission system based on a cache-and-transcode mechanism built on 5G mobile edge computing. It consists of three main parts: the XR content server, the Mobile Edge Computing (MEC) server, and the 5G heterogeneous cloud radio access network (H-CRAN). The system is described in detail below.

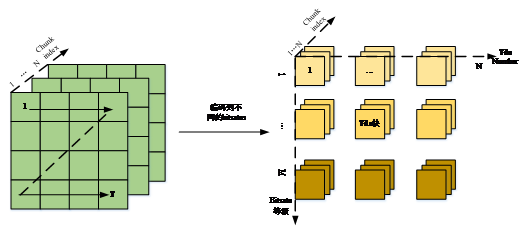

The XR content server is mainly responsible for storing the compressed and encoded XR video. With tile-based compression coding, the XR video is spatially segmented into independently decodable tiles, each identified by an index. In general, the content server generates multiple bitrate versions of each tile for adaptive transmission, ranging from the smallest to the largest compressed bitrate of that tile. Each tile bitstream is further segmented into chunks along the time axis, as illustrated in the tile-based compression coding diagram.
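The tile, bitrate, and chunk structure described above can be sketched as a simple catalog. This is a minimal illustration under stated assumptions, not the article's data model: the names `Segment` and `build_catalog`, the 2x3 tile grid, and the bitrate ladder values are all hypothetical.

```python
from dataclasses import dataclass
from itertools import product
from typing import List, Sequence


@dataclass(frozen=True)
class Segment:
    tile: int          # index of the tile within the frame grid
    chunk: int         # index of the chunk along the time axis
    bitrate_kbps: int  # one bitrate version of this tile chunk


def build_catalog(rows: int, cols: int, num_chunks: int,
                  ladder_kbps: Sequence[int]) -> List[Segment]:
    """Enumerate every (tile, chunk, bitrate) version a content server would store."""
    tiles = range(rows * cols)
    chunks = range(num_chunks)
    return [Segment(t, c, b) for t, c, b in product(tiles, chunks, sorted(ladder_kbps))]


# Hypothetical example: a 2x3 tile grid, 4 chunks, 3 bitrate versions per tile.
catalog = build_catalog(rows=2, cols=3, num_chunks=4, ladder_kbps=[500, 1000, 2000])
print(len(catalog))   # 72 stored segments (6 tiles * 4 chunks * 3 bitrates)
print(catalog[0])     # Segment(tile=0, chunk=0, bitrate_kbps=500)
```

The count grows multiplicatively with tiles, chunks, and bitrate versions, which is one reason storing every version at the edge is costly.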

The mobile edge computing server is located at the edge of the H-CRAN and is capable of caching XR content in advance and transcoding it in real time. When a user terminal within range of the H-CRAN requests XR video content, the requested data is returned from the mobile edge computing server. In this scheme, only the maximum-bitrate version of each tile of the XR video is cached on the mobile edge computing server. The real-time transcoding module on the server then provides each user with an appropriate tile version: it selects suitable quantization parameters (QPs), transcodes the tile, and transmits the result.
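The cache-then-transcode flow above can be sketched as follows. This is a minimal illustration, not the article's algorithm: it assumes that the "appropriate" version is the highest bitrate that fits a per-tile bandwidth budget, and it works with target bitrates rather than QPs, since the text does not give a QP selection rule. The names `pick_version` and `serve_tile` and all numbers are hypothetical.

```python
from typing import Dict, Sequence, Tuple


def pick_version(ladder_kbps: Sequence[int], per_tile_budget_kbps: float) -> int:
    """Highest ladder bitrate that fits the budget; falls back to the lowest one."""
    rungs = sorted(ladder_kbps)
    fitting = [r for r in rungs if r <= per_tile_budget_kbps]
    return fitting[-1] if fitting else rungs[0]


def serve_tile(cache_kbps: Dict[int, int], tile: int,
               ladder_kbps: Sequence[int],
               per_tile_budget_kbps: float) -> Tuple[int, bool]:
    """Return (bitrate to send, whether transcoding is needed) for one tile."""
    cached = cache_kbps[tile]  # only the maximum-bitrate version is cached
    target = pick_version(ladder_kbps, per_tile_budget_kbps)
    if target >= cached:
        return cached, False   # send the cached version unchanged
    return target, True        # transcode down to the target bitrate


# Hypothetical example: tile 0 is cached at 8000 kbps.
cache = {0: 8000}
ladder = [1000, 2000, 4000, 8000]
print(serve_tile(cache, 0, ladder, 2500))   # (2000, True)
print(serve_tile(cache, 0, ladder, 9000))   # (8000, False)
print(serve_tile(cache, 0, ladder, 500))    # (1000, True)
```

Caching only the top version trades edge storage for edge computation: lower versions are produced on demand instead of being stored.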

Using MEC for advance content caching and real-time transcoding pushes content to servers closer to the user terminals, thereby reducing end-to-end latency. In the H-CRAN, several micro base stations are deployed within the coverage of a macro base station; the micro base stations and the macro base station are connected to a centralized baseband pool via fronthaul and backhaul links, respectively. The remote radio unit serves only as an RF transceiver and is responsible for basic RF functions, while the baseband pool handles upper-layer protocols and baseband processing. The system adopts software-defined networking to separate control information from data: control information is transmitted through the gNB macro station, and data is transmitted through the Remote Radio Heads (RRHs) close to the user.
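The control/data separation described above can be sketched as a simple routing rule. This is an illustrative toy under stated assumptions, not part of any 5G API: the function `route`, the message kinds, the node identifiers, and the use of the geometrically nearest RRH as "close to the user" are all hypothetical.

```python
import math
from typing import Dict, Tuple

Position = Tuple[float, float]


def route(message_kind: str, user_pos: Position, macro_id: str,
          rrh_positions: Dict[str, Position]) -> str:
    """Pick the node that carries a message: macro station for control, nearest RRH for data."""
    if message_kind == "control":
        return macro_id
    if message_kind == "data":
        # Assumption: "close to the user" is modeled as smallest Euclidean distance.
        return min(rrh_positions,
                   key=lambda rrh_id: math.dist(user_pos, rrh_positions[rrh_id]))
    raise ValueError(f"unknown message kind: {message_kind!r}")


# Hypothetical layout: one macro station and two RRHs.
rrhs = {"rrh1": (1.0, 1.0), "rrh2": (5.0, 5.0)}
print(route("control", (4.0, 4.0), "gnb-macro", rrhs))   # gnb-macro
print(route("data", (4.0, 4.0), "gnb-macro", rrhs))      # rrh2
```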

3. Conclusion

The ultimate goal of XR is to make the boundary between the synthesized virtual world and the real world indistinguishable, and letting people move freely without wired connections is an important step toward that goal. An MEC-based cache-and-transcode mechanism can significantly improve the XR user experience and promote the development of XR. In future 5G networks, when multiple users request multiple pieces of XR content, making reasonable caching and transcoding decisions with limited MEC storage and computing power will be challenging. This is also an important direction of our future research.
